Information Theory Tutorial

arXiv 1802.05968

1) Intro

2) Finding a route, bit by bit

3) Bits are NOT binary digits

4) Information & Entropy

5) Entropy - Continuous Variables

6) Max Entropy Distributions

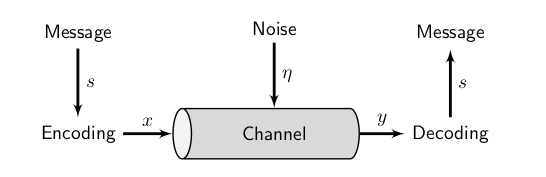

7) Channel Capacity

8) Shannon's Source Coding Theorem

9) Noise & Channel Capacity

10) Mutual Information

11) Shannon's Noisy Channel Coding Theorem

12) Gaussian Channels

13) Fourier Analysis

14) History (very, very short)

15) Key Equations

16) Resources
