semiconductors/gpus

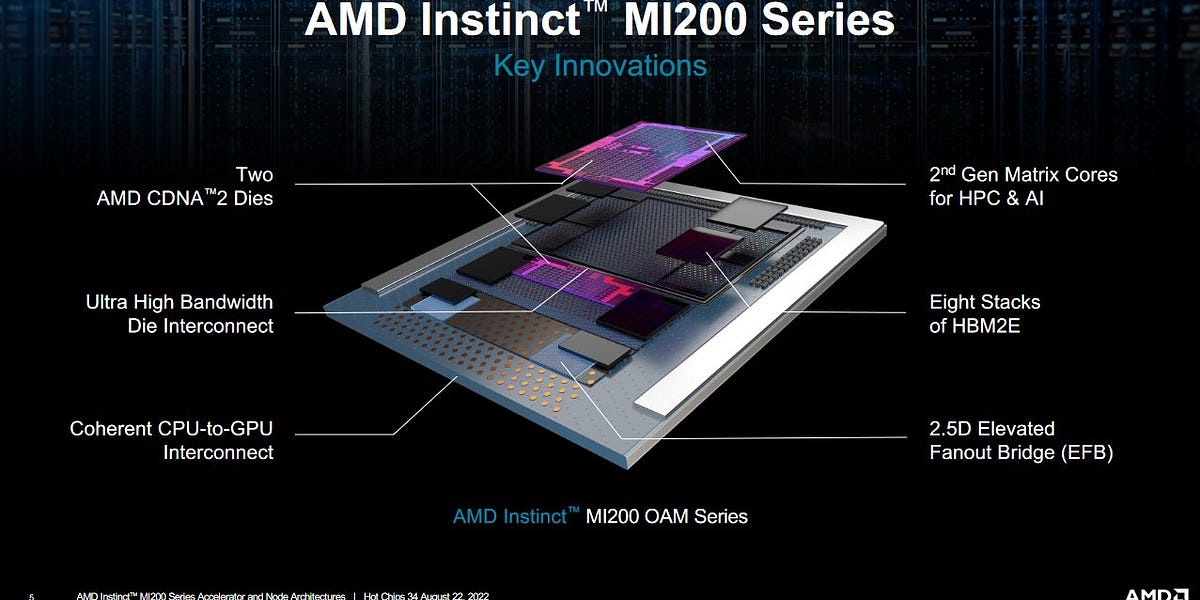

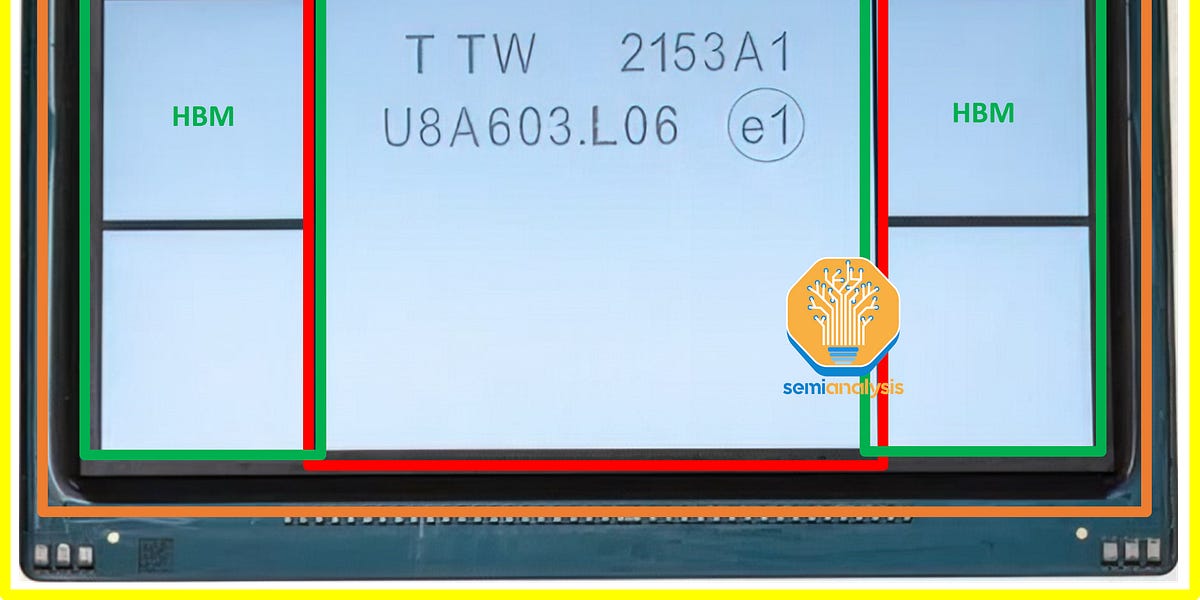

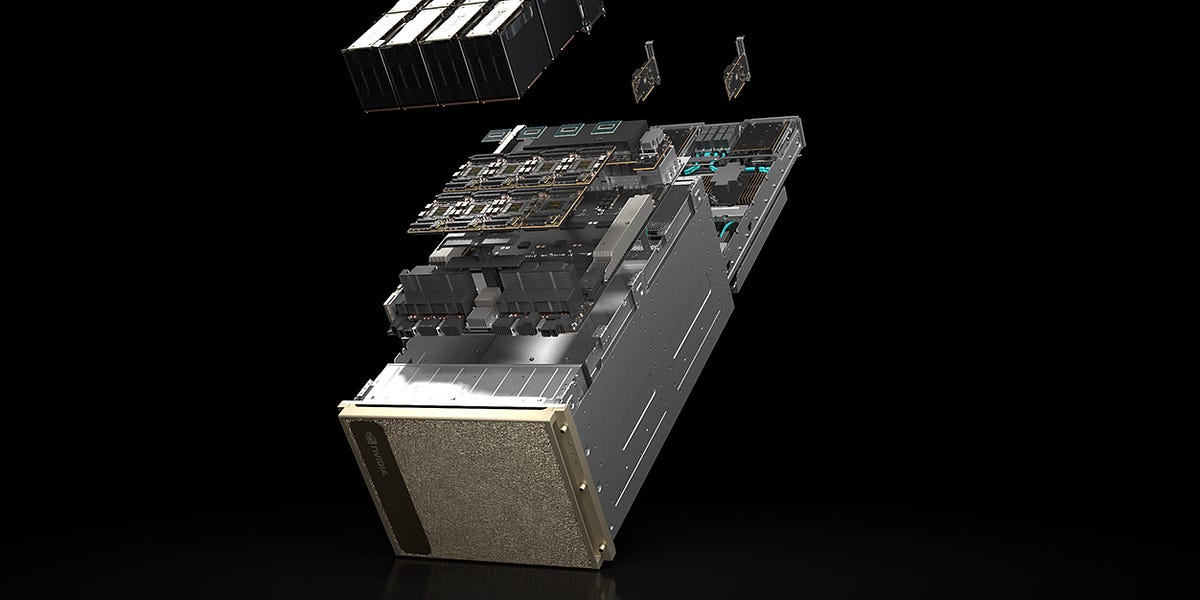

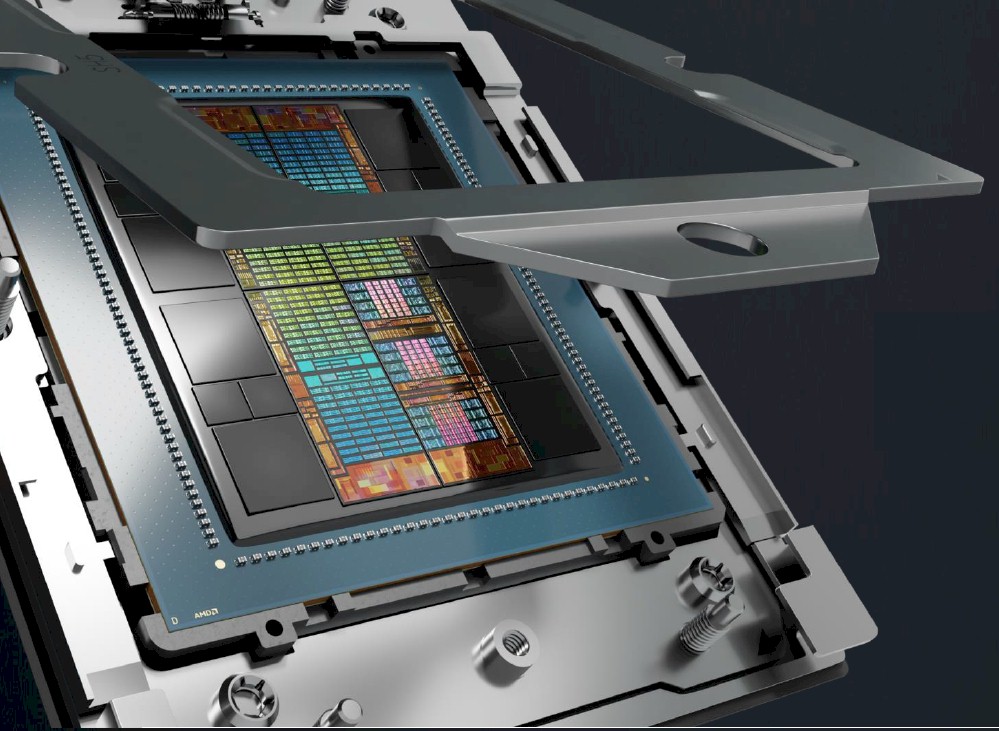

The Future of AI Accelerators: A Roadmap of Industry Leaders

The AI hardware race is heating up, with major players like NVIDIA, AMD, Intel, Google, Amazon, and more unveiling their upcoming AI accelerators. Here’s a quick breakdown of the latest trends.

Key Takeaways:

- NVIDIA Dominance: NVIDIA continues to lead with a robust roadmap, extending from H100 to future Rubin and Rubin Ultra chips with HBM4 memory by 2026-2027.
- AMD’s Competitive Push: AMD’s MI300 series is already competing, with MI350 and future MI400 models on the horizon.
- Intel’s AI Ambitions: Gaudi accelerators are growing, with Falcon Shores on track for a major memory upgrade.
- Google & Amazon’s Custom Chips: Google’s TPU lineup expands rapidly, while Amazon’s Trainium & Inferentia gain traction.
- Microsoft & Meta’s AI Expansion: Both companies are pushing their AI chip strategies with Maia and MTIA projects, respectively.
- Broadcom & ByteDance Join the Race: New challengers are emerging, signaling increased competition in AI hardware.

What This Means: With the growing demand for AI and LLMs, companies are racing to deliver high-performance AI accelerators with advanced HBM (High Bandwidth Memory) configurations. The next few years will be crucial in shaping the AI infrastructure landscape.

$NVDA $AMD $INTC $GOOGL $AMZN $META $AVGO $ASML $BESI

Getting 'low level' with Nvidia and AMD GPUs

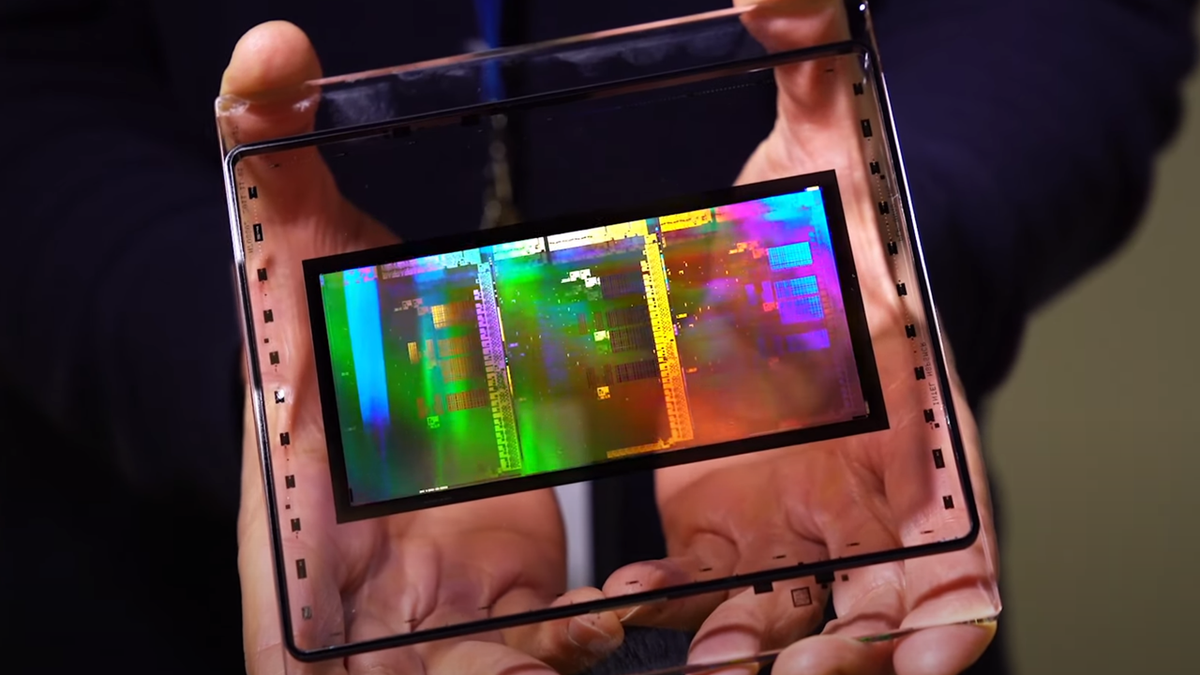

Intel's first Arc B580 GPUs based on the Xe2 "Battlemage" architecture have been leaked & they look quite compelling.

Datacenter GPUs and some consumer cards now exceed performance limits

Beijing will be thrilled by this nerfed silicon

GPT-4 Profitability, Cost, Inference Simulator, Parallelism Explained, Performance TCO Modeling In Large & Small Model Inference and Training

While a lot of people focus on the floating point and integer processing architectures of various kinds of compute engines, we are spending more and more

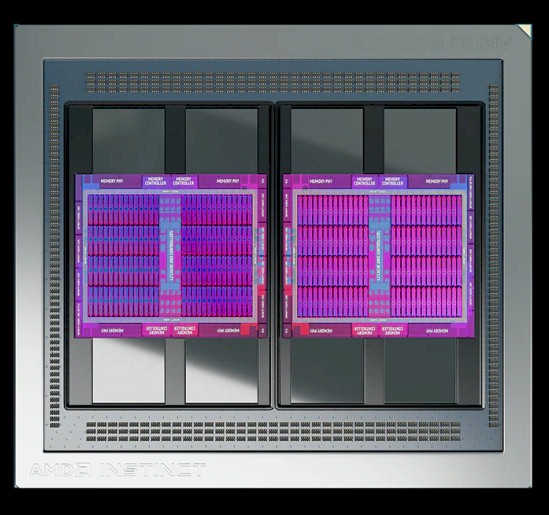

Lenovo, the firm emerging as a driving force behind AI computing, has expressed tremendous optimism about AMD's Instinct MI300X accelerator.

Chafing at their dependence, Amazon, Google, Meta and Microsoft are racing to cut into Nvidia’s dominant share of the market.

AMD, Nvidia, and Intel have all diverged their GPU architectures to separately optimize for compute and graphics.

Quarterly Ramp for Nvidia, Broadcom, Google, AMD, AMD Embedded (Xilinx), Amazon, Marvell, Microsoft, Alchip, Alibaba T-Head, ZTE Sanechips, Samsung, Micron, and SK Hynix

GDDR7 is getting closer, says Micron.

Though it'll arrive just in time for mid-cycle refresh from AMD, Nvidia, and Intel, it's unclear if there will be any takers just yet.

Micron $MU looks very weak in AI

The great thing about the Cambrian explosion in compute that has been forced by the end of Dennard scaling of clock frequencies and Moore’s Law lowering

GPUs may dominate, but CPUs could be perfect for smaller AI models

Google's new machines combine Nvidia H100 GPUs with Google’s high-speed interconnections for AI tasks like training very large language models.

Faster masks, less power.

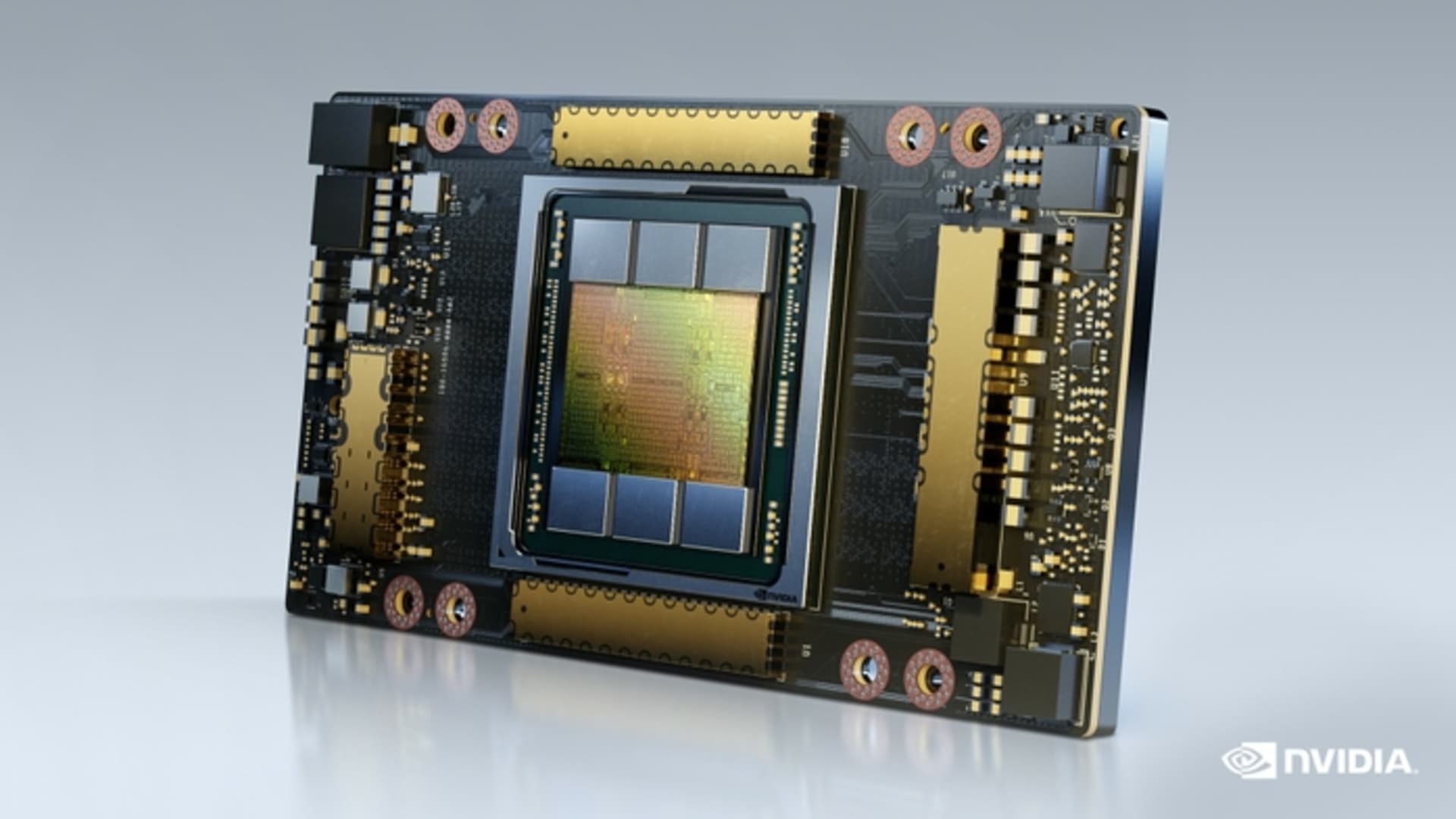

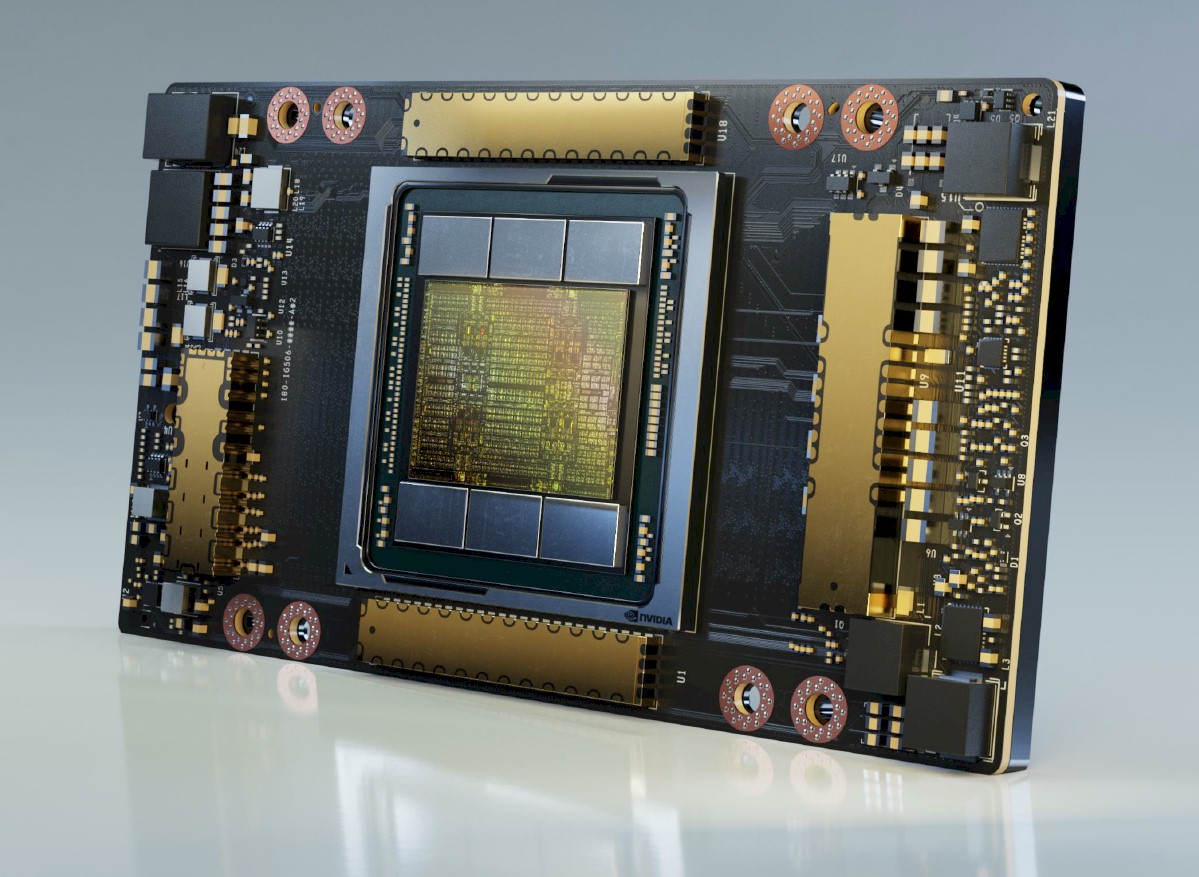

The $10,000 Nvidia A100 has become one of the most critical tools in the artificial intelligence industry,

Here, I provide an in-depth analysis of GPUs for deep learning/machine learning and explain what is the best GPU for your use-case and budget.

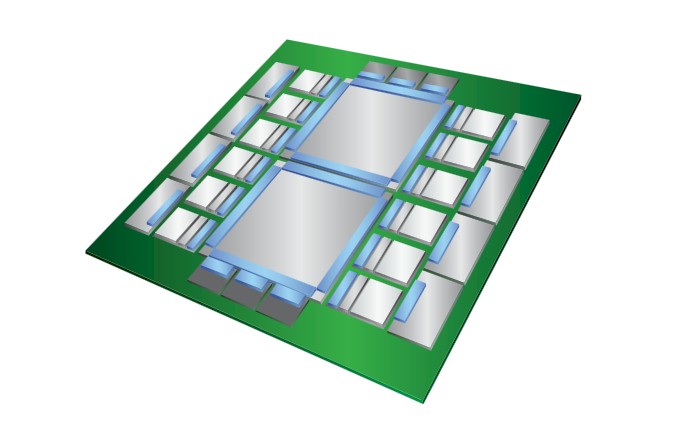

There are two types of packaging that represent the future of computing, and both will have validity in certain domains: Wafer scale integration and

Nvidia has staked its growth in the datacenter on machine learning. Over the past few years, the company has rolled out features in its GPUs aimed at neural

The modern GPU compute engine is a microcosm of the high performance computing datacenter at large. At every level of HPC – across systems in the

Like its U.S. counterpart, Google, Baidu has made significant investments to build robust, large-scale systems to support global advertising programs. As

Its second analog AI chip is optimized for different card sizes, but still aimed at computer vision workloads at the edge.

Current custom AI hardware devices are built around super-efficient, high performance matrix multiplication. This category of accelerators includes the

What makes a GPU a GPU, and when did we start calling it that? Turns out that’s a more complicated question than it sounds.

AMD is one of the oldest designers of large scale microprocessors and has been the subject of polarizing debate among technology enthusiasts for nearly 50 years. Its...

One of the main tenets of the hyperscalers and cloud builders is that they buy what they can and they only build what they must. And if they are building

AMD ROCm documentation

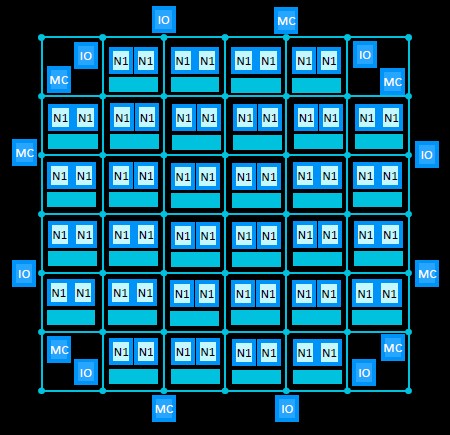

Long-time Slashdot reader UnknowingFool writes: AMD filed a patent on using chiplets for a GPU with hints on why it has waited this long to extend their CPU strategy to GPUs. The latency between chiplets poses more of a performance problem for GPUs, and AMD is attempting to solve the problem with a ...

Micron's GDDR6X is one of the star components in Nvidia's RTX 3080 and 3090 video cards. It's so fast it should boost gaming past the 4K barrier.

When you have 54.2 billion transistors to play with, you can pack a lot of different functionality into a computing device, and this is precisely what

In this tutorial, you will learn how to get started with your NVIDIA Jetson Nano, including installing Keras + TensorFlow, accessing the camera, and performing image classification and object detection.

This post has been split into a two-part series to work around Reddit’s per-post character limit. Please find Part 2 in the…

Making Waves in Deep Learning: How deep learning applications will map onto a chip.

A new crop of applications is driving the market along some unexpected routes, in some cases bypassing the processor as the landmark for performance and