ChatGPT prompting

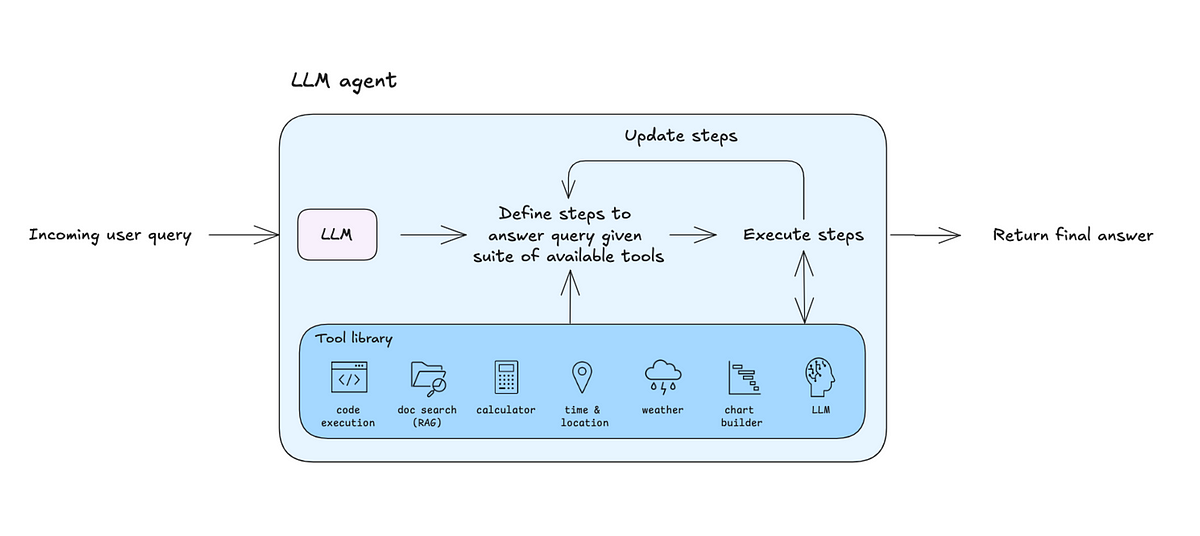

Building a fully functional, code-editing agent in less than 400 lines.

Doug Turnbull recently wrote about how [all search is structured now](https://softwaredoug.com/blog/2025/04/02/all-search-structured-now): Many times, even a small open source LLM will be able to turn a search query into reasonable …

Google's Gemma 3 model (the 27B variant is particularly capable, I've been trying it out [via Ollama](https://ollama.com/library/gemma3)) supports function calling exclusively through prompt engineering. The official documentation describes two recommended …
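As a rough illustration of what "function calling through prompt engineering" can look like in general, here is a minimal sketch. It is not the format from the Gemma documentation (the blurb above is cut off before describing it). The JSON reply shape, the `get_weather` tool, and all helper names are assumptions made up for this example.

```python
import json

# Hypothetical tool definition; the schema layout is an assumption for this sketch.
TOOLS = [
    {
        "name": "get_weather",
        "description": "Return a short weather report for a city.",
        "parameters": {"city": "string"},
    }
]


def get_weather(city):
    """Stand-in implementation so the example runs without a network call."""
    return f"Sunny in {city}"


# Maps tool names the model may emit to local Python callables.
REGISTRY = {"get_weather": get_weather}


def build_tool_prompt(user_message, tools):
    """Embed the tool definitions directly in the prompt text."""
    tool_block = json.dumps(tools, indent=2)
    return (
        "You have access to the following functions:\n"
        f"{tool_block}\n"
        "If a function is needed, reply with only a JSON object of the form "
        '{"name": <function name>, "parameters": {...}}.\n\n'
        f"User: {user_message}"
    )


def parse_tool_call(reply):
    """Return (name, parameters) if the reply is a JSON tool call, else None."""
    try:
        data = json.loads(reply.strip())
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or "name" not in data:
        return None
    return data["name"], data.get("parameters", {})


def dispatch(reply):
    """Run the requested tool, or pass plain-text replies through unchanged."""
    call = parse_tool_call(reply)
    if call is None:
        return reply
    name, params = call
    if name not in REGISTRY:
        return reply
    return REGISTRY[name](**params)
```

The prompt from `build_tool_prompt` would be sent to whatever model is in use, and the raw text reply passed to `dispatch`. Replies that are not valid JSON are treated as ordinary answers.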

Solid techniques to get really good results from any LLM

OpenAI's president Greg Brockman recently shared this cool template for prompting their reasoning models o1/o3. Turns out, this is great for ANY reasoning…

Johann Rehberger snagged a copy of the [ChatGPT Operator](https://simonwillison.net/2025/Jan/23/introducing-operator/) system prompt. As usual, the system prompt doubles as better written documentation than any of the official sources. It asks users …

Prompt engineering is crucial to leveraging ChatGPT's capabilities, enabling users to elicit relevant, accurate, high-quality responses from the model. As language models like ChatGPT become more sophisticated, mastering the art of crafting effective prompts has become essential. This comprehensive overview delves into prompt engineering principles, techniques, and best practices, providing a detailed understanding drawn from multiple authoritative sources. Understanding Prompt Engineering: Prompt engineering involves the deliberate design and refinement of input prompts to influence the output of a language model like ChatGPT. The efficacy of a prompt directly impacts the relevance and coherence of the AI's responses. Effective prompt engineering

Amazon trained me to write evidence-based narratives. I love the format. It’s a clear and compelling way to present information to drive…

In the developing field of Artificial Intelligence (AI), the ability to think quickly has become increasingly significant. The necessity of communicating with AI models efficiently becomes critical as these models get more complex. In this article we will explain a number of sophisticated prompt engineering strategies, simplifying these difficult ideas through straightforward human metaphors. The techniques and their examples have been discussed to see how they resemble human approaches to problem-solving. Chaining Methods. Analogy: solving a problem step by step. Chaining techniques are similar to solving an issue one step at a time. Chaining techniques include directing the AI via a systematic

27 examples (with actual prompts) of how product managers are using Perplexity today

Apply these techniques when crafting prompts for large language models to elicit more relevant responses.

Generative AI (GenAI) tools have come a long way. Believe it or not, the first generative AI tools were introduced in the 1960s in the form of a chatbot. Still, it was only in 2014 that generative adversarial networks (GANs) were introduced, a type of Machine Learning (ML) algorithm that allowed generative AI to finally create authentic images, videos, and audio of real people. In 2024, we can create anything imaginable using generative AI tools like ChatGPT, DALL-E, and others. However, there is a problem. We can use those AI tools but cannot get the most out of them or use them

Prompt engineering has burgeoned into a pivotal technique for augmenting the capabilities of large language models (LLMs) and vision-language models (VLMs), utilizing task-specific instructions or prompts to amplify model efficacy without altering core model parameters. These prompts range from natural language instructions that provide context to guide the model to learned vector representations that activate relevant knowledge, fostering success in myriad applications like question-answering and commonsense reasoning. Despite its burgeoning use, a systematic organization and understanding of the diverse prompt engineering methods is still lacking. This survey by researchers from the Indian Institute of Technology Patna, Stanford University,

How do we communicate effectively with LLMs?

Sure, anyone can use OpenAI’s chatbot. But with smart engineering, you can get way more interesting results.

In the rapidly evolving world of artificial intelligence, the ability to communicate effectively with AI tools has become an indispensable skill. Whether you're generating content, solving complex data problems, or creating stunning digital art, the quality of the outcomes you receive is directly…

Unlock the power of GPT-4 summarization with Chain of Density (CoD), a technique that attempts to balance information density for high-quality summaries.

Explore how the Skeleton-of-Thought prompt engineering technique enhances generative AI by reducing latency, offering structured output, and optimizing projects.

Learn how to use GPT / LLMs to create complex summaries such as for medical text

Our first Promptpack for businesses

7 prompting tricks, Langchain, and Python example code

3 levels of using LLMs in practice

In this chapter, you'll learn how to concatenate multiple endpoints in order to generate text. You'll apply this by creating a story.

A practical and simple approach for “reasoning” with LLMs

Understanding one of the most effective techniques for improving prompts in LLM applications.

This article delves into the concept of Chain-of-Thought (CoT) prompting, a technique that enhances the reasoning capabilities of large language models (LLMs). It discusses the principles behind CoT prompting, its application, and its impact on the performance of LLMs.
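To make the idea concrete, here is a minimal sketch of zero-shot Chain-of-Thought prompting: the prompt asks for step-by-step reasoning, and a small parser pulls out the final answer. The `Answer:` convention and both helper names are assumptions chosen for this example, not something taken from the article.

```python
import re


def build_cot_prompt(question):
    """Wrap a question with an instruction to reason step by step."""
    return (
        f"Question: {question}\n"
        "Think through the problem step by step, then give the result on a "
        "final line in the form 'Answer: <value>'."
    )


def extract_answer(completion):
    """Return the text after the last 'Answer:' line, or None if there is none."""
    matches = re.findall(r"^Answer:\s*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# A hand-written completion standing in for a real model response.
sample_completion = (
    "There are 3 boxes with 4 apples each.\n"
    "3 * 4 = 12.\n"
    "Answer: 12"
)
final_answer = extract_answer(sample_completion)
```

Asking for the answer on a marked final line keeps the reasoning visible while still making the result easy to parse programmatically.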

An effective prompt is the first step in benefitting from ChatGPT. That's the challenge — an effective prompt.

In this article, we will demonstrate how to use different prompts to ask ChatGPT for help and make...

Explore how clear syntax can enable you to communicate intent to language models, and also help ensure that outputs are easy to parse

ChatGPT can generate usable content. But it can also analyze existing content — articles, descriptions — and suggest improvements for SEO and social media.

Learn how standard greedy tokenization introduces a subtle and powerful bias that can have all kinds of unintended consequences.

Our weekly selection of must-read Editors’ Picks and original features

Prompt engineering is an emerging skill and one companies are looking to hire for as they employ more AI tools. And yet dedicated prompt engineering roles may be somewhat short-lived as workforces become more proficient in using the tools.

A Comprehensive Overview of Prompt Engineering

Prompt Engineering, also known as In-Context Prompting, refers to methods for communicating with an LLM to steer its behavior toward desired outcomes without updating the model weights. It is an empirical science, and the effect of prompt engineering methods can vary a lot among models, thus requiring heavy experimentation and heuristics. This post only focuses on prompt engineering for autoregressive language models, so nothing with Cloze tests, image generation or multimodality models.

Garbage in, garbage out has never been more true.

This repository offers a comprehensive collection of tutorials and implementations for Prompt Engineering techniques, ranging from fundamental concepts to advanced strategies. It serves as an essen...

A comparison between a report written by a human and one composed by AI reveals the weaknesses of the latter when it comes to journalism.