Why do we use the logistic and softmax functions? Thermal physics may have an answer.

Keep your neural network alive by understanding the downsides of ReLU

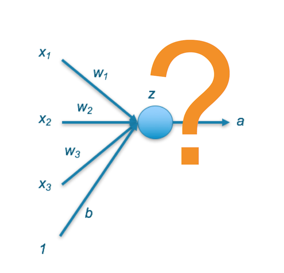

Activation functions take an input signal and convert it to an output signal. Activation functions introduce…

Recently, a colleague of mine asked me a few questions like “why do we have so many activation functions?”, “why is it that one works better…

Activation functions are applied in neural networks, typically after an affine transformation that combines weights and input features. They are usually non-linear. The rectified linear unit, or ReLU, has been the most popular choice over the past decade, although the best choice is architecture-dependent and many alternatives have emerged in recent years. In this section, you will find a continually updated list of activation functions.
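
To make the "affine transformation, then activation" pattern concrete, here is a minimal sketch in plain Python. The helper names (`affine`, `relu`, `logistic`) and the toy weights, bias, and features are assumptions chosen for illustration; they do not come from any particular library or from the articles listed here.

```python
import math

def affine(weights, bias, features):
    """Compute W x + b, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, bias)]

def relu(z):
    """Rectified linear unit: negative values become zero."""
    return [max(0.0, v) for v in z]

def logistic(z):
    """Logistic (sigmoid) function: squashes each value into (0, 1)."""
    return [1.0 / (1.0 + math.exp(-v)) for v in z]

# Hypothetical toy layer: 2 input features, 2 output units.
W = [[1.0, -2.0],
     [0.5, 0.5]]
b = [0.0, -1.0]
x = [1.0, 2.0]

z = affine(W, b, x)          # pre-activations: [-3.0, 0.5]
print(relu(z))               # [0.0, 0.5]
print(logistic(z))           # two values strictly between 0 and 1
```

ReLU zeroes out the negative pre-activation and passes the positive one through unchanged, while the logistic function maps both into the open interval (0, 1). Either one makes the layer non-linear, which the affine step alone cannot do.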

Week Two - 100 Days of Code Challenge

The purpose of this post is to provide guidance on which combination of final-layer activation function and loss function should be used in…
