bandits

Applying reinforcement learning strategies to real-world use cases, especially dynamic pricing, can reveal many surprises.

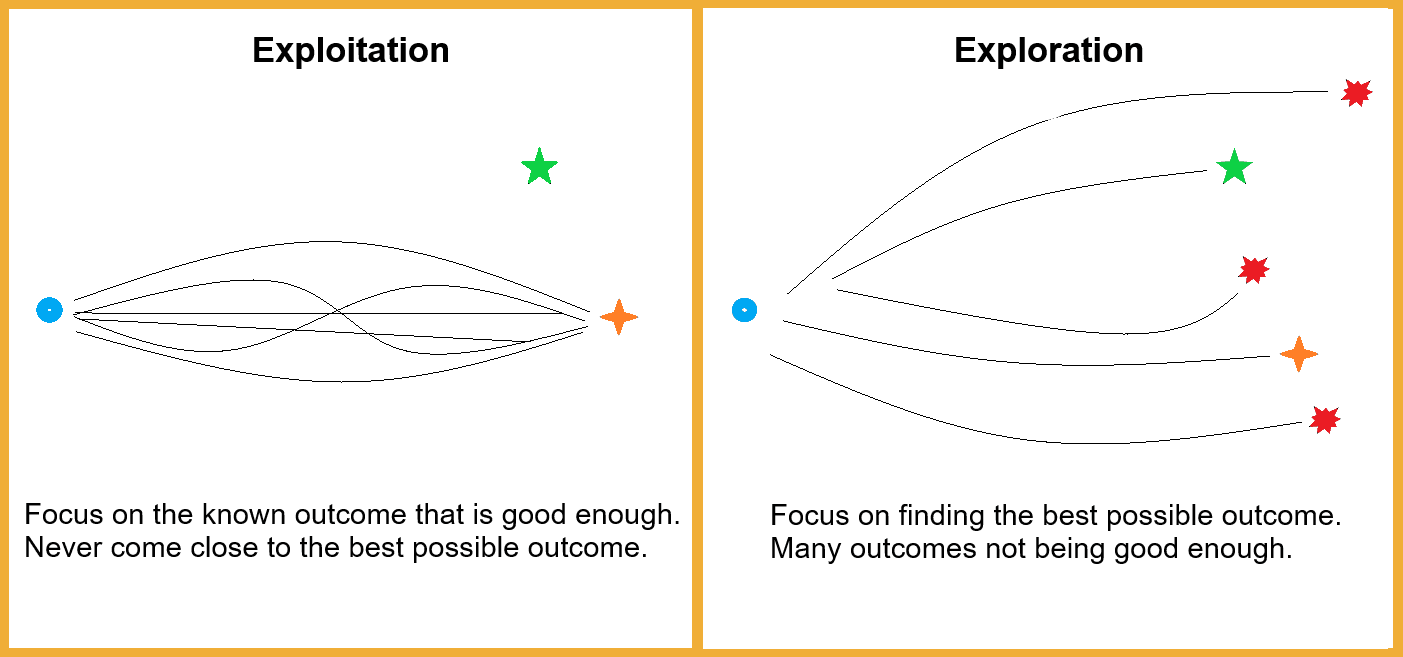

Delve deeper into the concepts of multi-armed bandits, reinforcement learning, and the exploration vs. exploitation dilemma.
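To make the exploration vs. exploitation trade-off concrete, here is a minimal epsilon-greedy sketch on simulated Bernoulli arms (for example, click/no-click). The function name `epsilon_greedy`, the arm success rates, and `epsilon=0.1` are hypothetical choices for illustration, not taken from any of the linked posts. With probability `epsilon` the agent explores a random arm; otherwise it exploits the arm with the best running-mean reward.

```python
import random


def epsilon_greedy(true_probs, epsilon=0.1, rounds=10_000, seed=0):
    """Simulate epsilon-greedy on Bernoulli arms with hypothetical success rates."""
    rng = random.Random(seed)
    n_arms = len(true_probs)
    counts = [0] * n_arms    # number of pulls per arm
    values = [0.0] * n_arms  # running mean reward per arm
    total_reward = 0
    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick an arm uniformly at random
        else:
            arm = max(range(n_arms), key=values.__getitem__)  # exploit: best estimate so far
        reward = 1 if rng.random() < true_probs[arm] else 0  # simulated Bernoulli reward
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean update
        total_reward += reward
    return counts, values, total_reward


# Usage with three hypothetical arms (e.g. conversion rates of three variants).
counts, values, total = epsilon_greedy([0.05, 0.10, 0.20])
print(counts, [round(v, 3) for v in values], total)
```

A fixed `epsilon` keeps exploring forever, which is simple but wasteful once the best arm is clear; decaying `epsilon` over time is a common variant.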


Making the best recommendations to anonymous audiences

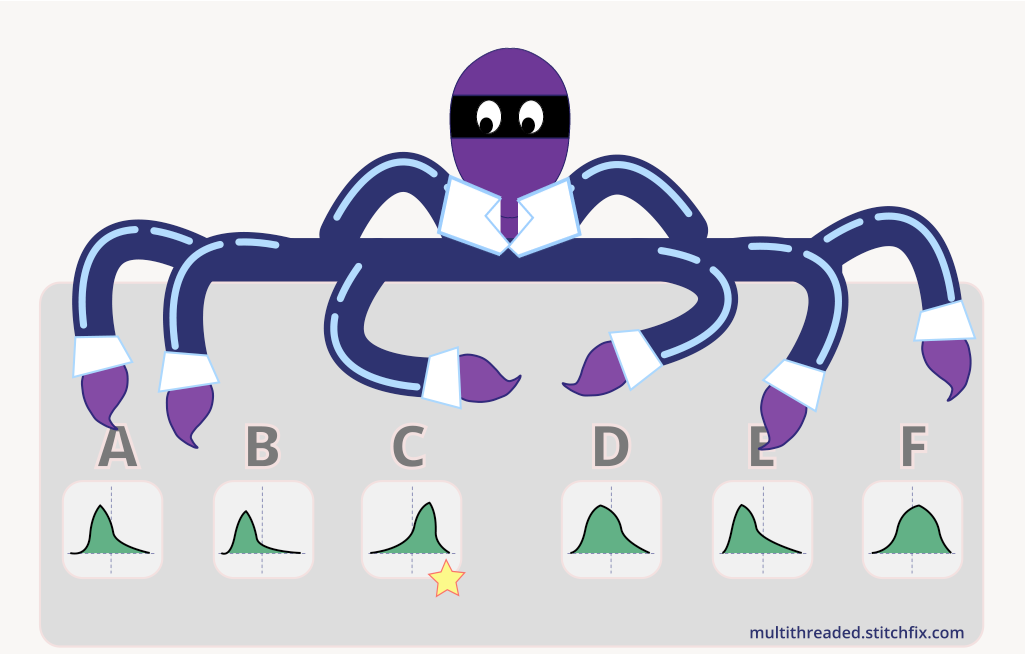

Library for multi-armed bandit selection strategies, including efficient deterministic implementations of Thompson sampling and epsilon-greedy. - stitchfix/mab
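For reference, the textbook Beta-Bernoulli form of Thompson sampling can be sketched in a few lines of standard-library Python. This is an assumption-laden illustration, not the `stitchfix/mab` API and not its deterministic variant: this version is the ordinary randomized algorithm, and the function name `thompson_sampling` and the arm rates are hypothetical. Each round it draws one sample from every arm's Beta posterior (uniform `Beta(1, 1)` prior) and plays the arm with the largest draw.

```python
import random


def thompson_sampling(true_probs, rounds=10_000, seed=0):
    """Randomized Beta-Bernoulli Thompson sampling on simulated arms."""
    rng = random.Random(seed)
    n_arms = len(true_probs)
    successes = [0] * n_arms
    failures = [0] * n_arms
    for _ in range(rounds):
        # Sample a plausible success rate for each arm from its Beta posterior.
        samples = [
            rng.betavariate(1 + successes[a], 1 + failures[a]) for a in range(n_arms)
        ]
        arm = max(range(n_arms), key=samples.__getitem__)  # play the best sampled arm
        if rng.random() < true_probs[arm]:  # simulated Bernoulli reward
            successes[arm] += 1
        else:
            failures[arm] += 1
    pulls = [s + f for s, f in zip(successes, failures)]
    return pulls, successes


pulls, successes = thompson_sampling([0.05, 0.10, 0.20])
print(pulls, successes)
```

Exploration here comes from posterior uncertainty rather than a fixed rate: arms with few observations have wide posteriors and occasionally win the draw, while a clearly inferior arm is sampled less and less.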

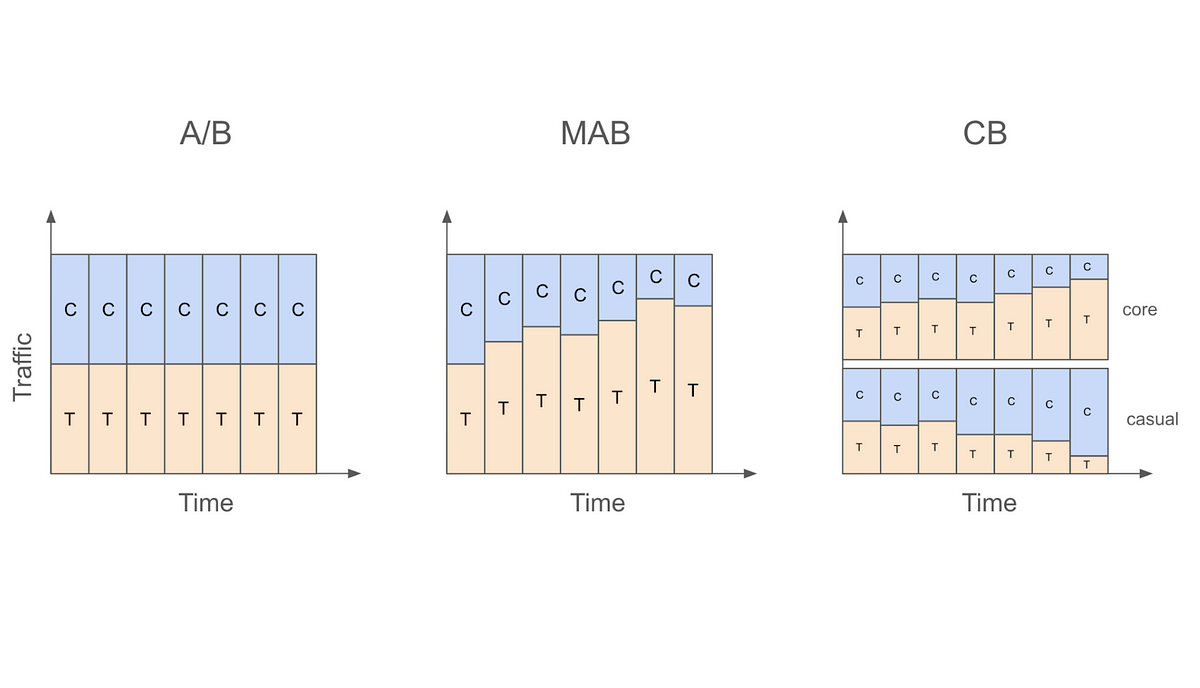

We've recently built support for multi-armed bandits into the Stitch Fix experimentation platform. This post will explain how and why.

Introducing the multi-armed bandit (MAB) optimization used for hero images on hotels.com

Multi-armed bandits are a simple but very powerful framework for algorithms that make decisions over time under uncertainty. “Introduction to Multi-Armed Bandits” by Alex Slivkins provides an accessible, textbook-like treatment of the subject.
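One classic algorithm in this framework, not named in the description above, is UCB1, which picks the arm with the highest optimistic index: empirical mean plus a confidence bonus $\sqrt{2 \ln t / n_a}$. The sketch below is a minimal illustration under hypothetical arm rates; it also reports pseudo-regret, the expected reward lost relative to always playing the best arm, which is the usual yardstick for comparing bandit algorithms.

```python
import math
import random


def ucb1(true_probs, rounds=10_000, seed=0):
    """UCB1 on simulated Bernoulli arms; returns pulls, estimates, pseudo-regret."""
    rng = random.Random(seed)
    n_arms = len(true_probs)
    counts = [0] * n_arms
    means = [0.0] * n_arms
    for t in range(1, rounds + 1):
        if t <= n_arms:
            arm = t - 1  # pull each arm once so every count is non-zero
        else:
            # Optimism in the face of uncertainty: mean + sqrt(2 ln t / n_a).
            arm = max(
                range(n_arms),
                key=lambda a: means[a] + math.sqrt(2 * math.log(t) / counts[a]),
            )
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]  # incremental mean
    best = max(true_probs)
    # Pseudo-regret: sum over arms of pulls * gap to the best arm's true rate.
    regret = sum(counts[a] * (best - true_probs[a]) for a in range(n_arms))
    return counts, means, regret


counts, means, regret = ucb1([0.05, 0.10, 0.20])
print(counts, [round(m, 3) for m in means], round(regret, 1))
```

The bonus shrinks as an arm is pulled more and grows slowly with time, so every arm keeps being revisited occasionally, but suboptimal arms are pulled only a logarithmic number of times in expectation.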

There are multiple ways of doing the same thing in Pandas, which can be confusing for beginners. This post covers the most common data manipulation cases in Python in a straightforward, matter-of-fact way.