BERT is an open-source machine learning framework for natural language processing (NLP) that helps computers understand ambiguous language by using context.

A simple, quick solution for deploying an NLP project, and the challenges you may face during the process.

From predicting a single sentence, to fine-tuning on a custom dataset, to finding the best hyperparameter configuration.

Determining the optimal architectural parameters reduces network size by 84% while improving performance on natural-language-understanding tasks.

AI researchers from the Ludwig Maximilian University (LMU) of Munich have developed a bite-sized text generator capable of besting OpenAI’s state-of-the-art GPT-3 using only a tiny fraction of its parameters. GPT-3 is a monster of an AI sys

What does Microsoft getting an "exclusive license" to GPT-3 mean for the future of AI democratization?

Google published an article “Understanding searches better than ever before” and positioned BERT as one of the most important updates to…

TensorFlow code and pre-trained models for BERT.

2021 Update: I created this brief and highly accessible video intro to BERT. The year 2018 has been an inflection point for machine learning models handling text (or more accurately, Natural Language Processing, or NLP for short). Our conceptual understanding of how best to represent words and sentences in a way that captures underlying meanings and relationships is rapidly evolving. Moreover, the NLP community has been putting forward incredibly powerful components that you can freely download and use in your own models and pipelines. It has been referred to as NLP’s ImageNet moment, referencing how, years ago, similar developments accelerated the development of machine learning in Computer Vision tasks.

Visualizing machine learning one concept at a time.

Pre-training SmallBERTa: a tiny model to train on a tiny dataset. An end-to-end Colab notebook that allows you to train your own LM (using HuggingFace…

In this tutorial, we learn to quickly train Hugging Face BERT using PyTorch Lightning for transfer learning on any NLP task.

Unless you have been out of touch with the deep learning world, chances are that you have heard about BERT; it has been the talk of the town for the past year. At the end of 2018 researchers …

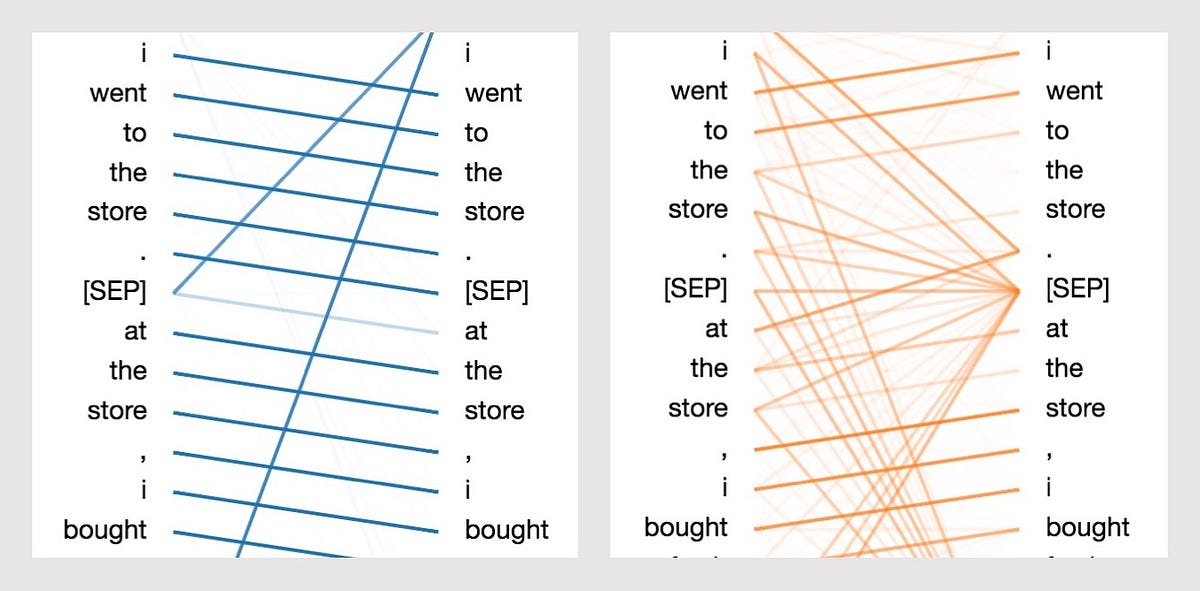

From BERT’s tangled web of attention, some intuitive patterns emerge.