boosting

CatBoost - state-of-the-art open-source gradient boosting library with categorical features support

Understanding the logic behind AdaBoost and implementing it using Python
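As a rough illustration of the AdaBoost logic this entry refers to, here is a minimal sketch using only the Python standard library. It is not taken from the linked article: the helper names (`fit_stump`, `adaboost`, `predict`), the one-dimensional inputs, and the use of threshold stumps as weak learners are all assumptions made for the example.

```python
import math

def fit_stump(X, y, w):
    """Return (weighted_error, threshold, sign) of the best 1-D threshold stump.

    A stump predicts `sign` when x <= threshold and `-sign` otherwise.
    """
    best = None
    for thr in sorted(set(X)):
        for sign in (1, -1):
            preds = [sign if x <= thr else -sign for x in X]
            err = sum(wi for wi, p, yi in zip(w, preds, y) if p != yi)
            if best is None or err < best[0]:
                best = (err, thr, sign)
    return best

def adaboost(X, y, rounds=3):
    """Train an ensemble of stumps on labels in {-1, +1} (illustrative only)."""
    n = len(X)
    w = [1.0 / n] * n                      # start with uniform sample weights
    ensemble = []
    for _ in range(rounds):
        err, thr, sign = fit_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)      # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)    # vote weight of this stump
        ensemble.append((alpha, thr, sign))
        # Increase the weight of misclassified samples, decrease the rest.
        w = [wi * math.exp(-alpha * yi * (sign if x <= thr else -sign))
             for wi, x, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]               # renormalize to sum to 1
    return ensemble

def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * (s if x <= t else -s) for a, t, s in ensemble)
    return 1 if score >= 0 else -1

if __name__ == "__main__":
    X = [1, 2, 3, 4, 5, 6]
    y = [1, 1, -1, -1, 1, 1]   # not separable by any single stump
    model = adaboost(X, y, rounds=3)
    print([predict(model, x) for x in X])
```

No single stump can separate this toy data, so the example shows how reweighting lets later stumps focus on the points earlier ones got wrong.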

This tutorial explores the LightGBM library in Python to build a classification model using the LGBMClassifier class.

The most important LightGBM parameters, what they do, and how to tune them

Avoid post-processing the SHAP values of categorical features

There are many great boosting Python libraries for data scientists to reap the benefits of. In this article, the author discusses LightGBM benefits and how they are specific to your data science job.

Combining tree-boosting with Gaussian process and mixed effects models - fabsig/GPBoost

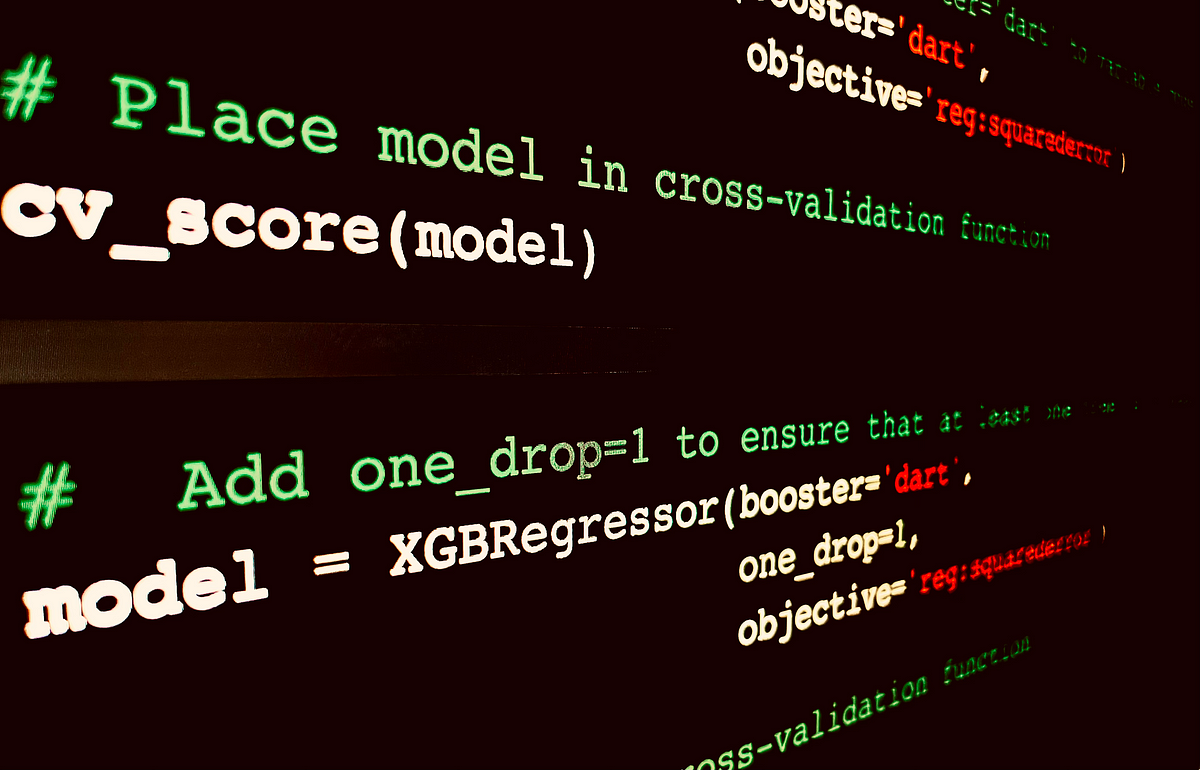

XGBoost, the gradient boosting method, and hyperparameter tuning, explained by building your own gradient boosting library for decision trees.

Utilize the hottest ML library for state-of-the-art performance in classification

This example shows how quantile regression can be used to create prediction intervals. See Features in Histogram Gradient Boosting Trees for an example showcasing some other features of HistGradien...
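The idea behind quantile-based prediction intervals can be sketched without any library. The snippet below is not the linked example and does not use its estimator; it only shows, under simplifying assumptions, the pinball (quantile) loss that quantile regression minimizes. The helper names `pinball_loss` and `best_constant` are hypothetical, and the "model" is just a constant prediction chosen by brute force.

```python
def pinball_loss(y_true, y_pred, q):
    """Mean pinball loss for quantile level q (0 < q < 1)."""
    total = 0.0
    for yt, yp in zip(y_true, y_pred):
        diff = yt - yp
        # Under-prediction is weighted by q, over-prediction by (1 - q).
        total += q * diff if diff >= 0 else (q - 1) * diff
    return total / len(y_true)

def best_constant(y, q):
    """Observed value that minimizes the pinball loss as a constant prediction."""
    return min(sorted(set(y)),
               key=lambda c: pinball_loss(y, [c] * len(y), q))

if __name__ == "__main__":
    y = list(range(21))            # toy targets 0, 1, ..., 20
    lower = best_constant(y, 0.1)  # estimate of the 10% quantile
    upper = best_constant(y, 0.9)  # estimate of the 90% quantile
    covered = sum(lower <= v <= upper for v in y) / len(y)
    print(lower, upper, covered)
```

Fitting one model at a low quantile and another at a high quantile gives the two ends of an interval. A gradient boosting regressor trained with a quantile loss does the same thing, but with predictions that depend on the features rather than a single constant.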

GPU vs CPU training speed comparison for xgboost

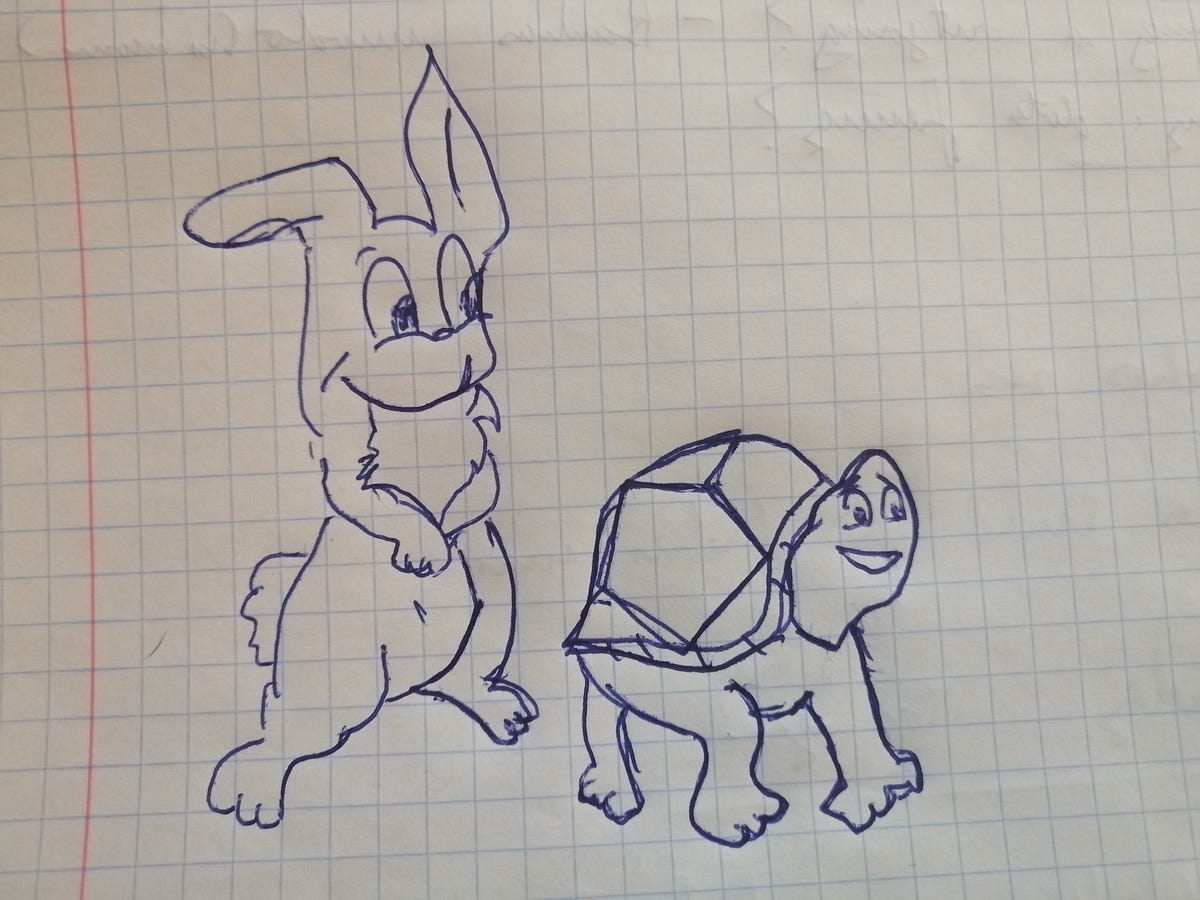

A detailed look at differences between the two algorithms and when you should choose one over the other

An end-to-end tutorial on how to apply an emerging data science algorithm

Learn how AdaBoost works from a mathematical perspective, in a comprehensive and straight-to-the-point manner.

Natural Gradient Boosting for Probabilistic Prediction - stanfordmlgroup/ngboost

A step-by-step guide to implementing one of the most popular machine learning algorithms using NumPy

In this paper, a feature boosting network is proposed for estimating 3D hand pose and 3D body pose from a single RGB image. In this method, the features learned by the convolutional layers are boosted with a new long short-term dependence-aware (LSTD) module, which enables the intermediate convolutional feature maps to perceive the graphical long short-term dependency among different hand (or body) parts using the designed Graphical ConvLSTM. Learning a set of features that are reliable and discriminatively representative of the pose of a hand (or body) part is difficult due to the ambiguities, texture and illumination variation, and self-occlusion in the real application of 3D pose estimation. To improve the reliability of the features for representing each body part and enhance the LSTD module, we further introduce a context consistency gate (CCG) in this paper, with which the convolutional feature maps are modulated according to their consistency with the context representations. We evaluate the proposed method on challenging benchmark datasets for 3D hand pose estimation and 3D full body pose estimation. Experimental results show the effectiveness of our method that achieves state-of-the-art performance on both of the tasks.

A curated list of gradient boosting research papers with implementations. - benedekrozemberczki/awesome-gradient-boosting-papers

Who is going to win this war of predictions, and at what cost? Let’s explore.