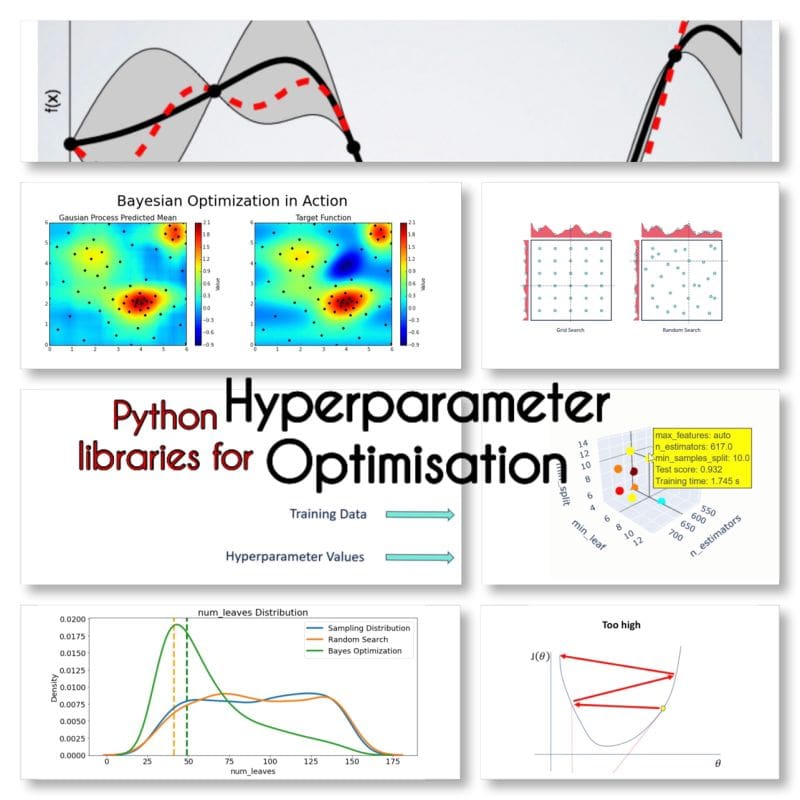

In machine learning, finding the settings that let a model perform at its best can be like looking for a needle in a haystack. This process, known as hyperparameter optimization, involves tuning the settings that govern how the model learns, such as the learning rate or batch size. It is crucial because the right combination can significantly improve a model's accuracy and efficiency. However, the process can be time-consuming and complex, requiring extensive trial and error. Traditionally, researchers and developers have relied on manual tuning or on grid search and random search to find the best hyperparameters. These methods do work to some extent but could be