info-theory

Important concepts in information theory, machine learning, and statistics

A primer on the math, logic, and pragmatic application of Jensen-Shannon (JS) divergence, including how it is best used in drift monitoring
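As a rough sketch of the quantity itself (not the primer's own code), the JS divergence between discrete distributions P and Q is the average KL divergence of each to their mixture M = (P + Q)/2. With base-2 logarithms it is symmetric and bounded between 0 and 1, and it stays finite even when a bin is empty in one distribution, because M is positive wherever P or Q is. The function names and the binned example numbers are hypothetical.

```python
import math

def kl_divergence(p, q, base=2.0):
    """KL(P || Q) for discrete distributions given as equal-length lists.
    Terms with p_i == 0 contribute 0 by convention."""
    return sum(pi * math.log(pi / qi, base) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q, base=2.0):
    """Jensen-Shannon divergence: mean KL divergence of P and Q to their mixture M."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m, base) + 0.5 * kl_divergence(q, m, base)

# Hypothetical example: one feature binned into four buckets,
# compared between a training-time baseline and a production window.
baseline = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]
print(round(js_divergence(baseline, current), 4))
```

For drift monitoring, one common approach is to bin a feature identically for both windows and flag drift when the score exceeds a threshold; the threshold is a tuning choice this sketch does not prescribe.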

This is the first in a series of articles about information theory and its relationship to data-driven enterprises and strategy. While…

Using Mutual Information to measure the likelihood of candidate links in a graph.
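The linked article's graph-specific scoring is not described here, so the sketch below shows only the underlying quantity: the mutual information I(X;Y) computed from a joint probability table, in bits. How X and Y would be derived from a pair of candidate nodes is left open; the function name is hypothetical.

```python
import math

def mutual_information(joint, base=2.0):
    """I(X;Y) from a joint probability table, where joint[i][j] = P(X=i, Y=j)."""
    px = [sum(row) for row in joint]          # marginal P(X=i)
    py = [sum(col) for col in zip(*joint)]    # marginal P(Y=j)
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:  # zero-probability cells contribute nothing
                mi += pxy * math.log(pxy / (px[i] * py[j]), base)
    return mi

# Independent variables share no information; perfectly coupled binary
# variables share one full bit.
print(mutual_information([[0.25, 0.25], [0.25, 0.25]]))
print(mutual_information([[0.5, 0.0], [0.0, 0.5]]))
```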

Entropy, cross-entropy, log loss, and KL divergence

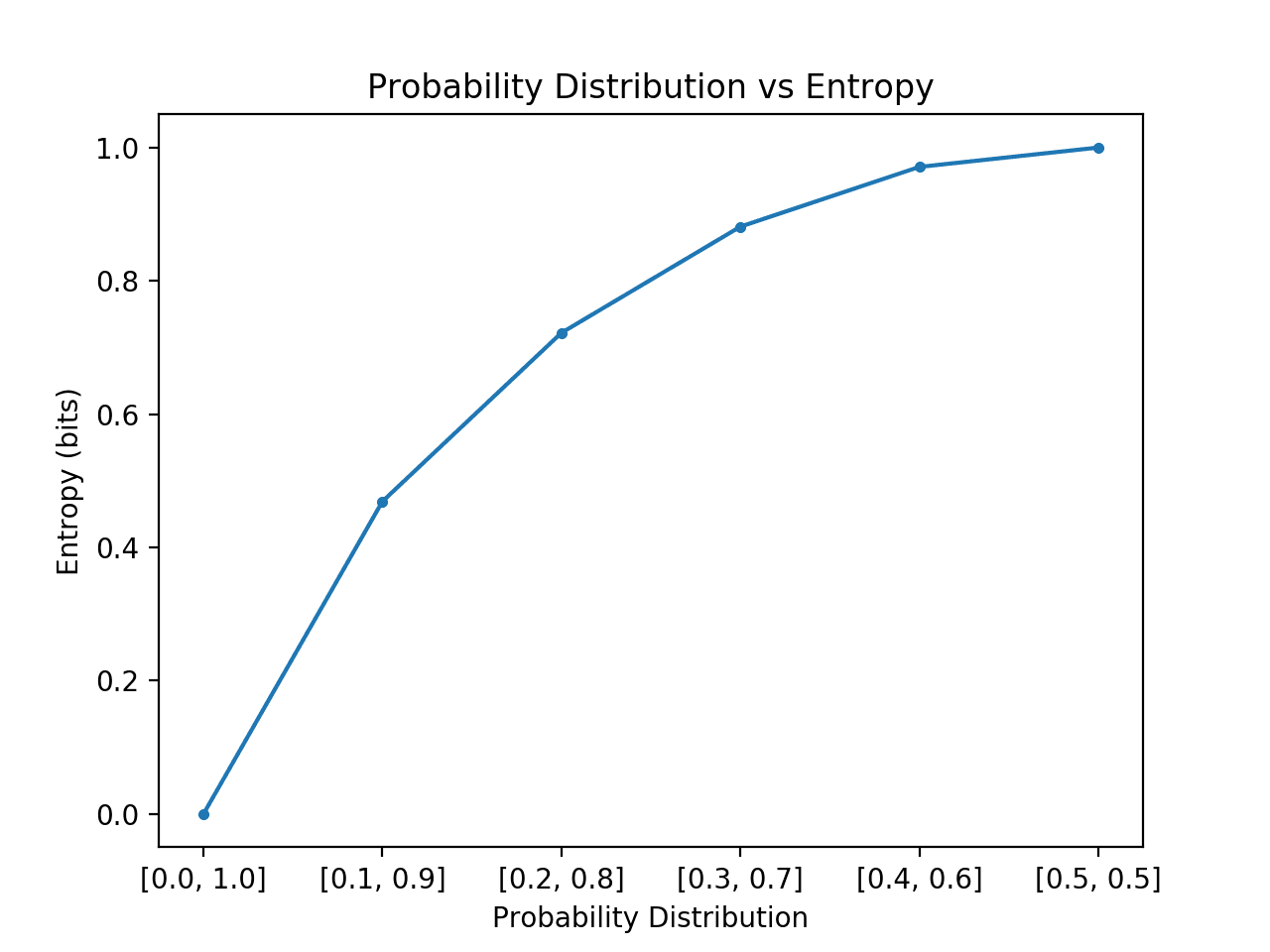

Information theory is a subfield of mathematics concerned with transmitting data across a noisy channel. A cornerstone of information theory is the idea of quantifying how much information there is in a message. More generally, this can be used to quantify the information in an event and in a random variable (the latter is called entropy); both are calculated using probability. Calculating information and…
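A minimal sketch of how the four quantities in this title relate, assuming discrete distributions given as plain lists: the information of an event is -log p, entropy is its expectation, cross-entropy H(P, Q) equals H(P) + KL(P || Q), and binary log loss is the average cross-entropy between one-hot labels and predicted probabilities. The function names are illustrative, and log_loss assumes predicted probabilities strictly between 0 and 1.

```python
import math

def information(p_event, base=2.0):
    """Self-information (surprisal) of an event with probability p_event: -log p."""
    return -math.log(p_event, base)

def entropy(p, base=2.0):
    """H(P): expected information over a random variable's outcomes."""
    return sum(pi * information(pi, base) for pi in p if pi > 0)

def cross_entropy(p, q, base=2.0):
    """H(P, Q): expected information when outcomes follow P but are scored with Q."""
    return -sum(pi * math.log(qi, base) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q, base=2.0):
    """KL(P || Q), written via the identity H(P, Q) = H(P) + KL(P || Q)."""
    return cross_entropy(p, q, base) - entropy(p, base)

def log_loss(y_true, y_prob):
    """Binary log loss: mean natural-log cross-entropy between 0/1 labels
    (as one-hot distributions) and predicted probabilities of class 1."""
    total = sum(cross_entropy([1 - y, y], [1 - p, p], math.e)
                for y, p in zip(y_true, y_prob))
    return total / len(y_true)

print(information(0.5))                          # a fair coin flip
print(entropy([0.5, 0.5]))                       # entropy of that coin
print(kl_divergence([0.5, 0.5], [0.25, 0.75]))   # cost of assuming a biased coin
print(log_loss([1, 0, 1], [0.9, 0.2, 0.6]))
```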

Lambdaclass's blog about distributed systems, machine learning, compilers, operating systems, security and cryptography.

In economics, the Gini coefficient, also known as the Gini index or Gini ratio, is a measure of statistical dispersion intended to represent income inequality, wealth inequality, or consumption inequality within a nation or a social group. It was developed by Italian statistician and sociologist Corrado Gini.
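One standard way to compute the coefficient is through the mean absolute difference: sum |x_i - x_j| over all ordered pairs and divide by 2 n² times the mean. The sketch below assumes non-negative values with a positive mean and uses a simple O(n²) loop for clarity; the function name is hypothetical.

```python
def gini(values):
    """Gini coefficient via mean absolute difference:
    G = sum_i sum_j |x_i - x_j| / (2 * n^2 * mean).
    Assumes non-negative values with a positive mean."""
    n = len(values)
    mean = sum(values) / n
    total_abs_diff = sum(abs(xi - xj) for xi in values for xj in values)
    return total_abs_diff / (2 * n * n * mean)

print(gini([1, 1, 1, 1]))  # perfect equality
print(gini([0, 0, 0, 1]))  # one member holds everything
```

For a finite population of n members this form tops out at (n - 1)/n rather than exactly 1, so the second example scores 0.75.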