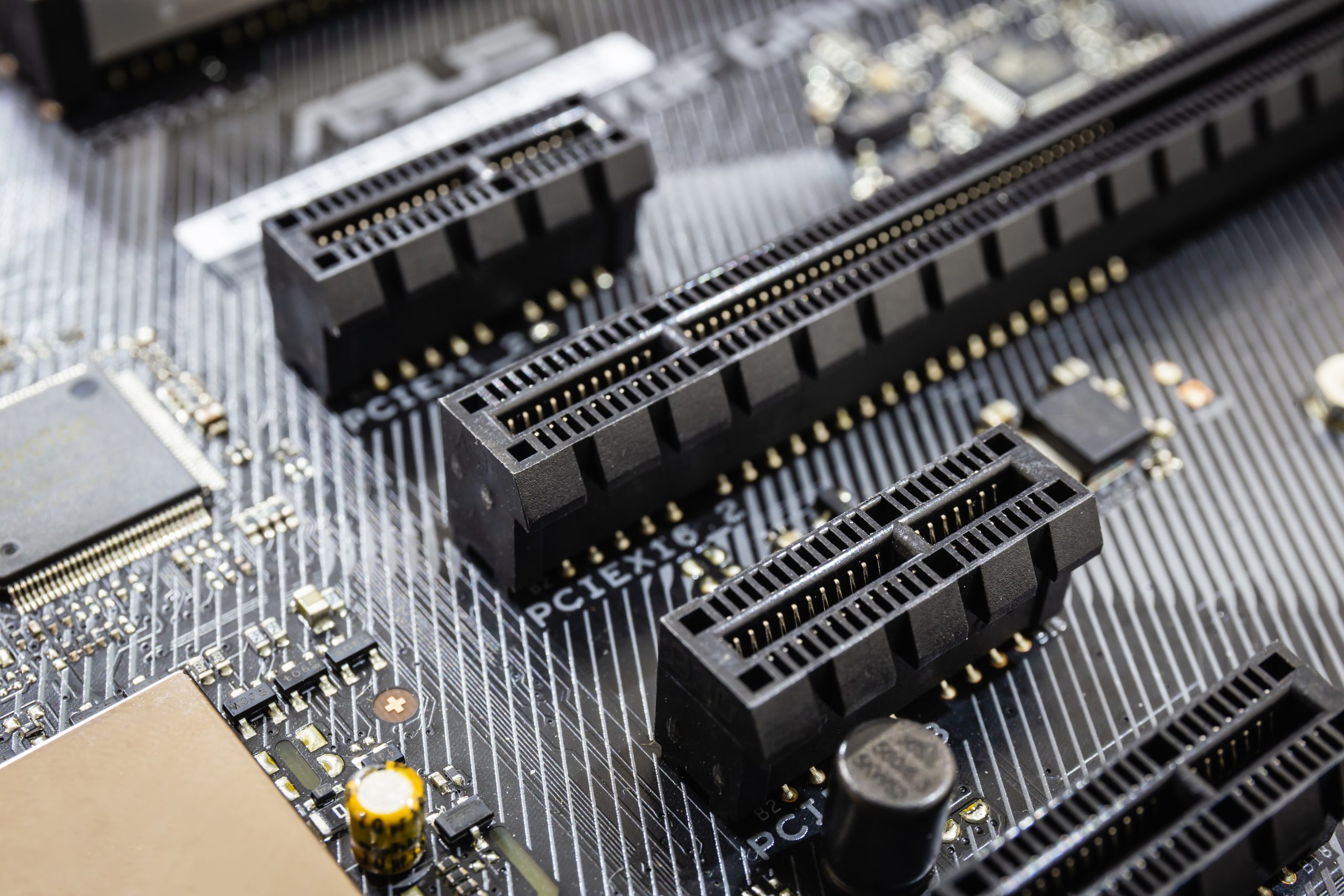

CXL 3.1 and PCIe 6.2 are transforming AI with improved data transfer and memory pooling.

Many UCIe 2.0 features perceived as “heavy” are actually optional, which has caused confusion.

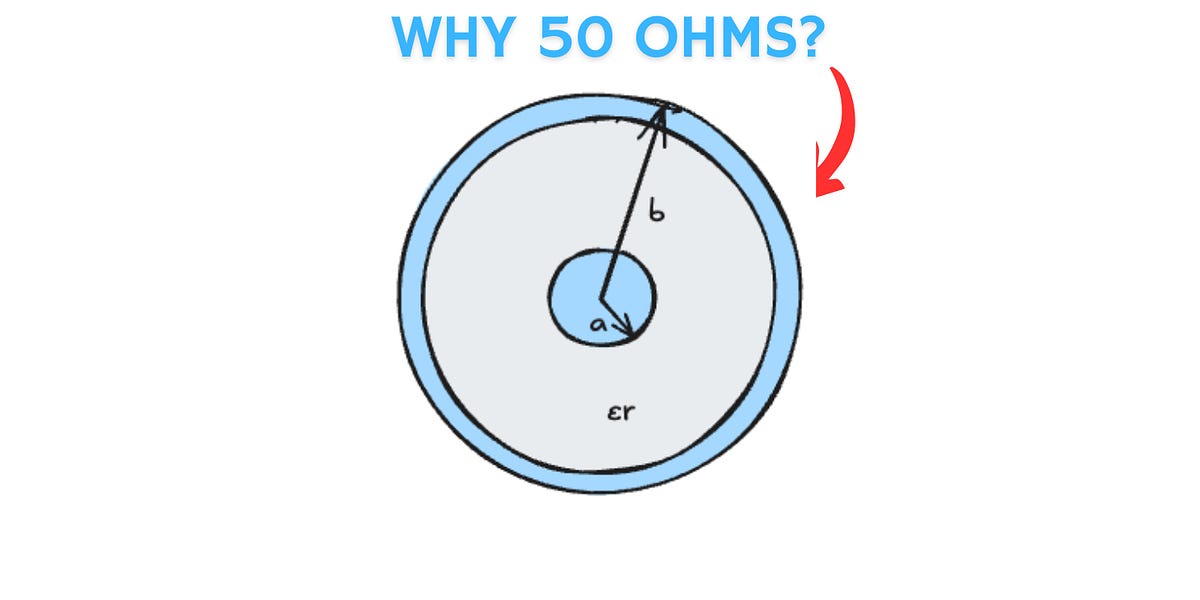

The history, physics, and practical trade-offs behind a number every RF engineer knows.

Nvidia's endorsement of co-packaged optics means the time is right

Understanding and minimizing discontinuities is increasingly important as serial links become both shorter and faster. In this article, Donald Telian applies the classic relationship between edge rate and round-trip delay to the modern era and offers a rule of thumb for gauging which interconnect structures, and hence which discontinuities, deserve attention, and to what degree.

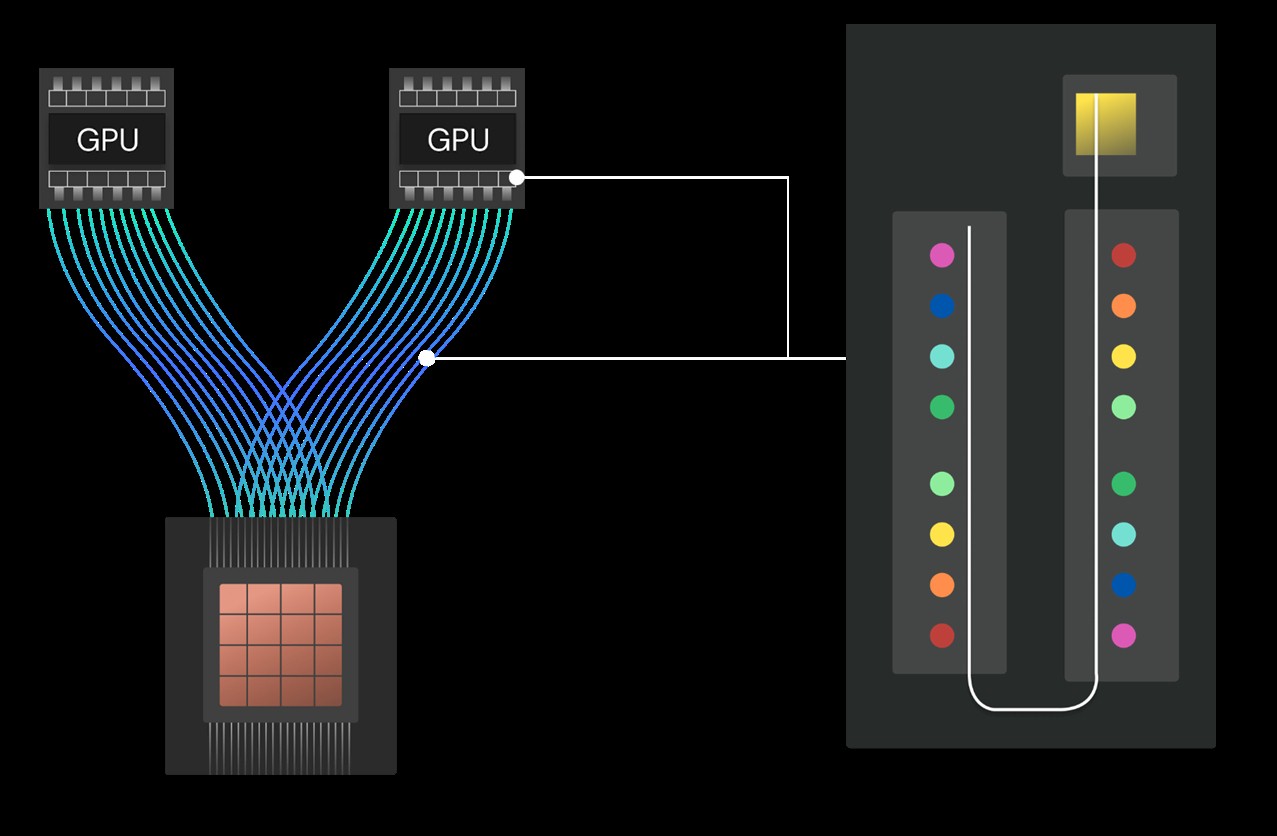

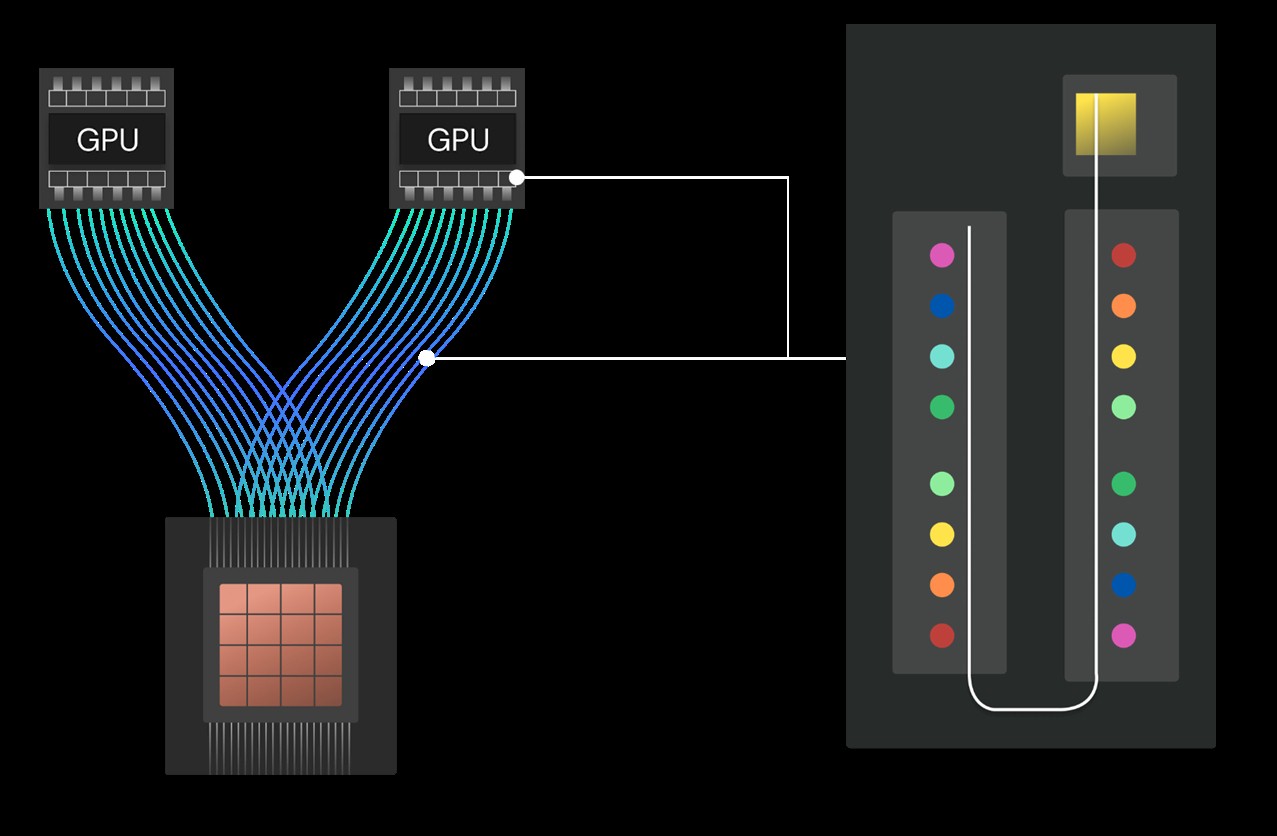

According to rumors, Nvidia is not expected to deliver optical interconnects for its GPU memory-lashing NVLink protocol until the “Rubin Ultra” GPU

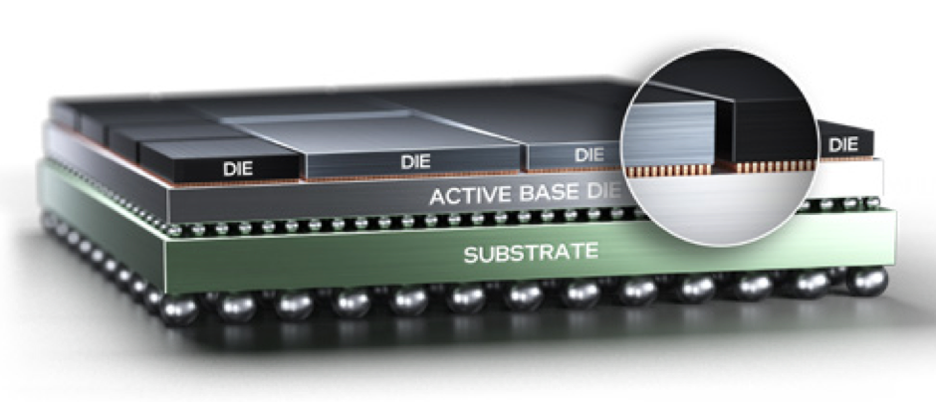

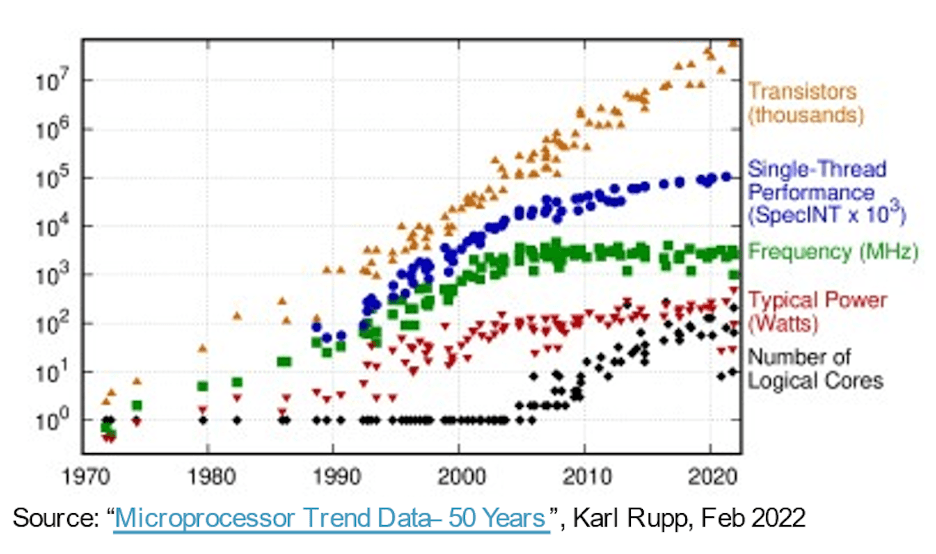

Foundry competition heats up in three dimensions and with novel technologies as planar scaling benefits diminish.

In this guide, we explain the pivotal role of Fan-Out Panel-Level Packaging (FO-PLP) in advancing the semiconductor sector. By combining cost-effectiveness with enhanced functionality, FO-PLP opens a new era of electronic sophistication. Let’s dig into FO-PLP and explore how it is shaping the future.

While a lot of people focus on the floating point and integer processing architectures of various kinds of compute engines, we are spending more and more

The Ethernet roadmap has had a few bumps and potholes in the four and a half decades since the 10M generation was first published in 1980. Remember the

Intel said it has made a significant breakthrough in the development of glass substrates for next-generation advanced packaging in an attempt to keep pace with Moore’s Law. The big chip maker said this milestone […]

Panmnesia has devised CXL-based vector search methods that are much faster than Microsoft’s Bing and Outlook.

Google's new machines combine Nvidia H100 GPUs with Google’s high-speed interconnections for AI tasks like training very large language models.

Samsung Electronics has stepped up its deployment in the fan-out (FO) wafer-level packaging segment with plans to set up related production lines in Japan, according to industry sources.

In the march to more capable, faster, smaller, and lower…

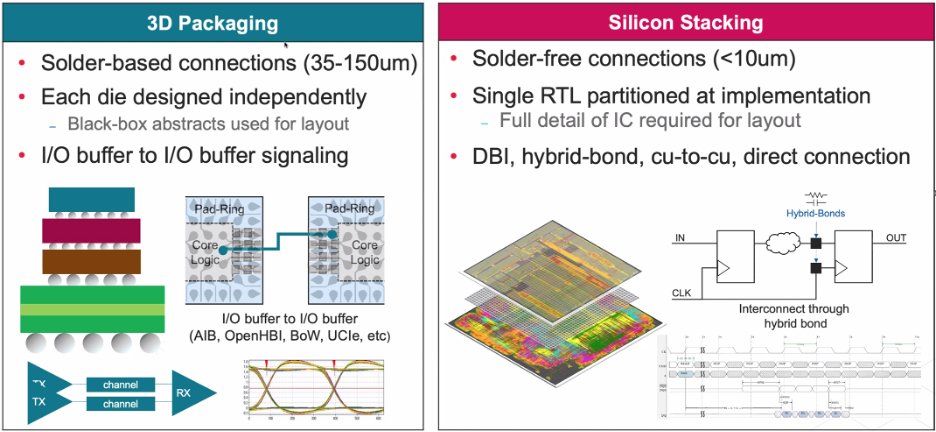

While the terms are often used interchangeably, they are very different technologies with different challenges.

Historically, Intel put all its cumulative chip knowledge to work advancing Moore's Law and applying those learnings to its future CPUs. Today, some of

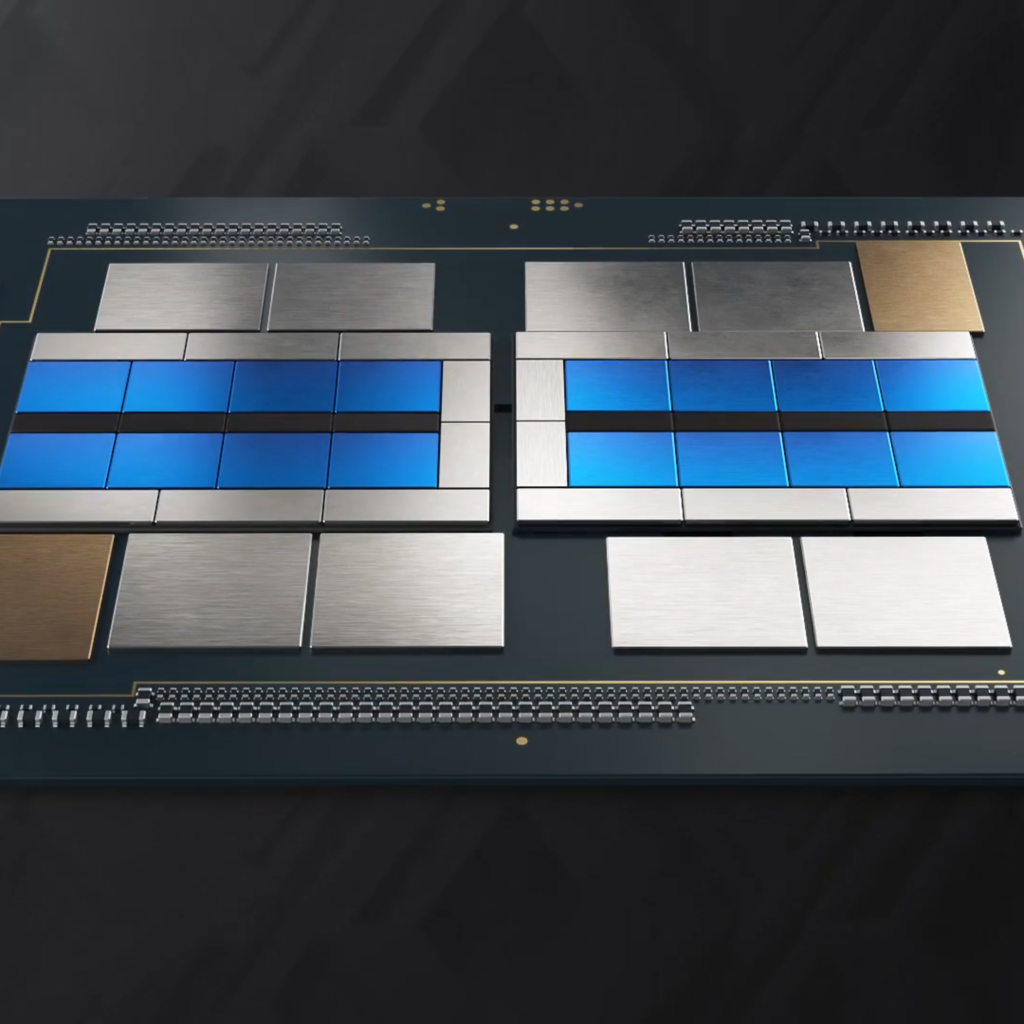

Intel's Embedded Multi-die Interconnect Bridge (EMIB) is an approach to high-density, in-package interconnect between heterogeneous chips.

Powered by the promises of the CHIPS Act, Intel is investing more than $100 billion to increase domestic chip manufacturing capacity and capabilities.

If a few cores are good, then a lot of cores ought to be better. But when it comes to HPC this isn’t always the case, despite what the Top500 ranking –

Interconnects—those sometimes nanometers-wide metal wires that link transistors into circuits on an IC—are in need of a major overhaul. And as chip fabs march toward the outer reaches of Moore’s Law, interconnects are also becoming the industry’s choke point.

One of my nice friends at Hurricane Electric gave me a dead 100G-LR4 optic to tear apart, so for your entertainment, let's dig into it! 🧵

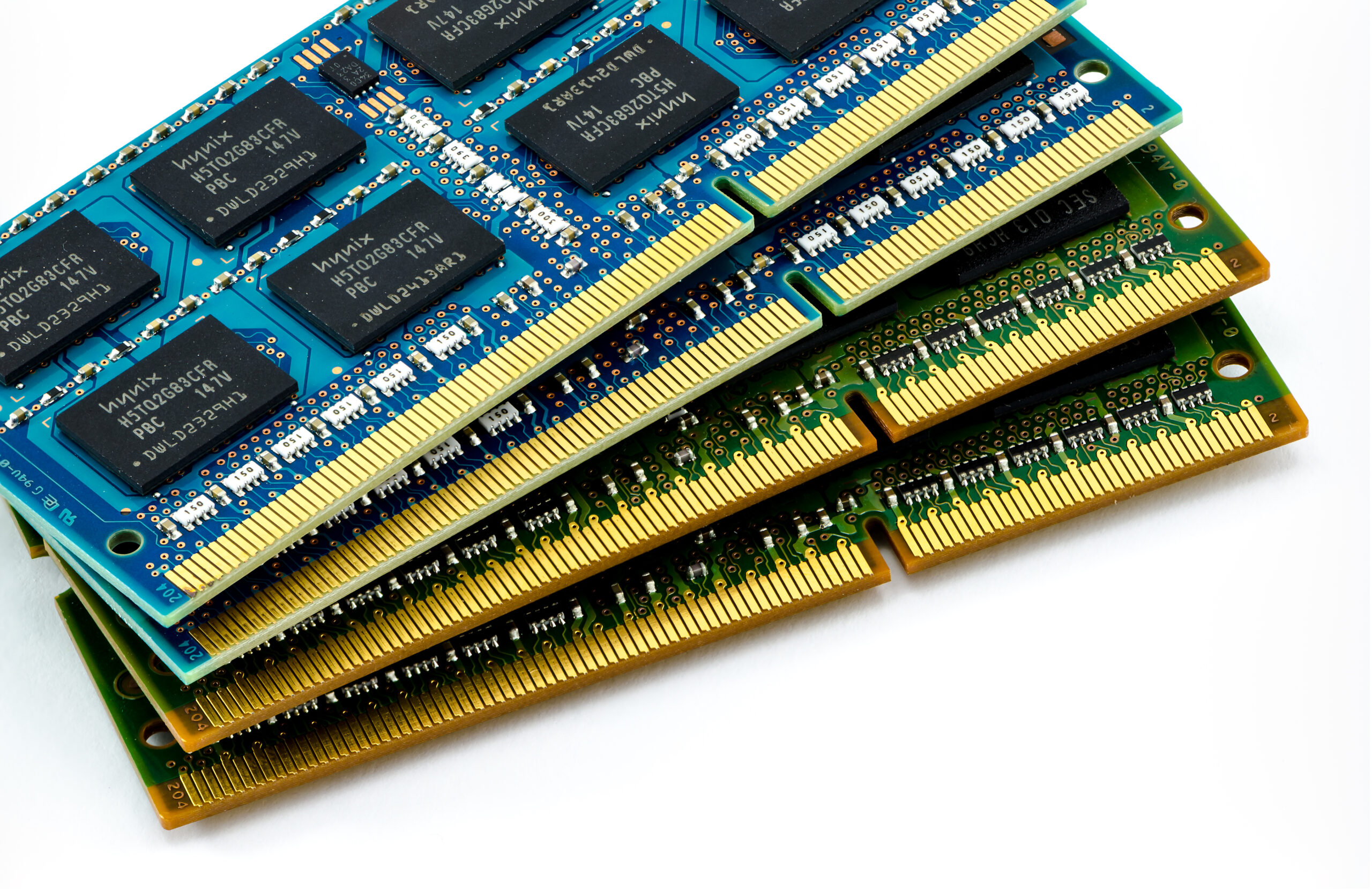

Conventional wisdom says that trying to attach system memory to the PCI-Express bus is a bad idea if you care at all about latency. The further the memory

The world's largest chip scales to new heights.

Let's learn more about the world's most important manufactured product. Meaningful insight, timely analysis, and an occasional investment idea.

PCI-SIG has drafted the PCIe 7.0 spec and aims to finalize it in 2025.

Star Trek's glowing circuit boards may not be so crazy

Nvidia has staked its growth in the datacenter on machine learning. Over the past few years, the company has rolled out features in its GPUs aimed at neural

One of the breakthrough moments in computing, which was compelled by necessity, was the advent of symmetric multiprocessor, or SMP, clustering to make two

An effective interconnect makes delivering a complex SoC easier, more predictable, and less costly.

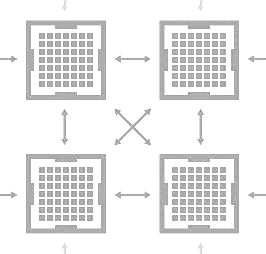

Today, many servers contain eight or more GPUs. In principle, then, scaling an application from one GPU to many should provide a tremendous performance boost. In practice, however, this benefit can be difficult…

How Compute Express Link provides a means of connecting a wide range of heterogeneous computing elements.