We will discover how you can use basic or advanced aggregations using actual interview datasets.

We will discover how you can use basic or advanced aggregations using actual interview datasets.

In this article, I'll take you through a practical guide to PySpark that will help you get started with PySpark. PySpark Practical Guide.

Performing Data Visualization using PySpark

Apache Spark is one of the hottest new trends in the technology domain. It is the framework with probably the highest potential to realize…

Apache Spark runs fast, offers robust, distributed, fault-tolerant data objects, and integrates beautifully with the world of machine learning and graph analytics. Learn more here.

This post is about setting up a hyperparameter tuning framework for Data Science using scikit-learn/xgboost/lightgbm and pySpark

Here's how to install PySpark on your computer and get started working with large data sets using Python and PySpark in a Jupyter Notebook.

PySpark is a great language for performing exploratory data analysis at scale, building machine learning pipelines, and creating ETLs for…

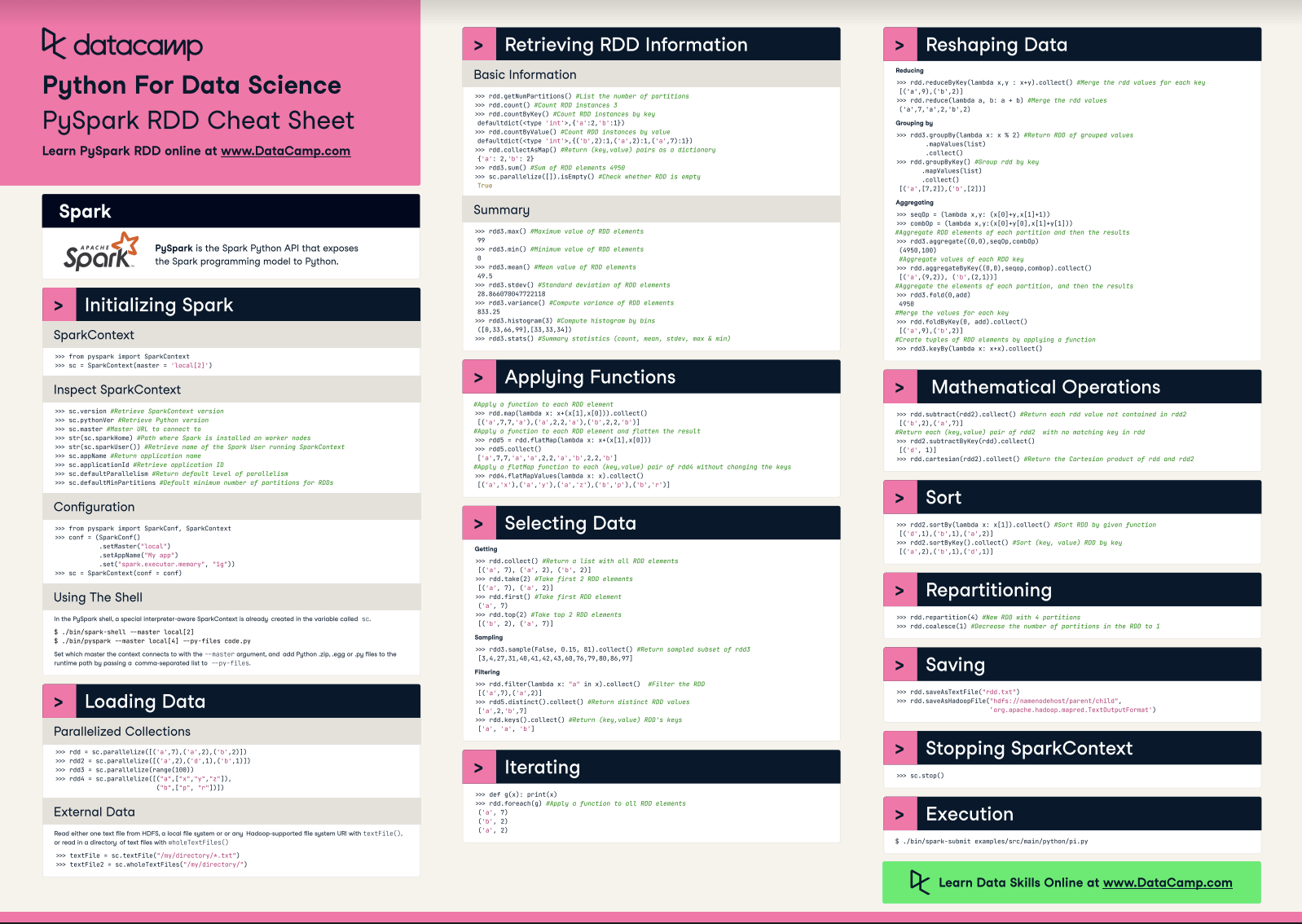

This PySpark cheat sheet with code samples covers the basics like initializing Spark in Python, loading data, sorting, and repartitioning.

PySpark-Tutorial provides basic algorithms using PySpark - mahmoudparsian/pyspark-tutorial