CXL 3.1 and PCIe 6.2 are transforming AI with improved data transfer and memory pooling.

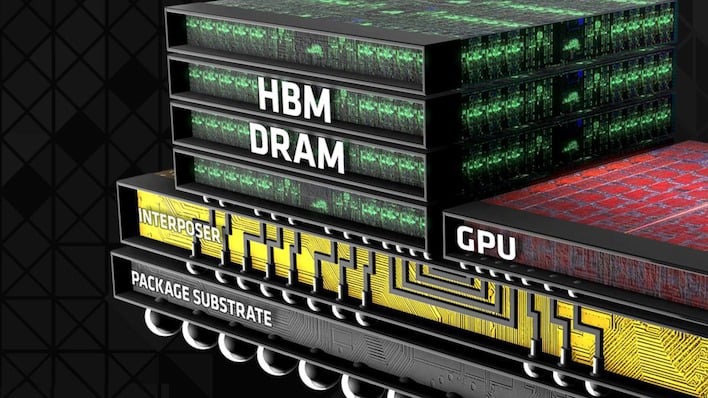

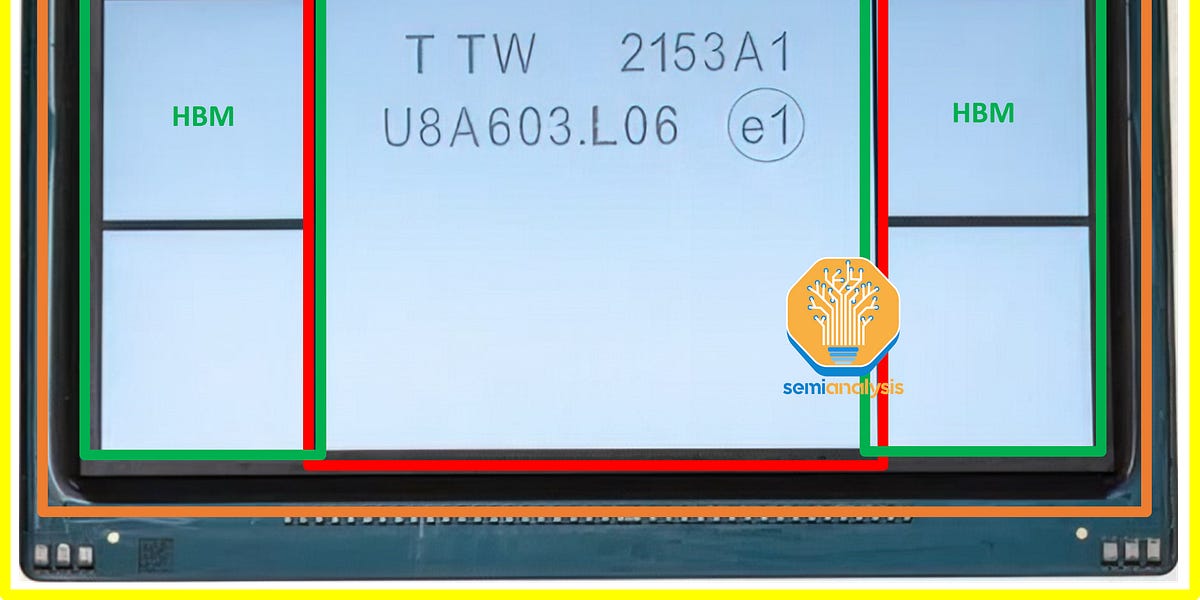

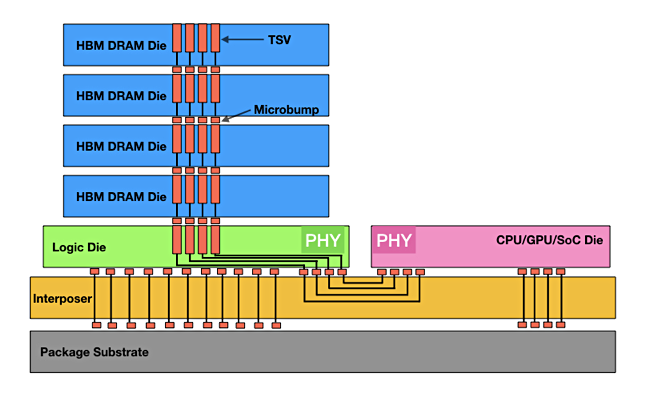

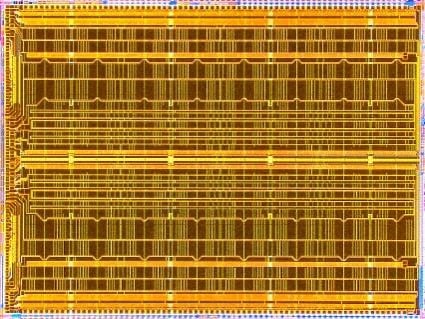

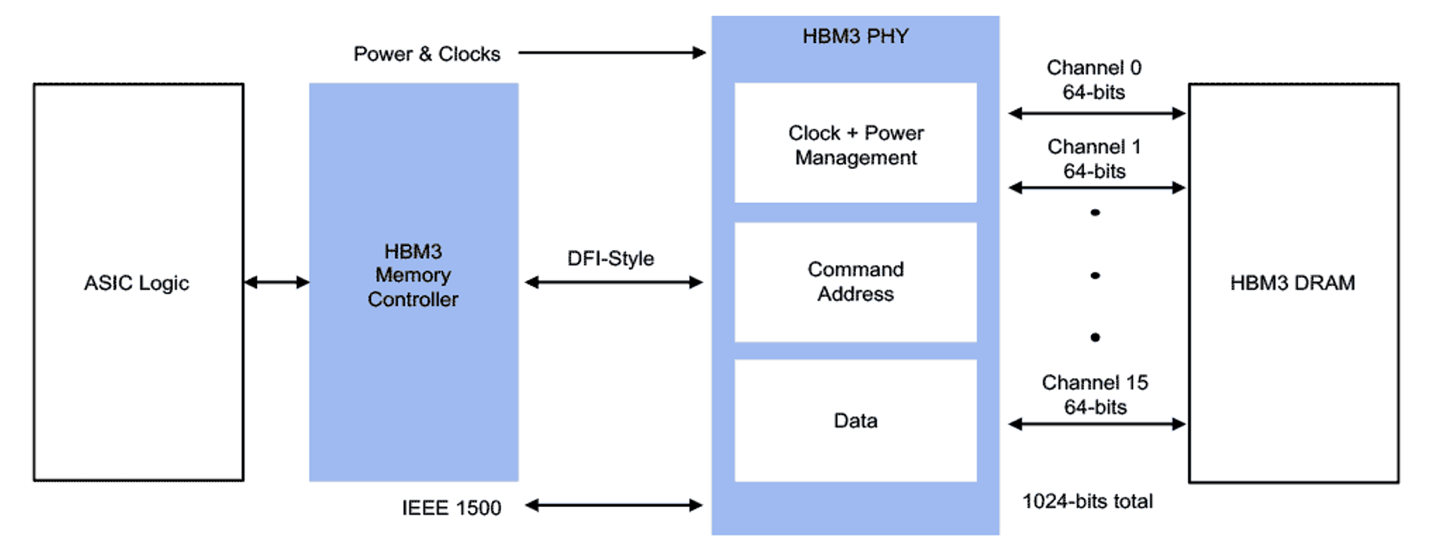

High-end xPUs (GPUs, TPUs, and other AI processors) use HBM memories to get the absolute highest memory bandwidth for training the Large Language Models (LLMs) used in today's generative AI systems. These processors, used in the thousands by hyperscale datacenters, can consume a kilowatt each. As a result, they must dissipate a phenomenal quantity of

The move to nanosheet transistors is a boon for SRAM

Discover how SK hynix’s switch to the advanced 6-phase RDQS scheme helped create the world’s best-performing HBM3E with enhanced capacity and reliability.

It hasn’t achieved commercial success, but there is still plenty of development happening; analog IMC is getting a second chance.

(The following is an update of a post that originally ran on 6 December 2013. It was republished in 2024 as a part of a series on The Memory Guy blog to honor the 3D NAND inventors who have received the 2024 FMS Lifetime Achievement Award.) A very unusual side effect of the move to

While Intel's primary product focus is on the processors, or brains, that make computers work, system memory (that's DRAM) is a critical component for performance. This is especially true in servers, where the multiplication of processing cores has outpaced the rise in memory bandwidth (in other wor...

A new technical paper titled “Integrated non-reciprocal magneto-optics with ultra-high endurance for photonic in-memory computing” was published by researchers at UC Santa Barbara, University of Cagliari, University of Pittsburgh, AIST and Tokyo Institute of Technology. Abstract: “Processing information in the optical domain promises advantages in both speed and energy efficiency over existing digital hardware for...

Katherine Bourzac / IEEE Spectrum: The Northwest-AI-Hub, which is researching hybrid gain cell memory that combines DRAM's density with SRAM's speed, gets a $16.3M CHIPS Act grant via the US DOD

Researchers developing dense, speedy hybrid gain cell memory recently got a boost from CHIPS Act funding

eBook: Nearly everything you need to know about memory, including detailed explanations of the different types of memory; how and where these are used today; what's changing, which memories are successful and which ones might be in the future; and the limitations of each memory type.

It's not clear when this mysterious memory will arrive, but SK Hynix is certainly talking a big game.

HBM4 is going to double the bandwidth of HBM3, but not through the usual increase in clock rate.
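As a rough illustration of how bandwidth can double without a faster clock: peak bandwidth per stack is the interface width times the per-pin data rate. The sketch below assumes the publicly reported figures of a 1024-bit interface for HBM3 and a 2048-bit interface for HBM4, both at 6.4 Gb/s per pin. These numbers are assumptions for illustration and are not stated in the item above; the helper name `peak_bandwidth_gb_s` is hypothetical.

```python
def peak_bandwidth_gb_s(bus_width_bits, pin_rate_gbit_s):
    """Peak bandwidth of one memory stack in GB/s.

    bus_width_bits: number of data pins on the interface
    pin_rate_gbit_s: data rate per pin in Gb/s
    """
    # bits per second across all pins, divided by 8 to get bytes
    return bus_width_bits * pin_rate_gbit_s / 8


# Assumed figures (not taken from the item above):
# HBM3 with a 1024-bit interface, HBM4 with a 2048-bit interface,
# both running at the same 6.4 Gb/s per pin.
hbm3 = peak_bandwidth_gb_s(1024, 6.4)
hbm4 = peak_bandwidth_gb_s(2048, 6.4)

print(f"HBM3: {hbm3:.1f} GB/s per stack")
print(f"HBM4: {hbm4:.1f} GB/s per stack")
print(f"ratio: {hbm4 / hbm3:.1f}x at an unchanged pin rate")
```

Under these assumptions the doubling comes entirely from the wider interface, which is why the pin rate can stay the same.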

The impact of quantum algorithms on different cryptographic techniques and what can be done about it.

DDR6 & LPDDR6 memory will feature super-fast speeds of up to 17.6 Gbps while the CAMM2 DRAM standard is headed to desktops.

DRAM research is difficult. Work that aims to be applicable to commodity devices should consider the sense amplifier internal layouts, transistor dimensions and circuit topologies used by real devices. These are all essential elements that must be considered while proposing new ideas. Unfortunately, they are not disclosed by DRAM vendors. As such, researchers are forced…

Demand for high-bandwidth memory is driving competition -- and prices -- higher

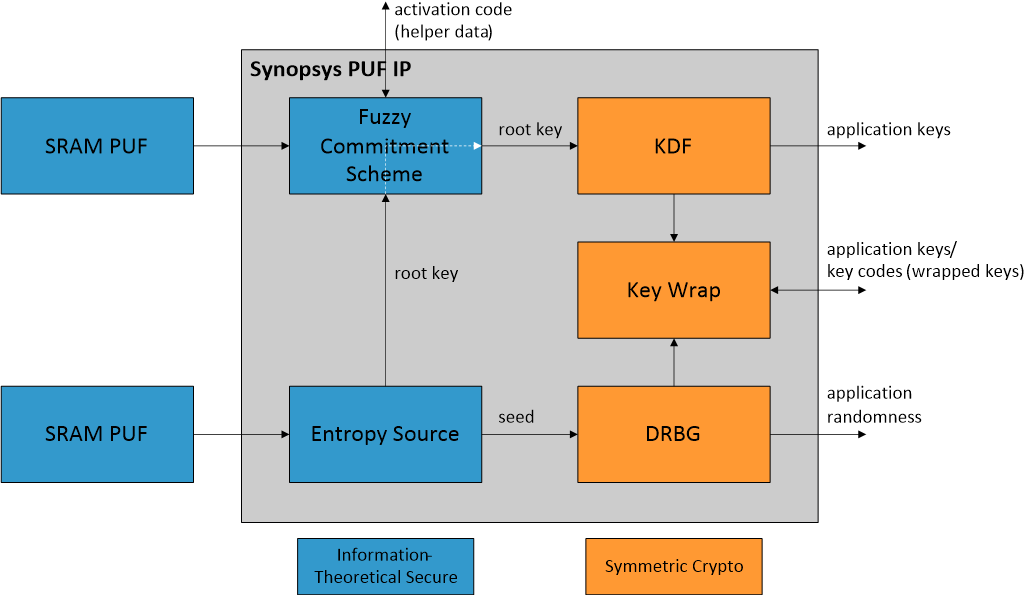

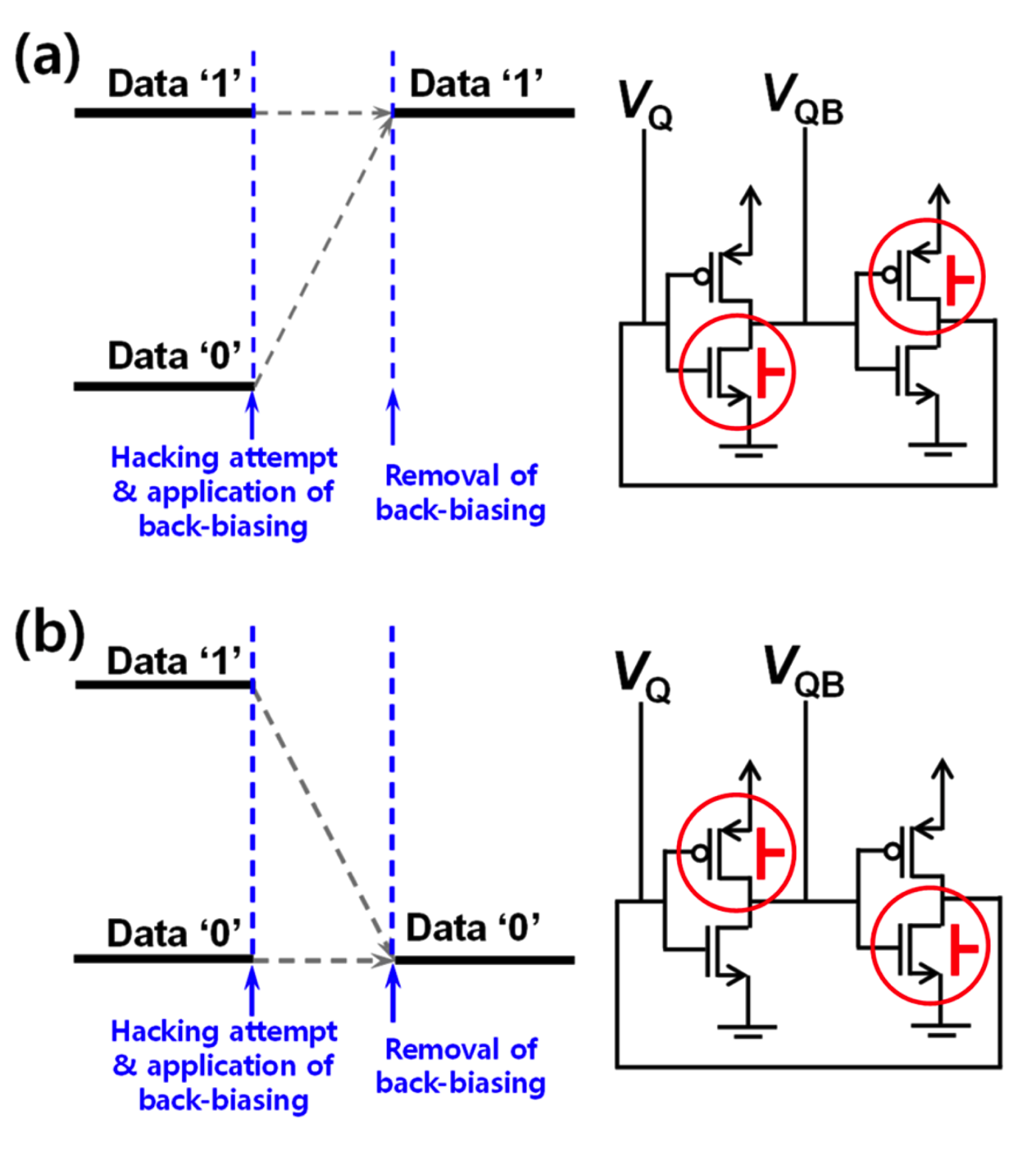

Volatile memory threat increases as chips are disaggregated into chiplets, making it easier to isolate memory and slow data degradation.

Rambus has unveiled its next-gen GDDR7 memory controller IP, featuring PAM3 Signaling, and up to 48 Gbps transfer speeds.

On the use and benefits of virtual fabrication in the development of DRAM saddle fin profiles

In-Memory Computing (IMC) introduces a new paradigm of computation that offers high efficiency in terms of latency and power consumption for AI accelerators. However, the non-idealities and...

A new technical paper titled “Functionally-Complete Boolean Logic in Real DRAM Chips: Experimental Characterization and Analysis” was published by researchers at ETH Zurich. Abstract: “Processing-using-DRAM (PuD) is an emerging paradigm that leverages the analog operational properties of DRAM circuitry to enable massively parallel in-DRAM computation. PuD has the potential to significantly reduce or eliminate costly...

Explore HBM3E and GDDR6 memory capabilities, including the benefits and design considerations for each

A conference schedule has revealed that Samsung's next-gen graphics memory is bonkers fast.

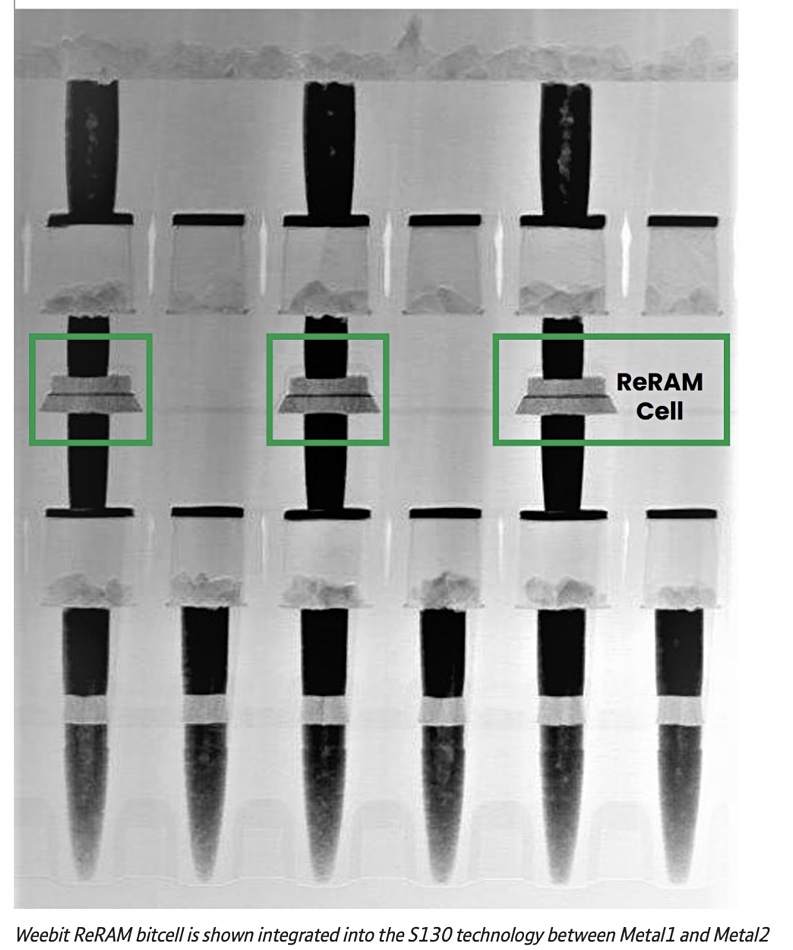

Micron’s NVDRAM chip could be a proving ground for technologies used in other products – and not become a standalone product itself. The 32Gb storage-class nonvolatile random-access memory chip design was revealed in a Micron paper at the December IEDM event, and is based on ferroelectric RAM technology with near-DRAM speed and longer-than-NAND endurance. Analysts we […]

We're getting a first glimpse of Samsung's next-generation HBM3E and GDDR7 memory chips.

A technical paper titled “Benchmarking and modeling of analog and digital SRAM in-memory computing architectures” was published by researchers at KU Leuven. Abstract: “In-memory-computing is emerging as an efficient hardware paradigm for deep neural network accelerators at the edge, enabling to break the memory wall and exploit massive computational parallelism. Two design models have surged:...

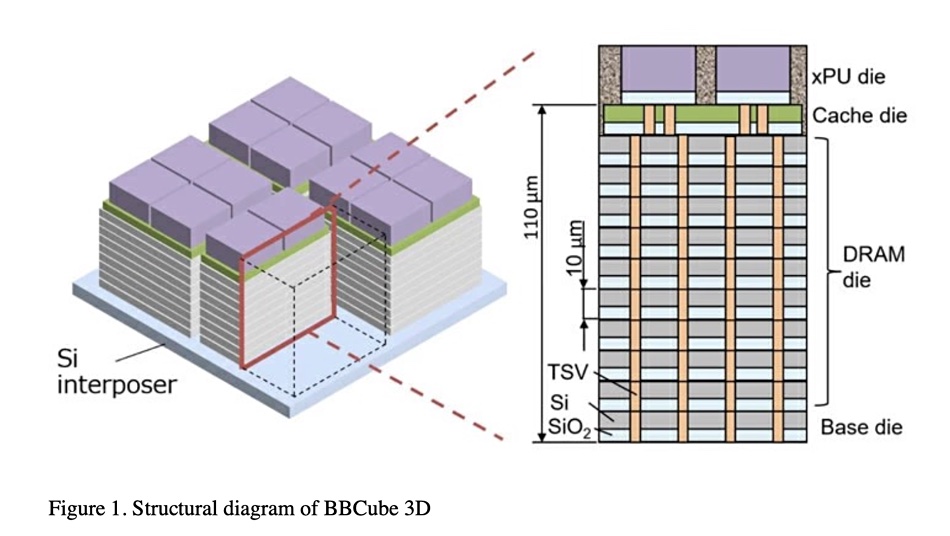

Tokyo Institute of Technology scientists have devised a 3D DRAM stack topped by a processor to provide four times more bandwidth than HBM.

Quarterly Ramp for Nvidia, Broadcom, Google, AMD, AMD Embedded (Xilinx), Amazon, Marvell, Microsoft, Alchip, Alibaba T-Head, ZTE Sanechips, Samsung, Micron, and SK Hynix

Though it'll arrive just in time for mid-cycle refresh from AMD, Nvidia, and Intel, it's unclear if there will be any takers just yet.

Panmnesia has devised CXL-based vector search methods that are much faster than Microsoft’s Bing and Outlook.

We asked memory semiconductor industry analyst Jim Handy of Objective Analysis how he views 3D DRAM technology.

New memory technologies have emerged to push the boundaries of conventional computer storage.

A new technical paper titled “Fundamentally Understanding and Solving RowHammer” was published by researchers at ETH Zurich. Abstract: “We provide an overview of recent developments and future directions in the RowHammer vulnerability that plagues modern DRAM (Dynamic Random Access Memory) chips, which are used in almost all computing systems as main memory. RowHammer is the...

USC researchers have announced a breakthrough in memristive technology that could shrink edge computing for AI to smartphone-sized devices.

ReRAM startup Intrinsic Semiconductor Technologies has raised $9.73 million to expand its engineering team and bring its product to market.

New applications require a deep understanding of the tradeoffs for different types of DRAM.

Need a New Year's resolution? How about you stop paying for memory you don't need?

A technical paper titled “Beware of Discarding Used SRAMs: Information is Stored Permanently” was published by researchers at Auburn University. The paper won “Best Paper Award” at the IEEE International Conference on Physical Assurance and Inspection of Electronics (PAINE) Oct. 25-27 in Huntsville. Abstract: “Data recovery has long been a focus of the electronics industry...

Conventional wisdom says that trying to attach system memory to the PCI-Express bus is a bad idea if you care at all about latency. The further the memory

Using new materials, UPenn researchers recently demonstrated how analog compute-in-memory circuits can provide a programmable solution for AI computing.

A new technical paper titled “HiRA: Hidden Row Activation for Reducing Refresh Latency of Off-the-Shelf DRAM Chips” was published by researchers at ETH Zürich, TOBB University of Economics and Technology and Galicia Supercomputing Center (CESGA). Abstract: “DRAM is the building block of modern main memory systems. DRAM cells must be periodically refreshed to prevent data...

Changes are steady in the memory hierarchy, but how and where that memory is accessed is having a big impact.

Increased transistor density and utilization are creating memory performance issues.

EE Times Compares SRAM vs. DRAM, Common Issues With Each Type Of Memory, And Takes A Look At The Future For Computer Memory.

Nvidia has staked its growth in the datacenter on machine learning. Over the past few years, the company has rolled out features in its GPUs aimed at neural

PARIS — If you’ve ever seen the U.S. TV series “Person of Interest,” during which an anonymous face in the Manhattan crowd, highlighted inside a digital

Innovative new clocking schemes in the latest LPDDR standard enable easier implementation of controllers and PHYs at maximum data rate as well as new options for power consumption.

Getting data in and out of memory faster is adding some unexpected challenges.

PALO ALTO, Calif., August 19, 2019 — UPMEM announced today a Processing-in-Memory (PIM) acceleration solution that allows big data and AI applications to run 20 times faster and with 10 […]

Experts at the Table: Which type of DRAM is best for different applications, and why performance and power can vary so much.

Emerging memory technologies call for an integrated PVD process system capable of depositing and measuring multiple materials under vacuum.

This article will take a closer look at the commands used to control and interact with DRAM.

Over the last two years, there has been a push for novel architectures to feed the needs of machine learning and more specifically, deep neural networks.

A full fix for the “Half-Double” technique will require rethinking how memory semiconductors are designed.

Pushing AI to the edge requires new architectures, tools, and approaches.

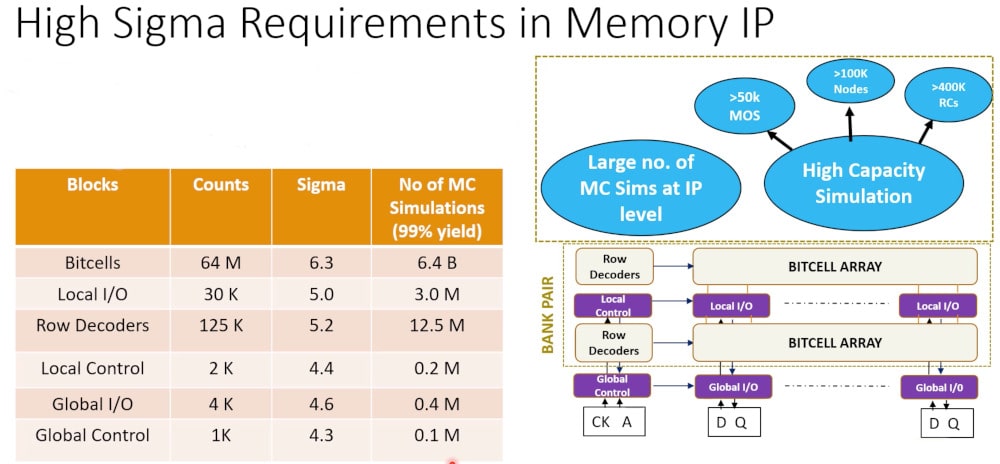

SRAM cell architecture introduction: design and process challenges assessment.

How side-band, inline, on-die, and link error correcting schemes work and the applications to which they are best suited.

Artificial neural networks and deep learning have taken center stage as the tools of choice for many contemporary machine learning practitioners and researchers. But there are many cases where you need something more powerful than basic statistical analysis, yet not as complex or compute-intensive as a deep neural network.

Looking at a typical SoC design today it's likely to…

With no definitive release date for DDR5, DDR4 is making significant strides.

Micron's GDDR6X is one of the star components in Nvidia's RTX 3080 and 3090 video cards. It's so fast it should boost gaming past the 4K barrier.

Efficient cache for gigabytes of data written in Go. - allegro/bigcache

Good inferencing chips can move data very quickly

You can process data that doesn’t fit in memory by using four basic techniques: spending money, compression, chunking, and indexing.
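Of the four techniques, chunking is the easiest to show in a few lines. The sketch below is a minimal illustration, not code from the linked article: it computes a mean over a stream of numbers while holding only one small chunk in memory at a time. The function name `chunked_mean`, the one-number-per-line input format, and the chunk size are all assumptions made for the example.

```python
import io


def chunked_mean(lines, chunk_size=1000):
    """Mean of a stream with one number per line.

    At most chunk_size parsed values are held in memory at once;
    only a running total and count survive between chunks.
    """
    total = 0.0
    count = 0
    chunk = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        chunk.append(float(line))
        if len(chunk) >= chunk_size:
            # Fold the full chunk into the running aggregates, then drop it.
            total += sum(chunk)
            count += len(chunk)
            chunk.clear()
    # Fold in the final, possibly partial, chunk.
    total += sum(chunk)
    count += len(chunk)
    if count == 0:
        raise ValueError("no numeric lines found")
    return total / count


# An in-memory buffer stands in for a file too large to load at once;
# an open file object can be passed the same way.
data = io.StringIO("\n".join(str(i) for i in range(1, 10001)))
print(chunked_mean(data, chunk_size=256))
```

The same pattern applies to any aggregate that can be updated incrementally (sums, counts, minima, maxima); statistics that need the whole dataset at once, such as an exact median, call for one of the other techniques.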

“Industry 4.0” is already here for some companies—especially silicon foundries.

Comparing different machine learning use-cases and the architectures being used to address them.

The previous post in this series (excerpted from the Objective Analysis and Coughlin Associates Emerging Memory report) explained why emerging memories are necessary. Oddly enough, this series will explain bit selectors before defining all of the emerging memory technologies themselves. The reason why is that the bit selector determines how small a bit cell can

Processing In Memory: Growing volume of data and limited improvements in performance create new opportunities for approaches that never got off the ground.

Micron notes that GDDR6 has silicon changes, channel enhancements, and talks a bit about performance measurements of the new memory.

New computing architectures aim to extend artificial intelligence from the cloud to smartphones