Explore the Google vs OpenAI AI ecosystem battle post-o3. Deep dive into Google's huge cost advantage (TPU vs GPU), agent strategies & model risks for enterprise


The codename of the Google Pixel 11's Tensor G6 has leaked along with that of the Tensor G5 chip, which is expected to be built on TSMC's 2nm manufacturing process.

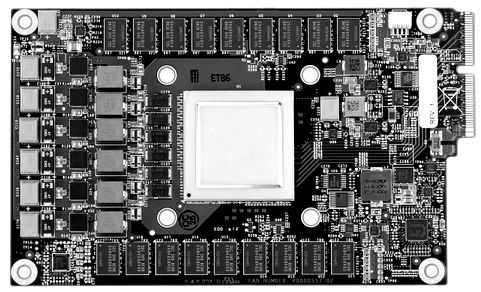

Google's sixth-generation tensor processing unit (TPU) stole the company's I/O developer conference stage with its higher-than-ever computing performance.

Google revealed the sixth iteration of its Tensor Processing Unit (TPU) for data centers, named Trillium, at I/O 2024.

Google’s new AI chip is a rival to Nvidia, and its Arm-based CPU will compete with Microsoft and Amazon

Startup Groq has developed a machine learning processor that it claims blows GPUs away in large language model workloads – 10x faster than an Nvidia GPU at 10 percent of the cost, and needing a tenth of the electricity. Update: Groq model compilation time and time from access to getting it up and running clarified. […]

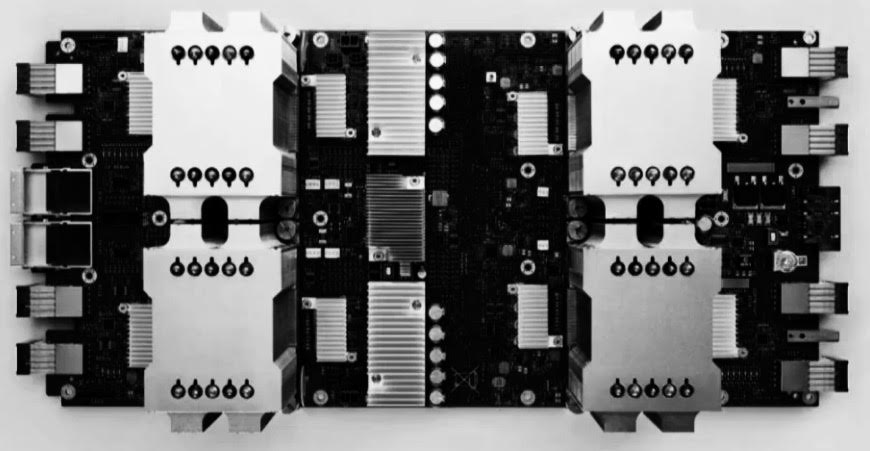

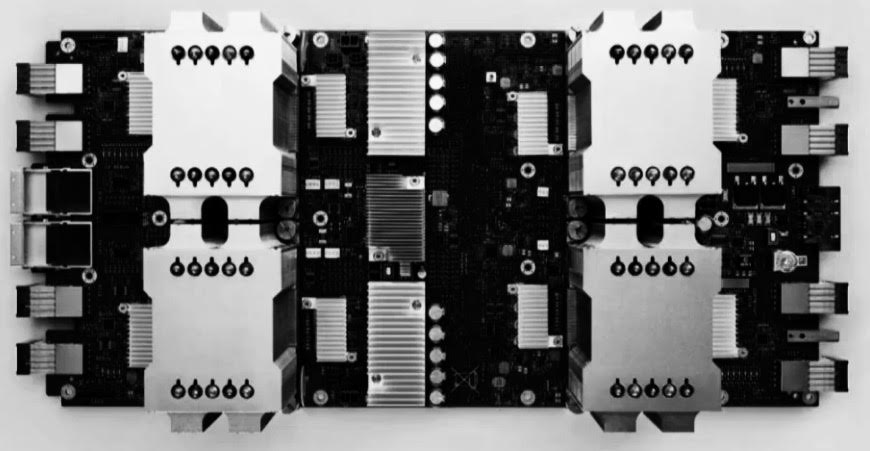

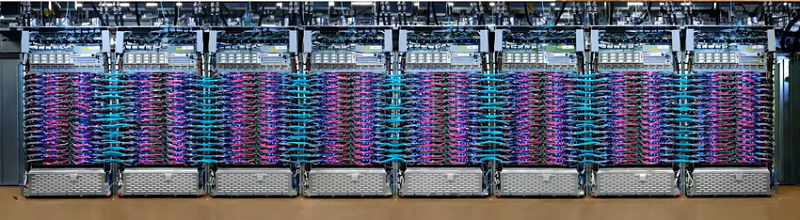

A new technical paper titled “TPU v4: An Optically Reconfigurable Supercomputer for Machine Learning with Hardware Support for Embeddings” was published by researchers at Google. Abstract: “In response to innovations in machine learning (ML) models, production workloads changed radically and rapidly. TPU v4 is the fifth Google domain specific architecture (DSA) and its third supercomputer...

As we previously reported, Google unveiled its second-generation Tensor Processing Unit (TPU2) at Google I/O last week. Google calls this new

In this work, we analyze the performance of neural networks on a variety of heterogeneous platforms. We strive to find the best platform in terms of raw benchmark performance, performance per watt a…

Google did its best to impress this week at its annual I/O conference. While Google rolled out a bunch of benchmarks that were run on its current Cloud TPU

Four years ago, Google started to see the real potential for deploying neural networks to support a large number of new services. During that time it was

Google detailed TPUv4 at Google I/O 2021. They're accelerator chips that deliver high performance on AI workloads.

The inception of Google’s effort to build its own AI chips is quite well known by now, but in the interests of review, we’ll note that as early as 2013 the