AI bots are straining our websites by ignoring robots.txt and scraping our content. Learn how you can fight back with tools, plugins, and CDN services to block unwanted AI crawlers and protect resources.
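robots.txt is the first line of defense, but it only works on crawlers that choose to honor it. The minimal sketch below assumes a site serves the robots.txt shown, and uses Python's standard-library `urllib.robotparser` to show how a compliant crawler would read it. `GPTBot` (OpenAI) and `CCBot` (Common Crawl) are real crawler user agents. The `/private/` path and the example URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: block two AI crawlers entirely, and block everyone
# else only from a placeholder /private/ path.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt content directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if a crawler that honors robots.txt may fetch url."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for agent in ("GPTBot", "CCBot", "Mozilla/5.0"):
        print(agent, is_allowed(rp, agent, "https://example.com/blog/post"))
```

Compliance is voluntary, so a bot that skips this check is not affected at all. Blocking those bots means matching the User-Agent or source IP at the server, plugin, or CDN layer, which is what the tools above are for.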

A Step-by-Step Guide to Building a Trend Finder Tool with Python: Web Scraping, NLP (Sentiment Analysis & Topic Modeling), and Word Cloud Visualization

Here's the handout for a workshop I presented this morning at [NICAR 2025](https://www.ire.org/training/conferences/nicar-2025/) on web scraping, focusing on lesser-known tips and tricks that became possible only with recent developments …

Web scraping has emerged as a crucial method for gathering data, allowing companies and researchers to extract useful information from the wealth of publicly accessible online content. With so many scraping tools available, each with its own features and capabilities, choosing the right one can be difficult. This article examines the 15 best web scraping tools on the market, along with their key features, advantages, and applications. Together they offer a range of options for extracting, processing, and analyzing data from various web sources.

Scrapy
A powerful, open-source Python framework called

In the rapidly advancing field of Artificial Intelligence (AI), effective use of web data can lead to unique applications and insights. A recent tweet has drawn attention to Firecrawl, a powerful tool created by the Mendable AI team. Firecrawl is a state-of-the-art web scraping tool built to tackle the complex problems involved in extracting data from the internet. Web scraping is useful, but it often means overcoming challenges such as proxies, caching, rate limits, and JavaScript-rendered content. Firecrawl addresses these issues head-on, which makes it a valuable tool for data scientists. Even without a sitemap,

Get started with web scraping in Ruby using this step-by-step tutorial! Learn how to scrape a site with the Nokogiri and Selenium libraries.

Master your web scraping skills. Learn all the tips and insights we know about data collection at scale. Everything from guides to easy-to-follow tutorials.

Web scraping is something I never thought I'd do. I'm primarily a UI developer, although my career...

Kimurai is a modern web scraping framework written in Ruby that works out of the box with Headless Chromium/Firefox, PhantomJS, or simple HTTP requests, and allows you to scrape and interact with JavaScrip...

Learn web scraping with Ruby with this step-by-step tutorial. We will look at the different ways to scrape the web in Ruby through lots of examples using gems like Nokogiri, Kimurai, and HTTParty.

Introduction: Web scraping, also known as data extraction or data scraping, is the process...

a.k.a. leave BeautifulSoup in the past and embrace SQL. I used DALL·E to generate thumbnails for this post: “cute cartoon|claymation abominable snowman scraping ice off his frozen car windshield” is nightmare fuel. Some of the most common web-scraping tasks can be done in pure SQLite, meaning no Python, Node, Ruby, or other programming language is necessary, only the SQLite CLI and some extensions. The main extension that enables this is sqlite-http, which allows you to make HTTP requests and sa

Create and run automated tests for desktop, web and mobile (Android and iOS) applications (.NET, C#, Visual Basic .NET, C++, Java, Delphi, C++Builder, Intel C++ and many others).

It’s not a secret that businesses and individuals use web scrapers to collect public data from...

86K subscribers in the ruby community. Celebrate the weird and wonderful Ruby programming language with us!

You want to make friends with tabula-py and Pandas

It’s been a little while since I traded code with anyone. But a few weeks ago, one of our entrepreneurs-in-residence, Javier, who joined Redpoint from VMWare, told me about a Ruby gem called Mechanize that makes it really easy to crawl websites, particularly those with username/password logins. In about 30 minutes I had a working LinkedIn crawler built, pulling the names of new followers, new LinkedIn connections and LinkedIn status updates.

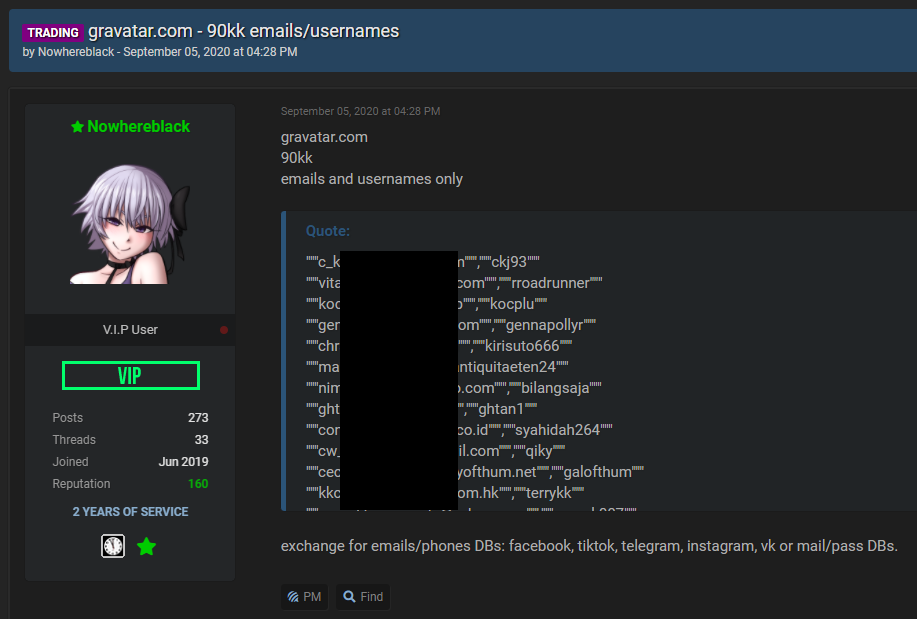

A decade and a bit ago, during my tenure at Pfizer, a colleague's laptop containing information about customers, healthcare providers and other vendors was stolen from their car ([NH DOJ breach notice](https://www.doj.nh.gov/consumer/security-breaches/documents/pfizer-20110610.pdf)). The machine had full disk encryption and it's not known whether the

How to use the python web scraping framework Scrapy to crawl indeed.com. Learn data engineering strategies for getting actionable insights from public information.

Data extraction tools give you the boost you need for gathering information from a multitude of data sources. These four data extraction tools will help liberate you from manual data entry, understand complex documents, and simplify the data extraction process.

In this article, I'm going to walk you through a tutorial on web scraping to create a dataset using Python and BeautifulSoup.
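The tutorial uses BeautifulSoup. To avoid a third-party dependency, the sketch below uses the standard library's `html.parser` as a stand-in for the same idea: it turns an HTML table into rows, and `csv` writes those rows out as a dataset. The HTML snippet, the `books` table, and its columns are made up for illustration. A real scraper would download the page first and should respect the site's robots.txt.

```python
import csv
import io
from html.parser import HTMLParser
from typing import List, Optional

# Hypothetical page fragment; a real scraper would download this HTML.
SAMPLE_HTML = """
<table id="books">
  <tr><th>title</th><th>price</th></tr>
  <tr><td>Dune</td><td>9.99</td></tr>
  <tr><td>Neuromancer</td><td>7.50</td></tr>
</table>
"""


class TableParser(HTMLParser):
    """Collect the text of every <th>/<td> cell, grouped by <tr> row."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: List[List[str]] = []
        self._row: Optional[List[str]] = None   # cells of the row being built
        self._cell: Optional[List[str]] = None  # text chunks of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a cell; whitespace between tags is ignored.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_csv(html: str) -> str:
    """Extract all table rows from html and return them as CSV text."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    csv.writer(out).writerows(parser.rows)
    return out.getvalue()


if __name__ == "__main__":
    print(table_to_csv(SAMPLE_HTML))
```

With BeautifulSoup the cell walking collapses into a couple of `find_all` calls. The overall flow stays the same: fetch, parse, select, and write rows.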

The first step of any data science project is data collection.

Introduction to Web Scraping: Businesses need better information to target and reach wider audiences. They get this information by scraping the web for content from social media platforms,...

You don’t need any coding skills to scrape data from websites.

A tutorial about an HTML parser for Python 3. Learn the basics of a library for easily parsing web pages and extracting useful information.
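The blurb doesn't name the library, so the sketch below uses Python's built-in `html.parser` as a stand-in. It shows the most common extraction task, collecting every link on a page and resolving relative `href` values against the page URL with `urllib.parse.urljoin`. The sample HTML and base URL are hypothetical.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from the href attribute of every <a> tag."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        # attrs is a list of (name, value) pairs; value can be None for bare attributes.
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links ("/about", "post-1") against the page URL.
            self.links.append(urljoin(self.base_url, href))


def extract_links(html: str, base_url: str) -> List[str]:
    """Return all link targets in html as absolute URLs, in document order."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    page = '<p><a href="/about">About</a> <a href="post-1">Post</a> <a name="top">x</a></p>'
    print(extract_links(page, "https://example.com/blog/"))
```

`HTMLParser` lowercases tag and attribute names, so `<A HREF=...>` is matched too. Anchors without an `href` are skipped.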

Mixnode allows you to execute SQL against the web.