All posts, sorted by date (oldest first)

categories:

tags: platforms prodmgmt

date: 28 Jan 2025

slug:raindrop-prodmgmt-platforms

-->

prodmgmt/ecommerce (condensed)

categories:

tags: ecommerce prodmgmt

date: 29 Jan 2025

slug:raindrop-prodmgmt-ecommerce

-->

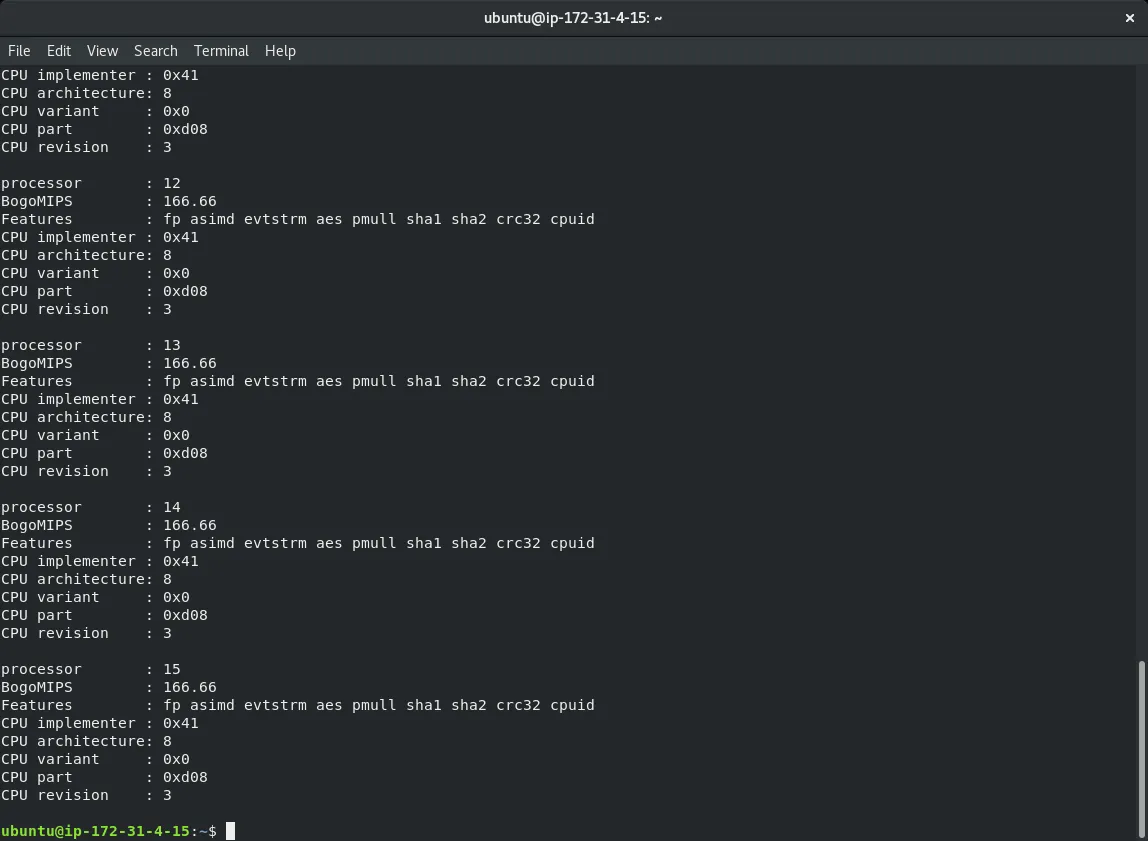

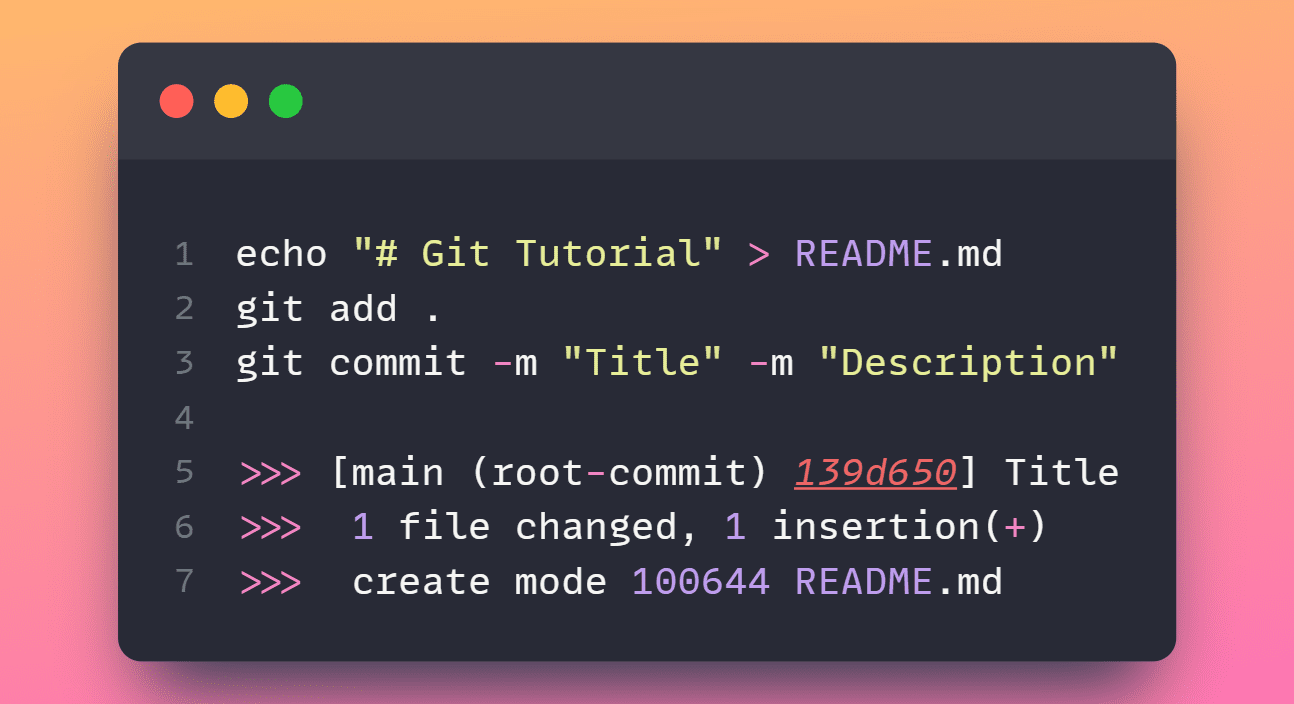

bash resources

categories:

tags: bash linux

date: 25 Mar 2025

slug:bash-resources

In this tutorial, we’ll cover 10 essential Bash shell commands every data scientist should know—commands that save time, simplify tasks, and keep you focused on insights rather than busywork.

Here are 10 powerful one-liners that can help you quickly accomplish essential data tasks.

Introduction For beginners venturing into the world of Linux, understanding shell expansion is a crucial step towards mastering the command line. Shell expansion is a powerful feature that allows users to generate complex commands and manipulat...

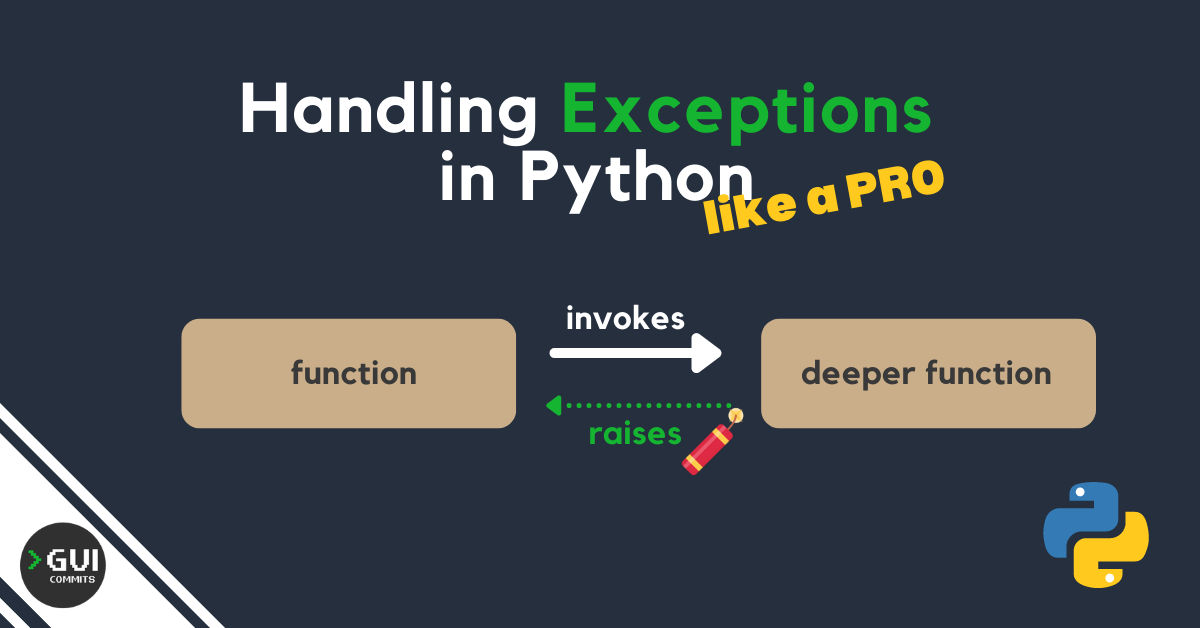

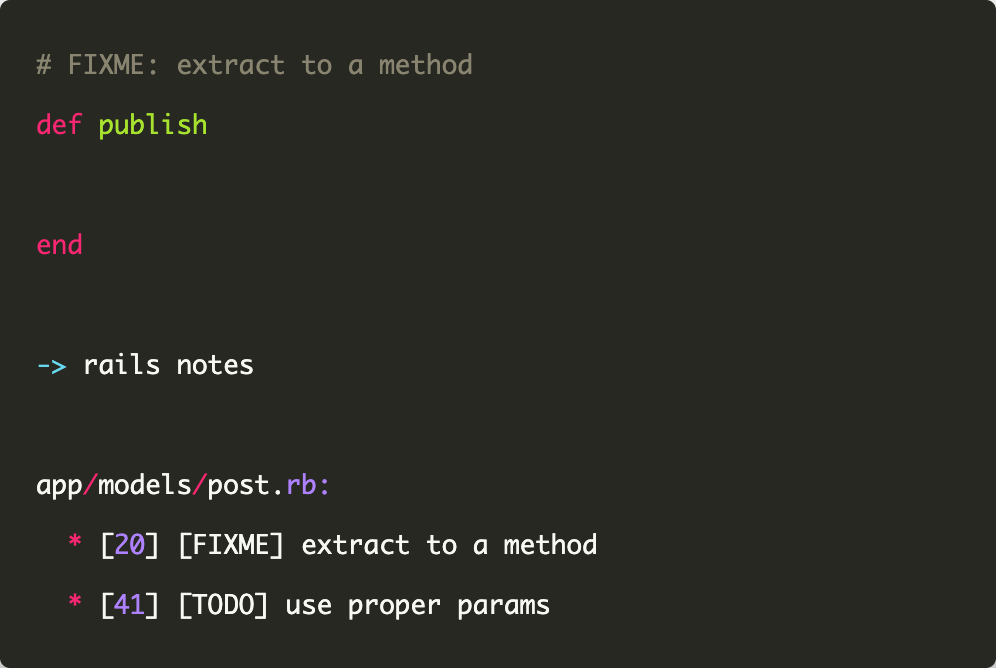

It’s more than rails!

Bash scripting, a cornerstone of Unix and Linux system administration, offers powerful tools to automate repetitive tasks, streamline workflows, and handle complex operations. For those already comfortable with basic scripting, diving into advanced techniques can unlock new levels of efficiency and capability. This post will explore advanced shell scripting techniques in Bash, focusing on script optimization, robust error handling, and automating complex system administration tasks. Script Optimization Optimization is crucial for ensuring that your scripts run efficiently, especially when dealing with large datasets or intensive tasks. Here are some key techniques to optimize your Bash scripts.

$BASH_REMATCH is a special array variable in the Bash shell that stores the results of matching a regular expression using the =~ operator…

📖 A collection of pure bash alternatives to external processes. - dylanaraps/pure-bash-bible

As a developer, you most likely spend a significant amount of time working with the command-line...

Explains three methods to get and extract filename extension in Bash for Linux and Unix shell scripting needs.

In Linux, there are shell built-in commands which you are already using but never paid attention to. Learn more about them in this tutorial.

While for maybe the most popular bash loop, wait until you discover until. Pun intended :)

Here are a couple of ways for reading file line by line in the Bash shell.

In this quick Bash tip, you'll learn about appending to an existing array in bash.

The exec command in shell scripts is super useful for logging, reading from files and running commands by replacing the current process.

The bash shell has some special variables that have specific usages and purposes. Learn more about them here.

This is an open-source introduction to Bash scripting ebook that will help you learn the basics of Bash scripting and start writing awesome Bash scripts that will help you automate your daily SysOps, DevOps, and Dev tasks...

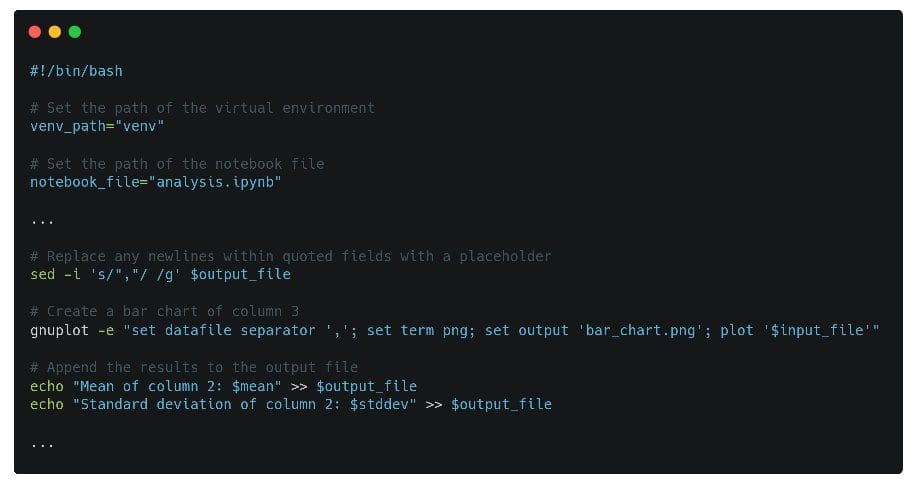

In this article, we are going to take a look at five different data science-related scripting-friendly tasks, where we should see how flexible and useful Bash can be.

Brace expansion in the bash shell is a lesser known but an awesome feature. Learn about using them like a Pro Linux user with practical examples.

This article is about a few quick thumb rules I use when writing shell scripts that I’ve come to appreciate over the years. Very opinionated....

Have you ever wondered how a Web server works under the hood? Moreover, would you be willing to...

A collection of handy Bash One-Liners and terminal tricks for data processing and Linux system maintenance. - onceupon/Bash-Oneliner

Learn how to find PID using a process name in Linux. Also learn to get the parent process ID (PPID) of the given process.

A complete guide for newcomers and advanced users to correct usage and deepen understanding of the bash shell language.

📖 A collection of pure bash alternatives to external processes. - dylanaraps/pure-bash-bible

Variables · Functions · Interpolation · Brace expansions · Loops · Conditional execution · Command substitution · One-page guide to Bash scripting

Intro Recently I wanted to deepen my understanding of bash by researching as much of it as possible. Because I felt bash is an often-used (and under-understood) technology, I ended up writing …

Get more efficient by using condensed versions of long Bash commands.

I write a letter to my past self about the Shell's importance I wish I'd focused on earlier in my career.

Update 25 Sep 2019: This article is now available in Japanese, thanks to the hard work of ラナ・クアール....

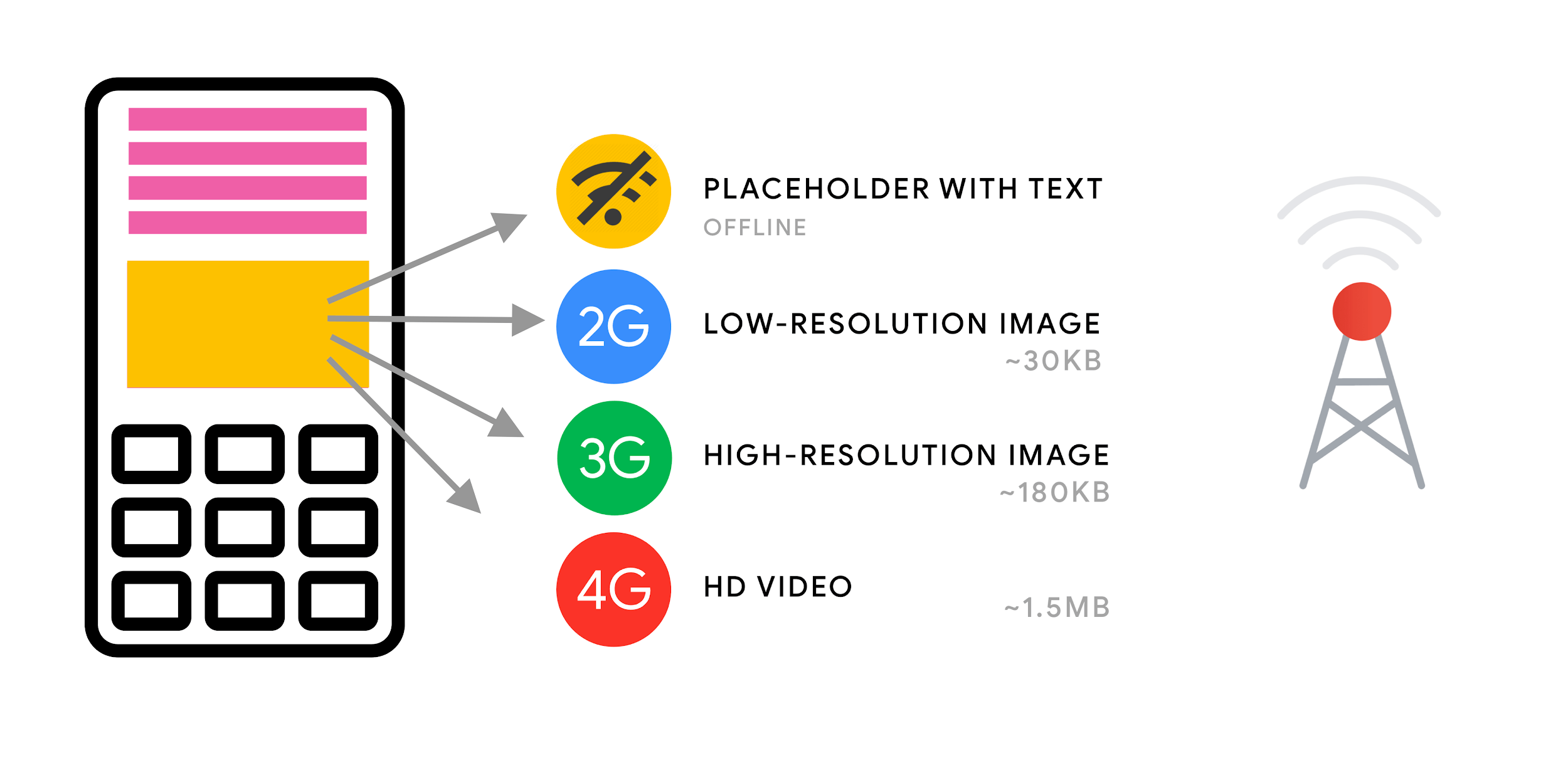

Images take up to 50% of the total size of an average web page. And if images are not optimized, users end up downloading extra bytes. And if they’re

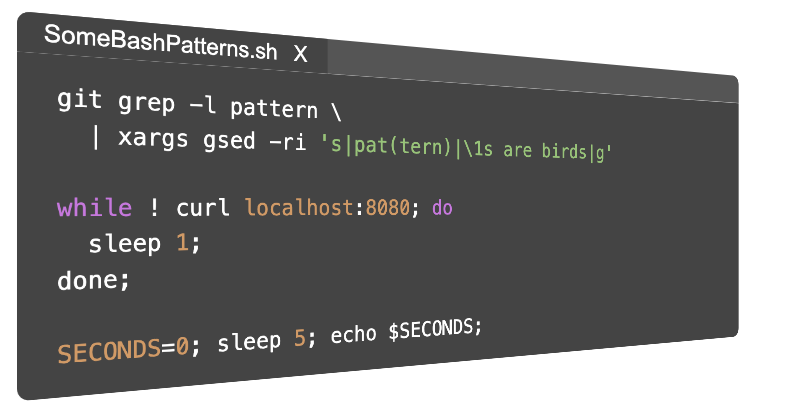

5 bash tricks I find myself using often that I wish I'd discovered sooner.

The seemingly insignificant #! characters at the beginning of a shell script has a major significance on how your script will be executed.

You might have used variables in Bash before, but probably not like this.

Learn to process thousands of items reliably and repeatably in this installment.

The .bash_logout file is the individual login shell cleanup file. It is executed when a login shell exits. This file exists in the user's home directory. For example, $HOME/.bash_logout. This file is useful if you want to run task or another script or command automatically at logout. For example, clear the mysql command line history stored in ~/.mysql_history or to make a backup of files you can use this file.

In this post, I try to explore various ways to repeat a character and string in Bash 'n' times that must run on macOS/FreeBSD and Linux.

Commandline one liners that makes your workflow more productive

What exactly happens when we run a file starting with #! (aka shebang), and why some people use #!/us...

Free Introduction to Bash Scripting eBook.

Bash aliases are essentially shortcuts that can save you from having to remember long commands and eliminate a great deal of typing when you are working on the command line.

Ruby is one of the most popular languages today. It has an elegant syntax and it is the language behind the powerful Ruby on Rails framework. In this tutorial we will show you three different ways to install Ruby on Ubuntu 18.04 system.

Dead simple testing framework for Bash with coverage reporting - Checksum/critic.sh

Bash-my-AWS is a simple but powerful set of CLI commands for managing resources on Amazon Web Services.

A short description and screenshot of some useful command line tools I use that aren't part of typical POSIX environment.

You can do more data science than you think from the terminal.

I've long been impressed by shell one-liners. They seem like magical incantations. Pipe a few terse commands together, et voilà! Out pops the solution to a problem that would seem to require pages of code. Are these one-liners real or mythology? To some extent, they're both. Below I'll give a famous real example. Then I'll argue

Why unix pipes are awesome.

Five lesser-known command line utilities you'll want to install right away.

A collection of small bash scripts for heavy terminal users - alexanderepstein/Bash-Snippets

other, mainly PDFs (7/6/22)

globs vs regexes

exit codes

if, [, [[

set

<()

quoting

top shortcuts

startup order

getopts

2. help

3. view/edit files

4. create/delete files & directories

5. move/copy files, making links, command history

6. directory trees, disk usage, processes

7. misc

8. disk, memory, cpu usage

9. REPLs & versions

10. environment vars

11. basic scripting

12. config files

13. find

14. download

15. redirect

16. superuser

17. file permissions

18. users & groups

19. text ops

20. pattern matches

21. copy files over SSH

22. long-running processes

23. more

2. Download something - be able to resume if something goes wrong

3. Generate a random, 20-character password for a new online account

4. Downloaded a file - test the checksum

5. Limit ping to five attempts

6. Start a web server in any folder

7. See how fast your network is with Speedtest-cli

8. See your external IP address

9. See your local IP address?

10. Clear the screen.

2. std output (22)

3. std input (8)

4. executing commands (10)

5. shell variables

6. logic & math ops

7. tools

8. more tools

9. finding files

10. more scripting features

11. dates & times

12. example user tasks as scripts

13. parsing, etc

14. secure scripts

15. advanced topics

16. config / customization

17. admin tasks

18. shortcuts

19. tips & traps

A. options (multiple)

B. examples

C. command line processing

D. revisino control

E. build from source

2. shebang

3. directory navigation

4. listing files

5. cat

6. grep

7. aliasing

8. jobs & processes

9. redirects

10. control structures

quoting variables

global, local, environment variables

for loops

if statements

functions

always quote your vars

return quotes

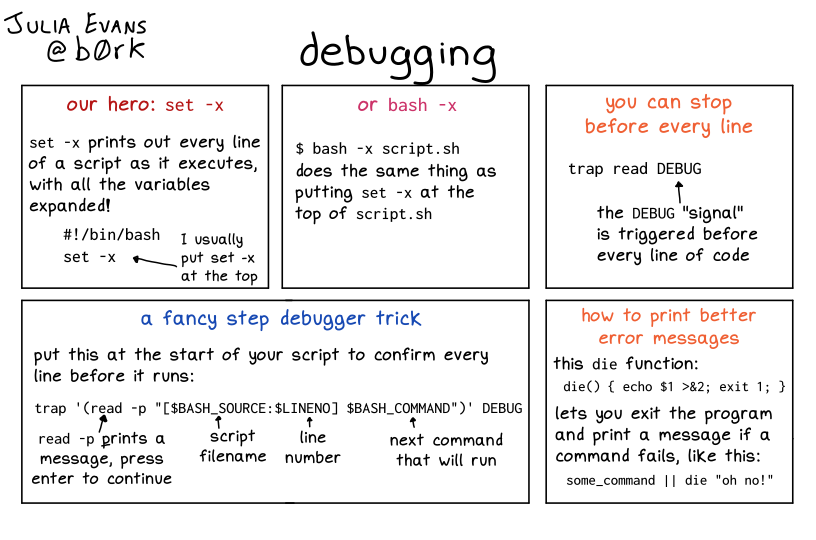

background processes,br> set -e, set -x, set -u

linting

2. param expansions

3. loops

4. functions

5. conditionals

6. arrays

7. dicts

8. options 9. command history

10. miscellaneous*

2. actions

3. package managers

4. dot files

5. VIM

6. aliases

7. scripts

-->

prodmgmt/platforms

categories:

tags: platforms prodmgmt

date: 26 Mar 2025

slug:raindrop-prodmgmt-platforms

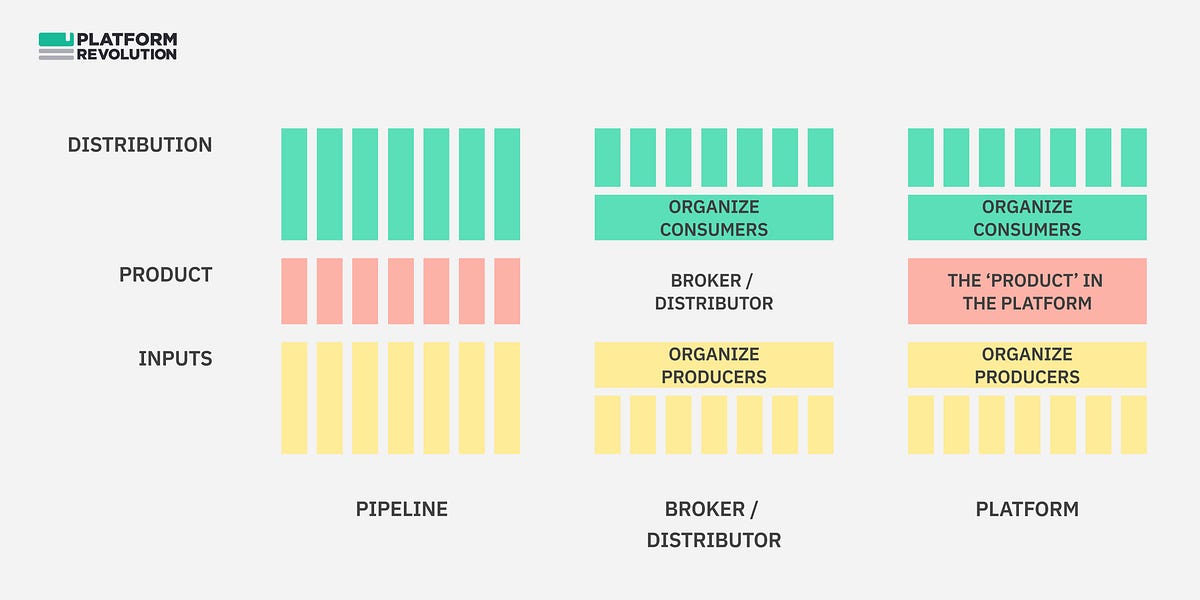

Companies that want to launch a B2B marketplace face a choice: own the platform, spin it off, or create a startup.

NEXT POST: Part II of my thoughts on TikTok, on how the app design is informed by its algorithm and vice versa in a virtuous circle.

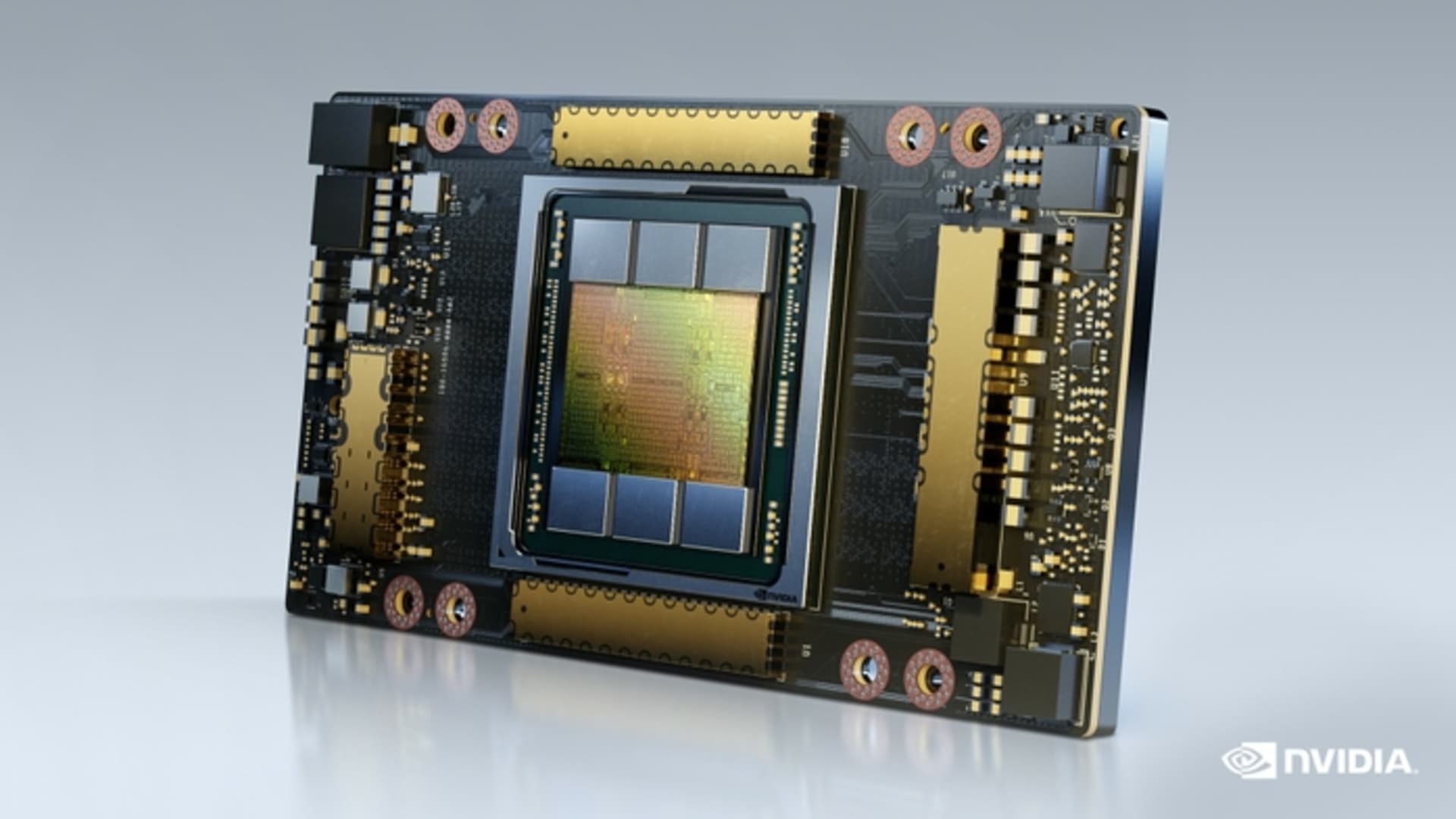

An interview with Daniel Gross and Nat Friedman about Stargate, DeepSeek, and where the margins and moats will come with models.

Thoughts on business models that don't seem to make perfect sense

By providing a foundation for collaboration, platforms can create network effects, where the value of the platform increases as more participants join.

On the risks of over-emphasizing platform thinking

Last updated: Jan 30, 2021 Are you looking for ideas to unlock your long-term business value? If you shook your head in yes, remember that business model is one of the ways to streamline your business process. Precisely, a business model is a holistic framework to define, understand, and design your entire business in the…

Business models based on the compiled list at http://news.ycombinator.com/item?id=4924647. I find the link very hard to browse, so I made a simple version in Markdown instead. · GitHub

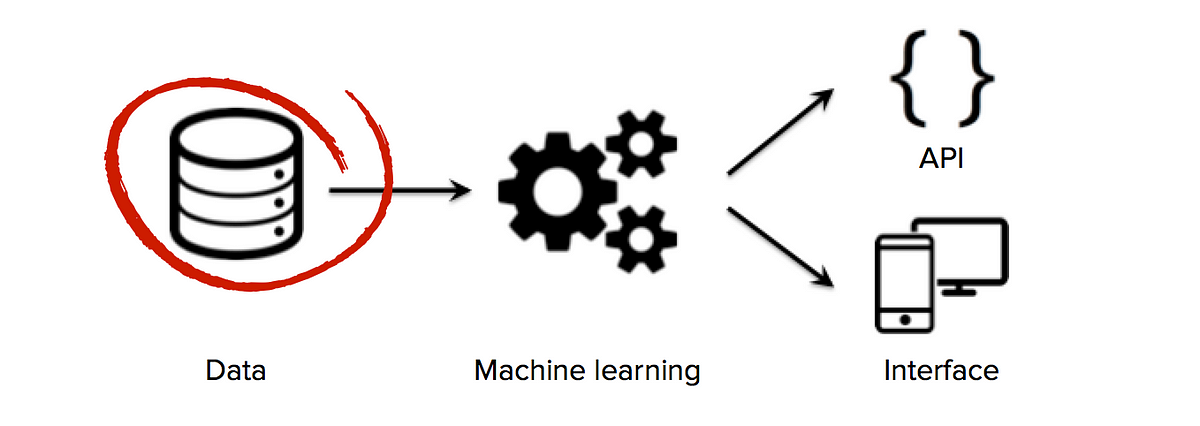

Why Systems of Intelligence™ are the Next Defensible Business Model

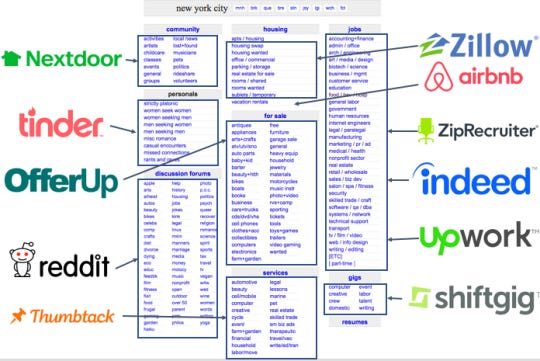

The unbundling of Excel is just as important as the unbundling of Craigslist. Here's what you need to know about the Excel Economy and how SaaS companies can take advantage of different verticals and use cases that Excel has dominated.

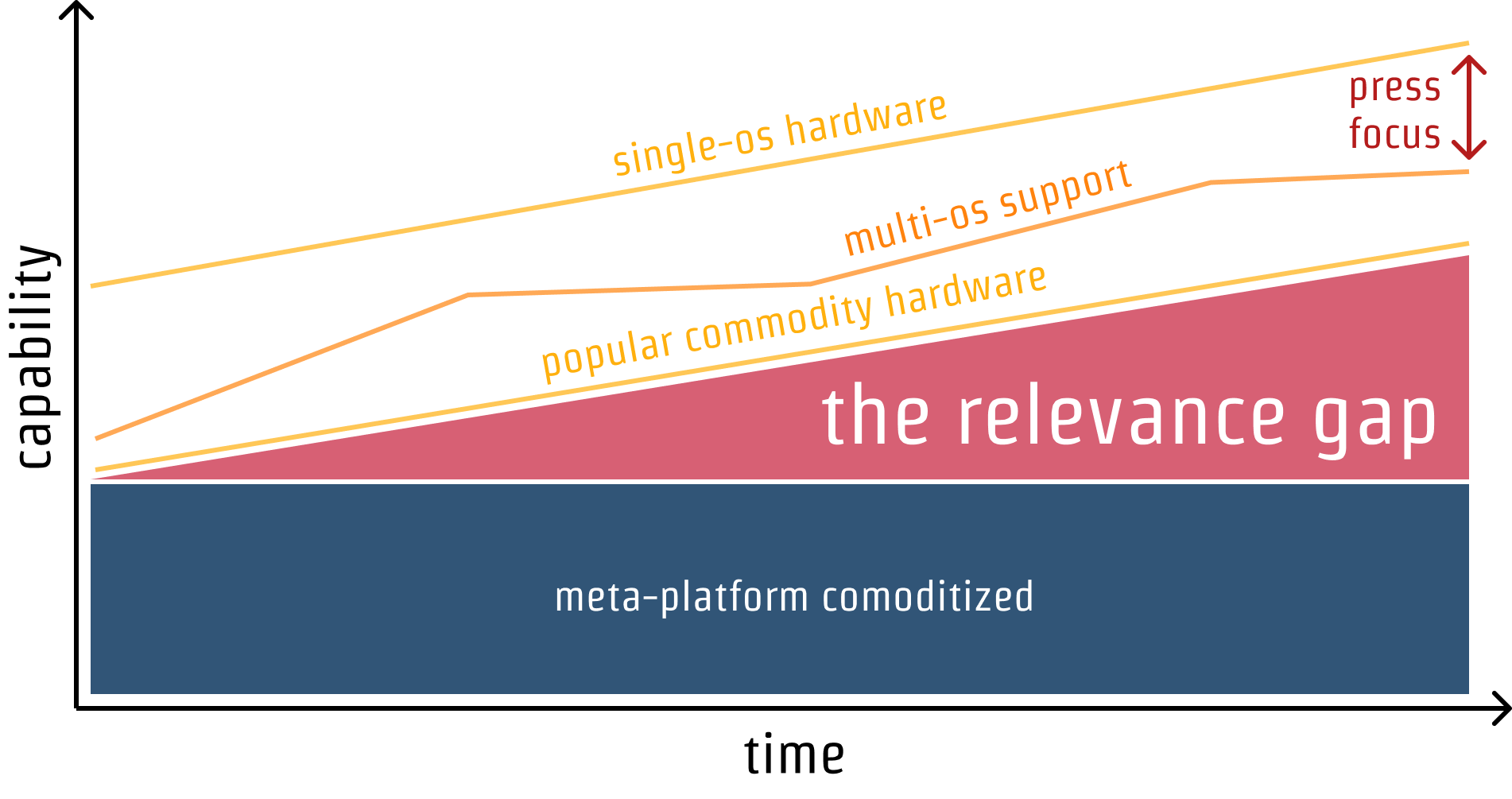

Like other meta-platforms **the web thrives or declines to the extent it can accomplish the lion's share of the things we expect most computers to do**. Platform Adjacency Theory explains how to expand in a principled way and what we risk when natural expansion is prevented mechanisms that prevent effective competition.

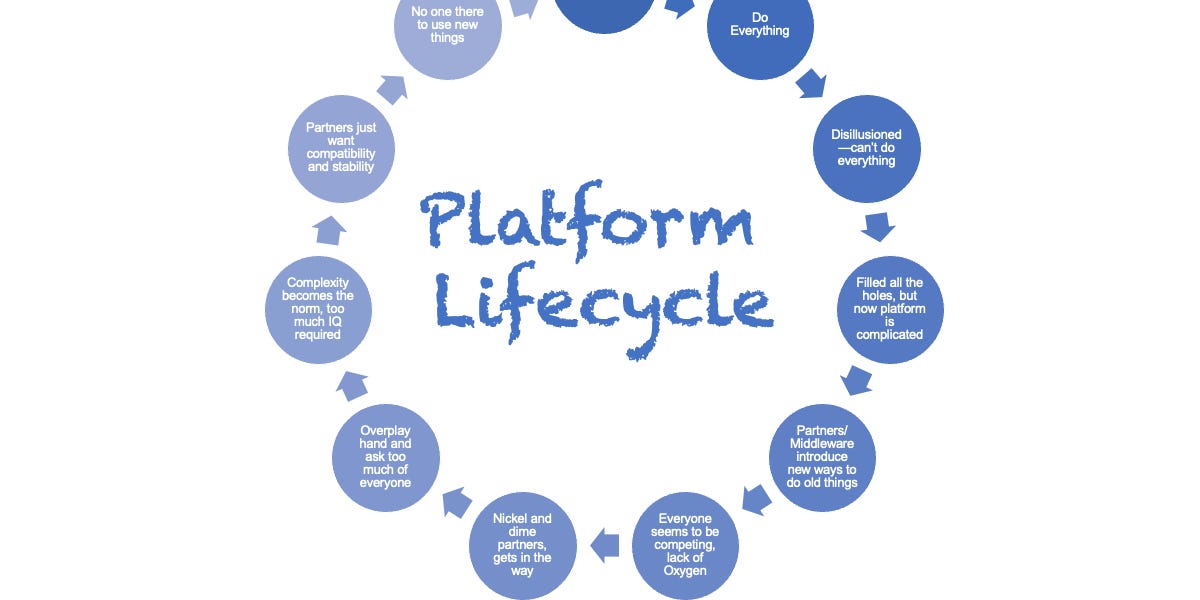

Patterns and Practices in the Creation, Rise, and Fall of Platforms

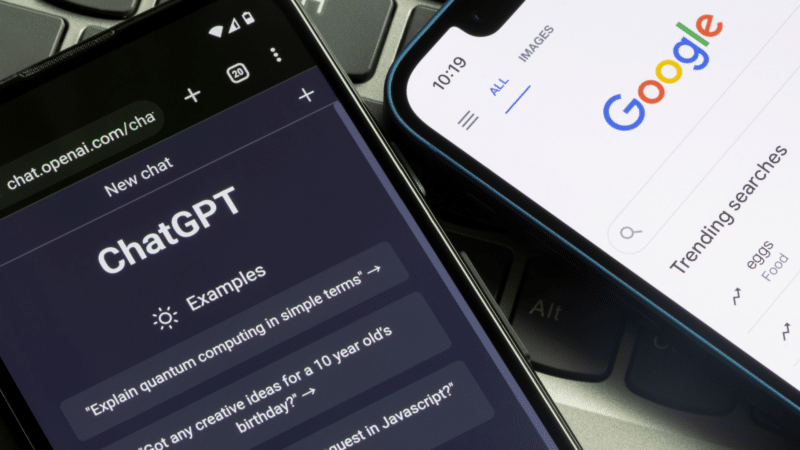

OpenAI today announced its support of new third-party plugins for ChatGPT, and it already has Twitter buzzing about the company's potential platform play.

The secrets of Zoom, Amazon, and Apple products.

It’s a place for obsessives to buy, sell and geek out over classic cars. The company pops open its hood after 100,000 auctions to explain why.

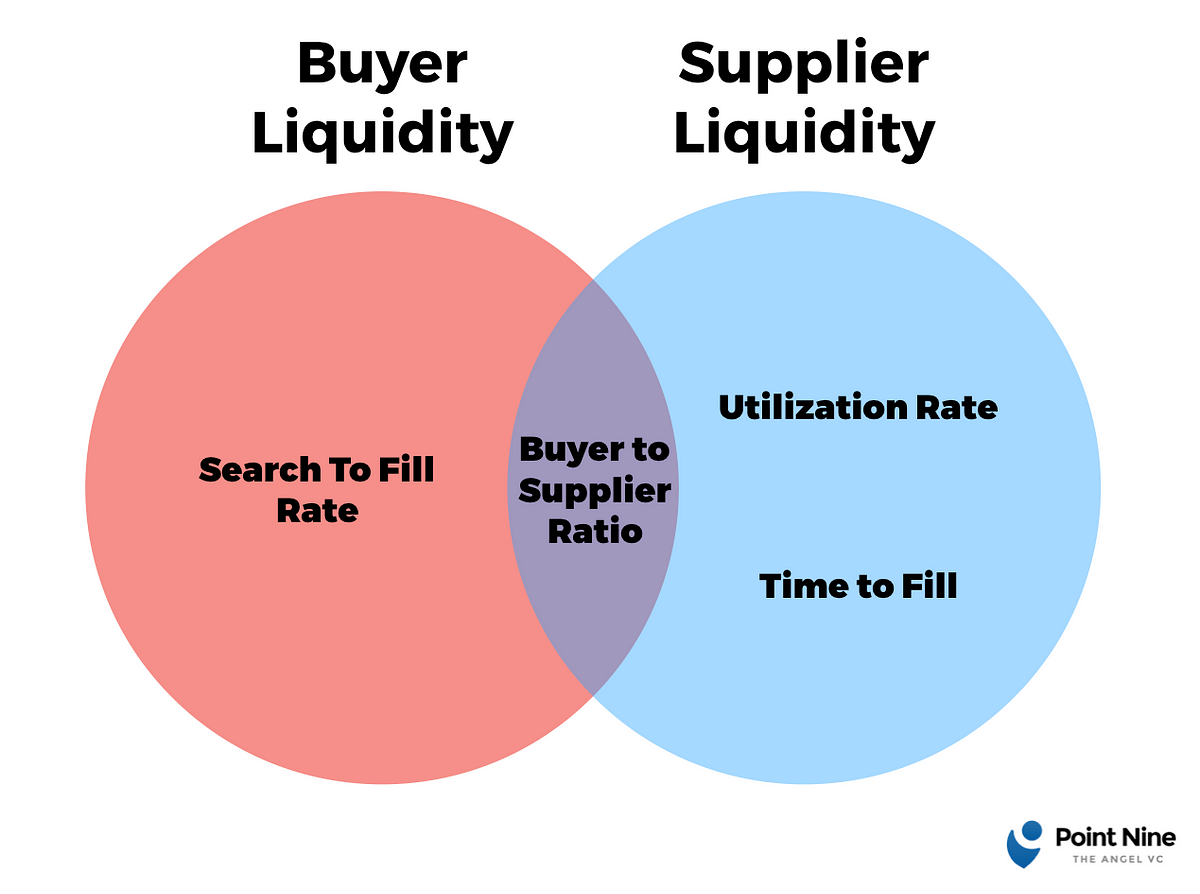

Methodologies for understanding and measuring marketplace liquidity

Who has their hand on the dial? Talk with someone who works at Apple, Amazon, Google, Linkedin, Facebook, etc, and they’ll be happy to give you tips on how to work the platform to your advant…

There is a fallacy in believing your current performance is indicative of future success: Performance is a trailing indicator. Power is a leading one.

This is a book summary of The Art of Profitability by Adrian Slywotzky. Read The Art of Profitability summary to review key ideas and lessons from the book.

Plus! Grills, Ads, Pricing, Drops, Movies, Diff Jobs

The most significant bottleneck in the adoption of healthcare technology to date has been distribution. Over the last decade, generations of digital health companies have struggled to reach escape velocity—not because their products and services weren’t transformative, but because they failed to find an executable path for sustainable distribution and value capture. Some of that...

Guest One key risk facing marketplace operators is the threat of disintermediation, when a buyer chooses to work directly with a seller and bypasses your platform. Through our experience investing in several pioneering marketplace companies, we've seen a handful of clever ways to fight this.

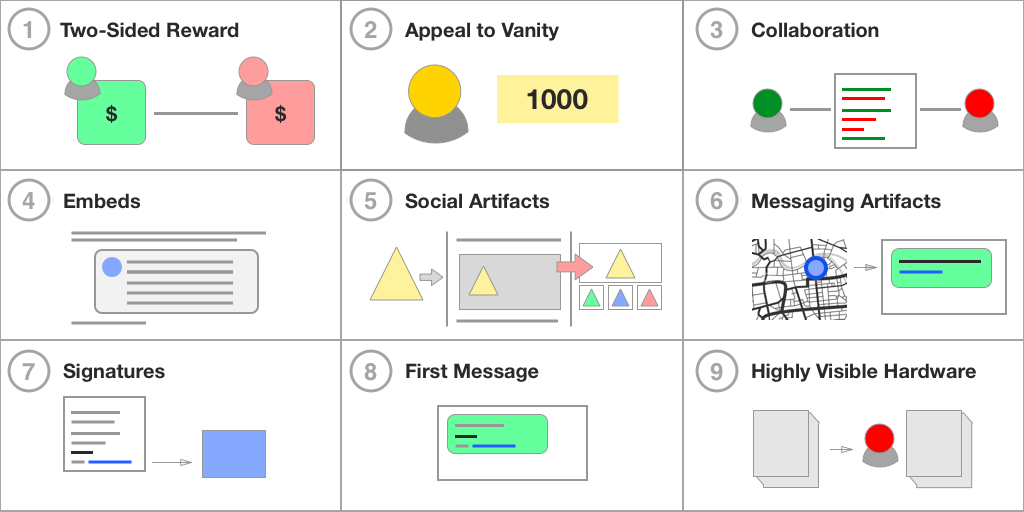

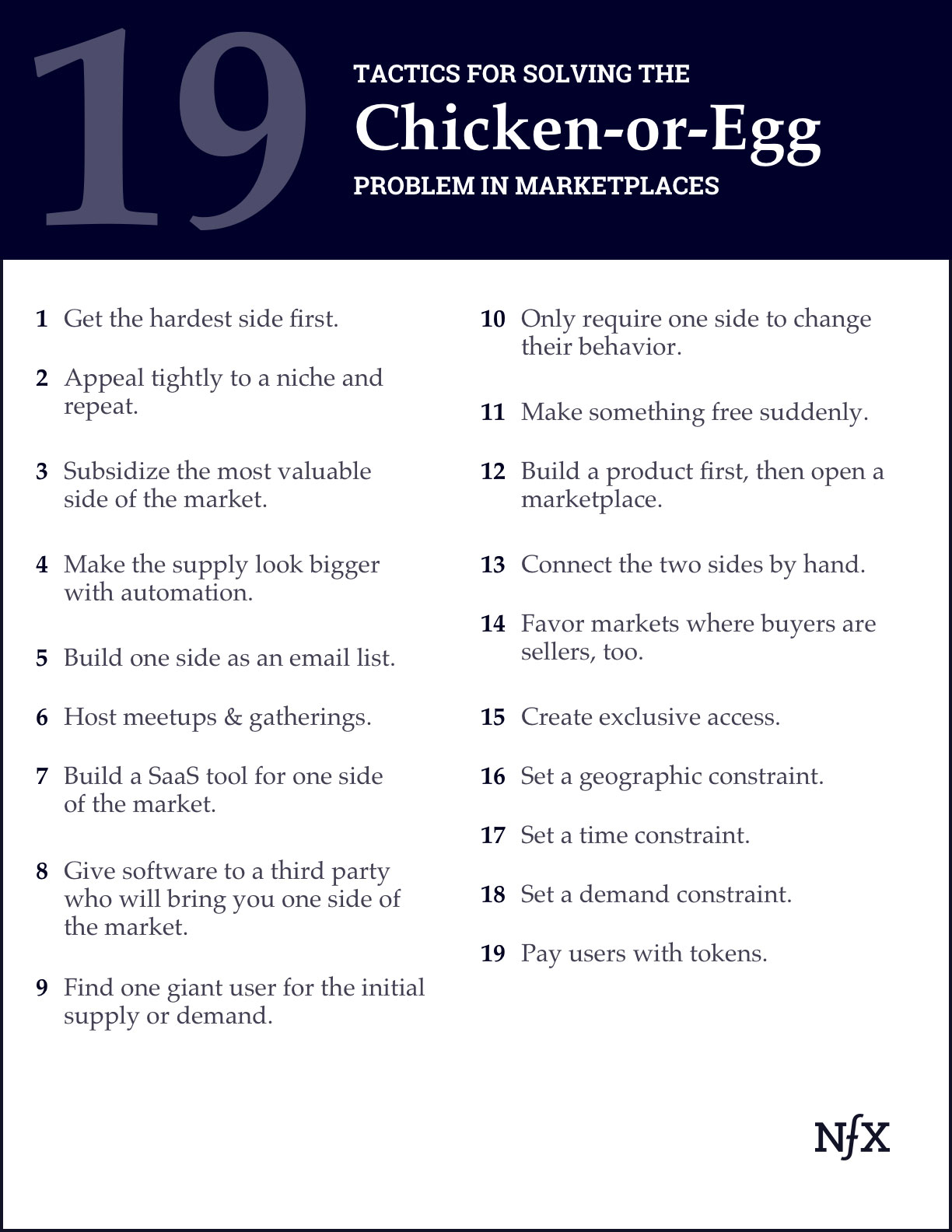

Building a two-sided market is probably the hardest thing you can build as an entrepreneur. It's so hard that a few weeks ago, I organized a Marketplace

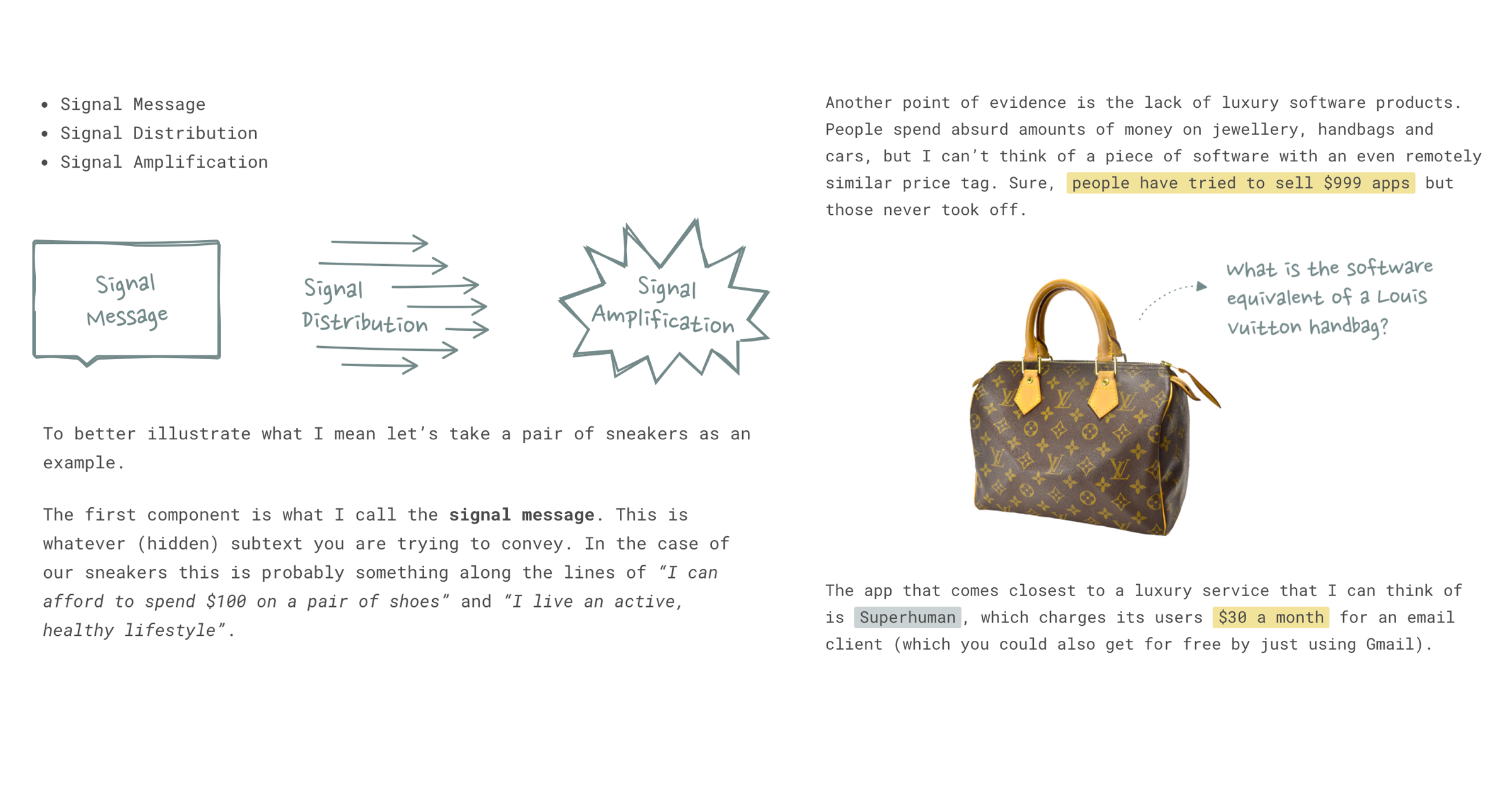

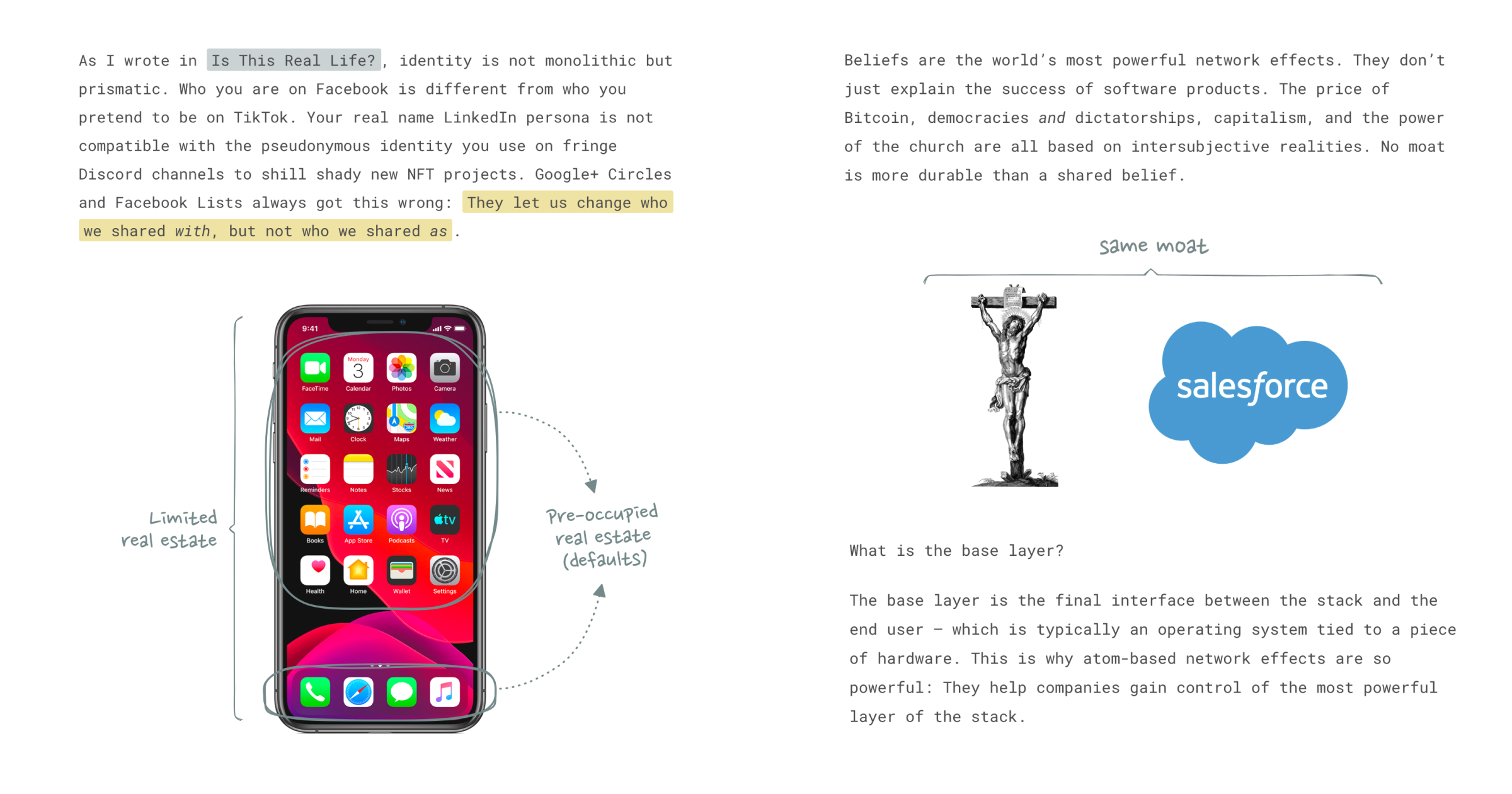

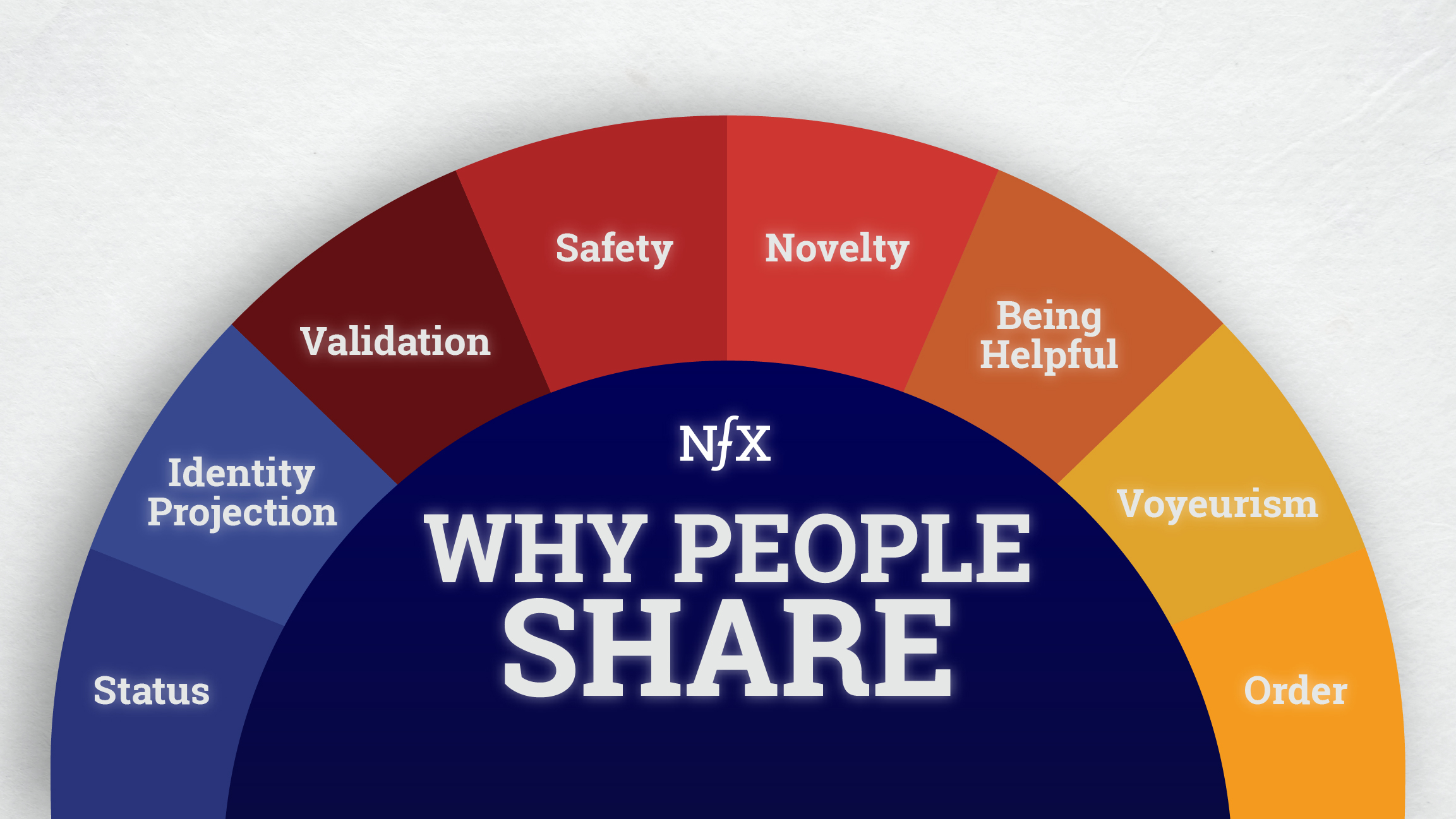

01 Intro One of the best books I have read in the last few years is The Elephant in the Brain by Robin Hanson and Kevin Simler. The book makes two main arguments: a) Most of our everyday actions can be traced back to some form of signaling or status seeking b) Our brains deliberately hi

Insights and Resources for Tech Entrepreneurs

The rise of on-demand marketplaces has brought with it varied business models, across number of industries. This framework tries to explain how a marketplace’s vertical impacts its business m…

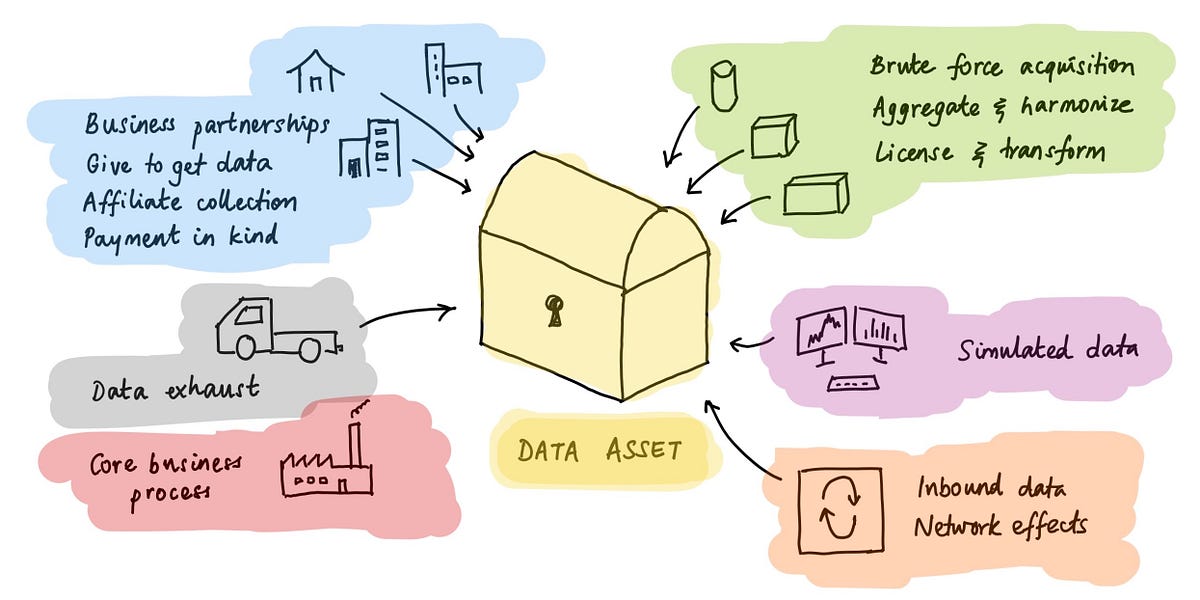

A technology advantage isn’t enough to build an enduring enterprise SaaS company because at the core, all SaaS software share the same architecture. A relational database stores data and a web site presents the data. This is true for CRM (Salesforce), marketing automation (Marketo), email (Exchange), content management systems (Sharepoint) and so on. Because SaaS apps use standard databases, engineers can easily transfer the data from one database to another. I’m greatly simplifying here because differences in architecture may exist, but in principle it’s simple to extract, transform and load data from one relational database into another.

New products change what we buy, but new platforms have much broader effects.

Each day on Tech Twitter, we get up in the morning, open up the website, and then go see what it is we’re mad about. A few days ago, it was this: The concept of “pay to get a better place in l…

Ride-sharing is a winner-take-all market that depends on controlling demand more than it does supply.

Raise a glass of bubbly to the count of Champagne.

Probably not the ones you think.

Building a better mousetrap isn’t enough.

In the furiously competitive world of tech startups, where good entrepreneurs tend to think of comparable ideas around the same time and "hot spaces" get crowded quickly with well-funded hopefuls, competitive moats matter more than ever. Ideally, as your startup scales, you want to not only be able

Goods versus Services: The next trillion dollar opportunity Marketplace startups have done incredibly well over the first few decades of the internet, reinventing the way we shop for goods, but less so for services. In this essay, we argue that a breakthrough is on its way: The first phase of the internet has been...

Perhaps the most egregious is a failure of imagination.

Companies are a sequencing of loops. While it’s possible to stumble into an initial core loop that works, the companies that are successful in the long term are the ones that can repeatedly find the next loop. However, this evolution is poorly understood relative to its existential impact on a company’s trajectory. Figma is a … Continue reading Why Figma Wins →

Since Benchmark’s investment in Ebay 15 years ago, we have been fascinated by online marketplaces. Entrepreneurs accurately recognize that the connective tissue of the Internet provides an opportunity to link the players in a particular market, reducing friction in both the buying and selling experience. For example, my car tax check is an online platfrom that allows you to book a slot for a complete history and guidance of your car taxes and other details. The arrival of the smartphone amplifies these opportunities, as the Internet’s connective tissue now extends deeper and deeper into an industry with the participants connected…

The same-day cancellation rate likely includes subscribers who only wanted access to one article, or who felt the full paid experience was lacking after a quick look around. New data suggests some just really hate the idea of auto-renewal.

You can't build a weatherproof company if you don’t constantly gather challenges to your thinking, learn to listen to them, and then test those learnings out.

This week, we published the a16z Marketplace 100, a ranking of the largest and fastest-growing consumer-facing marketplace startups and private companies. See the full index and analysis here, and visit a16z.com/marketplace-100 for more marketplace-related content. From a business standpoint, we know marketplaces are challenging to scale; from a conversational perspective, we’ve come to realize they’re...

Innovation is not a binary choice between the old and the new. The answer is often to contribute to evolution — by making parts that work…

Centralized planning is no longer required.

In many ways, online marketplaces are the perfect business model. Since they facilitate transactions between independent suppliers and customers rather than take possession of and responsibility for the products or services in question, they have inherently low cost structures and fat gross margins. They are highly defensible once established, owing to network effects. Yet online marketplaces remain extremely difficult to build, say Andrei Hagiu of Harvard Business School and venture capitalist Simon Rothman of Greylock Partners. Most entrepreneurs and investors attribute this to the challenge of quickly attracting a critical mass of buyers and suppliers. But it is wrong to assume that once a marketplace has overcome this hurdle, the sailing will be smooth. Several other important pitfalls can threaten marketplaces: growing too fast too early; failing to foster sufficient trust and safety; resorting to sticks, rather than carrots, to deter user disintermediation; and ignoring the risks of regulation. This article draws on company examples such as eBay, Lending Club, and Airbnb to offer practical advice for avoiding those hazards.

“A startup is a company designed to grow fast. Being newly founded does not in itself make a company a startup. Nor is it necessary for a startup to work on technology, or take venture fundin…

Last night, Twitter curtailed Meerkat's access to its graph . I saw lots of discussion on Twitter (I'd say this was ironic but it's just expected) about why and whether Twitter should just compete on its own merits with its recent acquisition Periscope . Some have termed what happened to Meerkat

Just don’t pretend you’re all on the same side.

Knowledge moats (secret sauces) are one of the most fundamental type of moat in business. They consist of the information, data and…

Five of the 10 most valuable companies in the world today—Apple, Alphabet, Amazon, Facebook, and Microsoft—derive much of their worth from their multisided platforms, which facilitate interactions or transactions between parties. Many MSPs are more valuable than companies in the same industries that provide only products or services: For instance, Airbnb is now worth more than Marriott, the world’s largest hotel chain. However, companies that weren’t born as platform businesses rarely realize that they can—at least partially—turn their offerings into one, say the authors. And even if they do realize it, they often wander in the dark searching for a strategy to achieve this transformation. In this article, Hagiu and Altman provide a framework for doing so. They lay out four specific ways in which products and services can be turned into platforms and examine the strategic advantages and pitfalls of each: (1) opening the door to third parties; (2) connecting customers; (3) connecting products to connect customers; and (4) becoming a supplier to a multisided platform. These ideas can be used by physical as well as online businesses.

Done right, companies competing as a multi-sided platform often win with higher percentage profit margins than those enjoyed by traditional resellers. The problem is that a winning strategy is far from self-evident. Professor Andrei Hagiu explains the potential and the pitfalls for life as an MSP.

Clayton Christensen claims that Uber is not disruptive, and he’s exactly right. In fact, disruption theory often doesn’t make sense when it comes to understanding how companies succeed …

Snapchat is on the verge of conquering the toughest messaging market in the world: the United States. The way they did it is by laddering-up.

Startups fail because they run out of money before achieving product-market fit. NFX Managing Partner Gigi Levy-Weiss identifies 10 places to look for product-market fit in startup ideas.

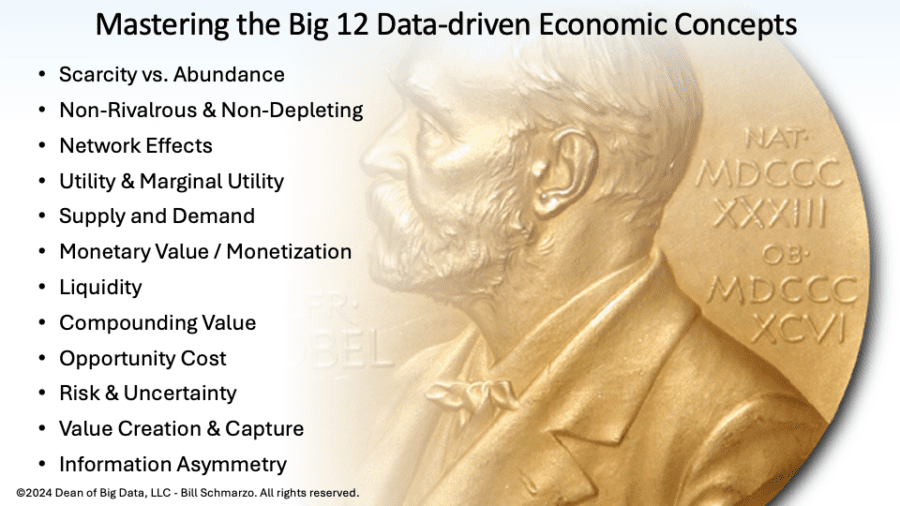

When I was in college I took two intro economics courses: macroeconomics and microeconomics. Macro was full of theories like “low unemployment causes inflation” that never quite stood u…

Editor's Note: The following is a guest post by Simon Rothman of Greylock Partners. Rothman is particularly passionate about Marketplace technology (Etsy, Kickstarter, Airbnb, etc) and how to garner success in that category. Marketplaces are endemic to the consumer web: Largely popularized by eBay, we've recently seen quite a few variations on the theme, like young guns Etsy, oDesk, Airbnb, and Kickstarter. Old or new, the two elements that bind all marketplaces are network effects (a good thing) and the chicken-and-egg problem (not such a good thing).

Data has long been lauded as a competitive moat for companies, and that narrative’s been further hyped with the recent wave of AI startups. Network effects have been similarly promoted as a defensible force in building software businesses. So of course, we constantly hear about the combination of the two: “data network effects” (heck, we’ve...

Managed marketplaces have been one of the hottest categories of venture investment over the past several years. They garner a lot of press because the consumer experiences are often radically different than what’s previously been available in the market. But there is confusion over what a true “managed” marketplace is. It’s fairly easy to spot if you know what to look for.

By Stephanie Tilenius, an entrepreneur in residence at Kleiner Perkins Caufield & Byers The Wild West of online marketplaces is over. From 1999 until 2006, eBay and Amazon Marketplaces dominated the field, offering platforms that brought buyers and sellers together. But over the last seven years, more than 20 new marketplace [...]

Building on Aggregation Theory, this provides a precise definition of the characteristics of aggregators, and a classification system based on suppliers. Plus, how to think about aggregator regulat…

Building a Marketplace: A Checklist for Online Disruption - Download as a PDF or view online for free

Money is made at chokepoints, and the most valuable chokepoints are operating systems; Amazon is building exactly that with Alexa.

Credit where it's definitely due: this post was inspired by a Twitter conversation with Box CEO Aaron Levie. Don't look now, but something remarkable is happening. Instagram had twelve employees when it was purchased for $700 million; all of its actual computing power was outsourced to Amazon Web Services. Mighty ARM has only 2300 employees, but there are more than 35 billion ARM-based chips out there. They do no manufacturing; instead they license their designs to companies like Apple, who in turn contract with companies like TSMC for the actual fabrication. Nest Labs and Ubiquiti are both 200-employee hardware companies worth circa $1 billion...who subcontract their actual manufacturing out to China.

Because of the Internet realities described by Aggregation Theory a smaller number of companies hold an increasing amount of power. However, an increasing focus on market forces reduces the latitud…

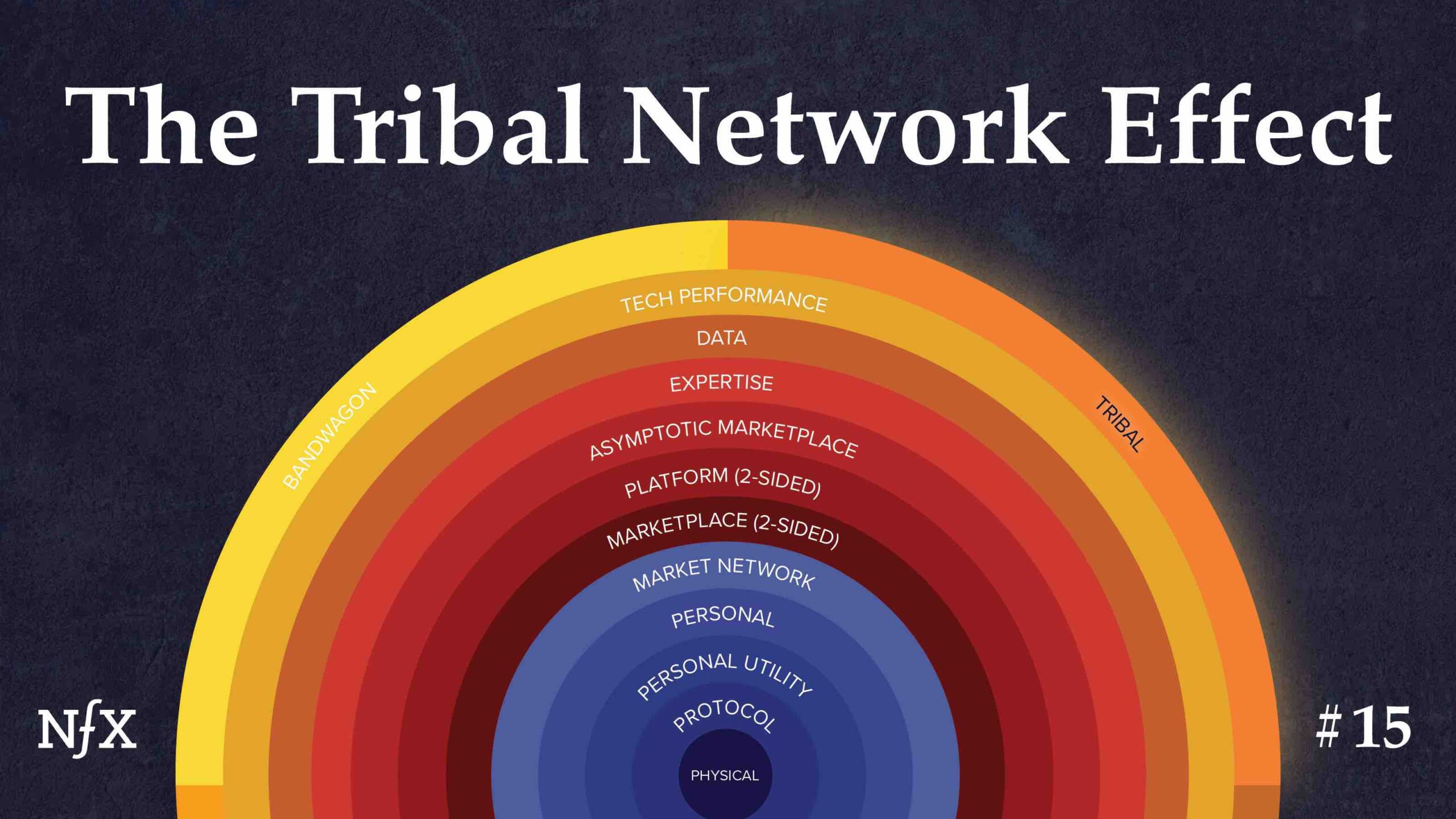

To explore the future of online networks, it's important to note how network effects correlate with value and the factors that make these network effects work in reverse.

Few realize that Uber's core network effects aren't as strong as they seem. At this point, we count no less than 9 additional defensibilities Uber is pursuing to reinforce their core network effect.

Value is created through innovation, but how much of that value accrues to the innovator depends partly on how quickly their competitors imitate the innovation. Innovators must deter competition to…

Zapier has 3M+ users and generates $125M in ARR. At a $5B valuation, its fast-growing horizontal platform is unable to meet the demands of all of its customers. The increase of underserved Zapier customers presents an opportunity.

How data businesses start, and how they keep going, and growing, and growing.

Forking over another $5 a month is getting pretty old.

In early and developing markets, selling complete products is often a superior go to market strategy, rather than selling an innovation in a layer in the stack. This is true for five reasons. First, for early customers to generate value from a novel technology, that technology must solve a business problem completely. End-to-end products do that. Layers in the stack don’t. They optimize existing systems. In early markets, customers want to buy a car, not a better camshaft.

Today, we’re sharing the newest social nfx we've identified—the 15th type of network effect: Tribal Network Effects.

A Guide to Reddit, Its Key Competitive Advantages, and How to Unbundle It

Hatching a Design Marketplace from Scratch at Visually

The world’s most successful companies all exhibit some form of structural competitive advantage: A defensibility mechanism that protects their margins and profits from competitors over long periods of time. Business strategy books like to refer to these competitive advantages as “economic moats”.

Building white label products is more profitable than starting a new design every time. Learn how to properly implement white labelling.

Business-to-business marketplaces are among ecommerce's leading growth trends, yet many industries remain under-served, especially for raw materials.

Western platforms are still way behind in giving creators (and fans) the tools to succeed.

Suppose you’ve started a company that’s creating a category. Most buyers in your target market haven’t heard of your business or the kind of software you sell. There’s no budget line item, no Magic Quadrant, no G2 High Performer Award, no conference. You have an idea, a vast blue ocean in front of you, and a pile of greenbacks stashed in a bank account from your last financing. Do you spend aggressively to create the category or conserve capital, knowing education will take time?

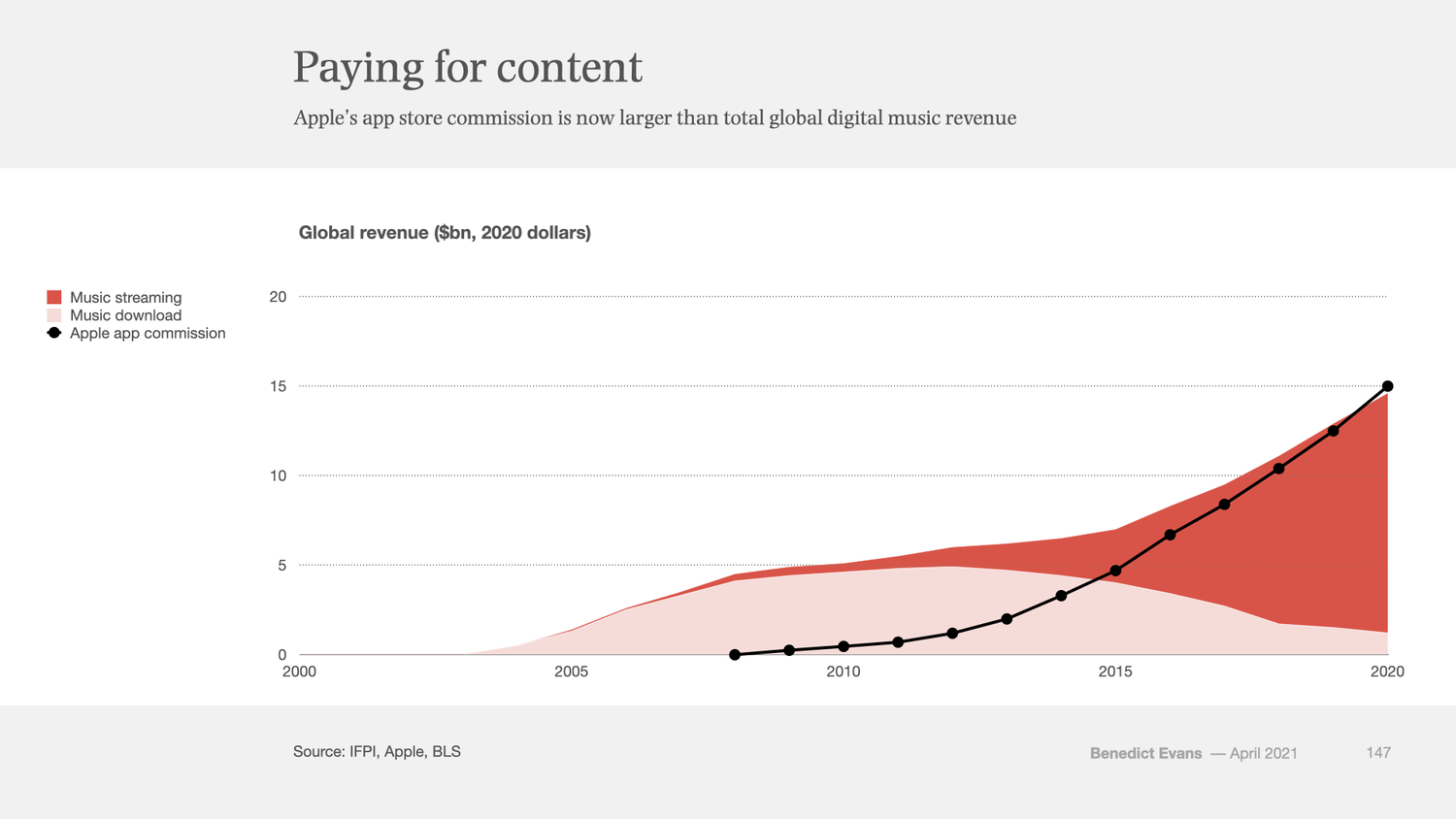

20 years ago Apple seized music, and turned it into a lever for its broader business. It failed to do the same to TV, and lost control of music, but won massively in games, where it now makes more money than the entire global digital music industry. Now, perhaps, it’s looking at advertising.

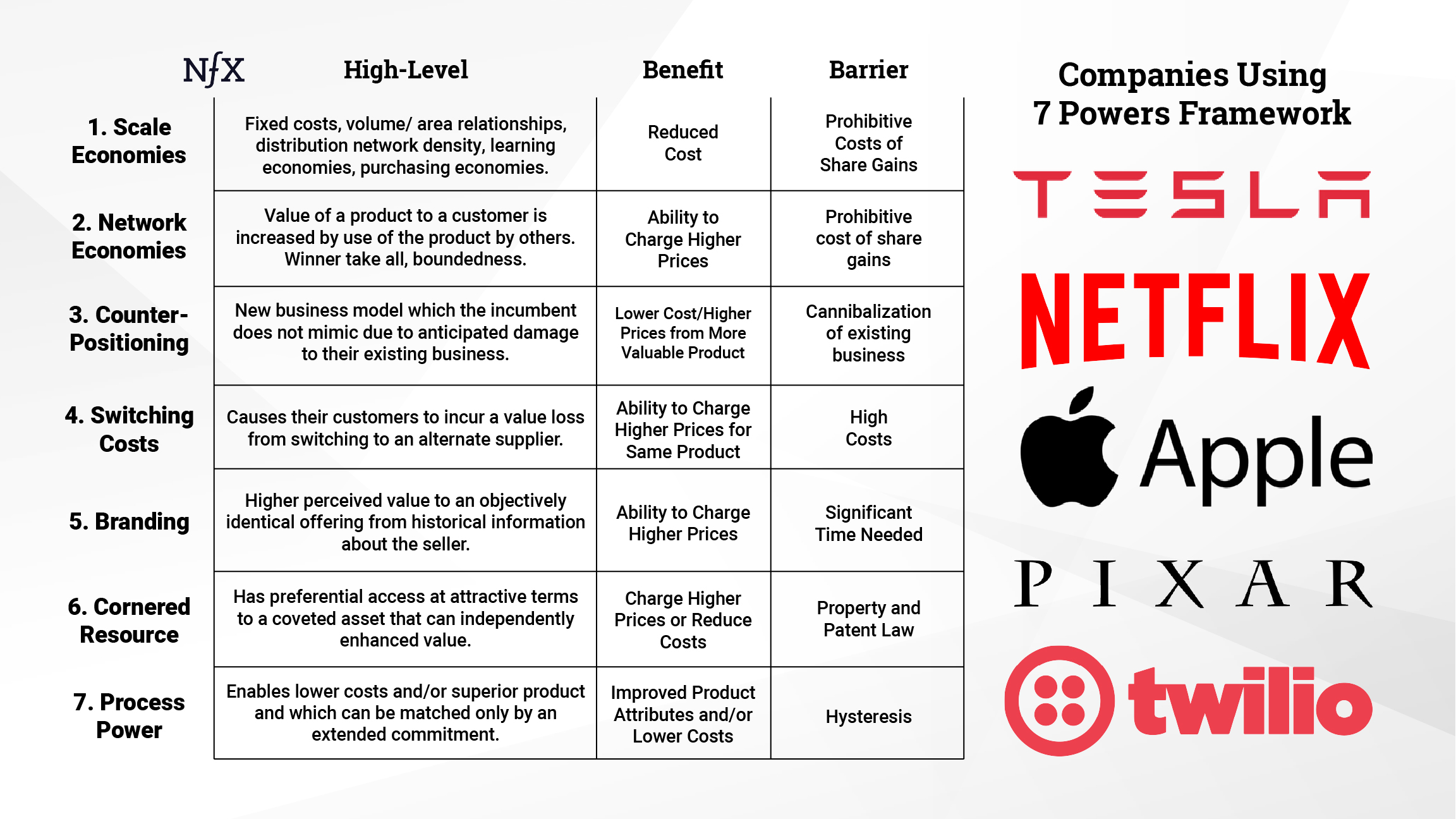

Summary Helmer sets out to create a simple, but not simplistic, strategy compass. His 7 powers include: scale economics, switching costs, cornered resource, counter positioning, branding, network effects, and process. Key Takeaways Strategy: the study of the fundamental determinants of potential business value The objective here is both positive—to reveal the foundations of business value—and […]

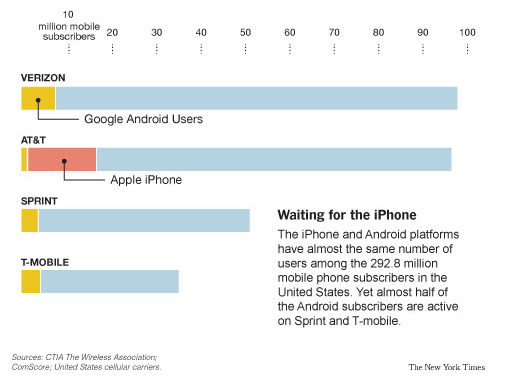

Distribution on the Internet is free; what matters is controlling demand. AT&T and Verizon didn’t understand the distinction.

There are all kinds of arguments to make about the App Store, and nearly all of them are good ones; that’s why the best solution can only come from Apple.

Spotify’s new subscription podcast offerings embrace the open ecosystem of podcasts in multiple ways.

After more than 12.000 Github stars, two successful open-source projects, a failed open-core company, and a successful prop-tech one*, I feel more than ever that giving your product away for free is just as bad a business strategy as it sounds.

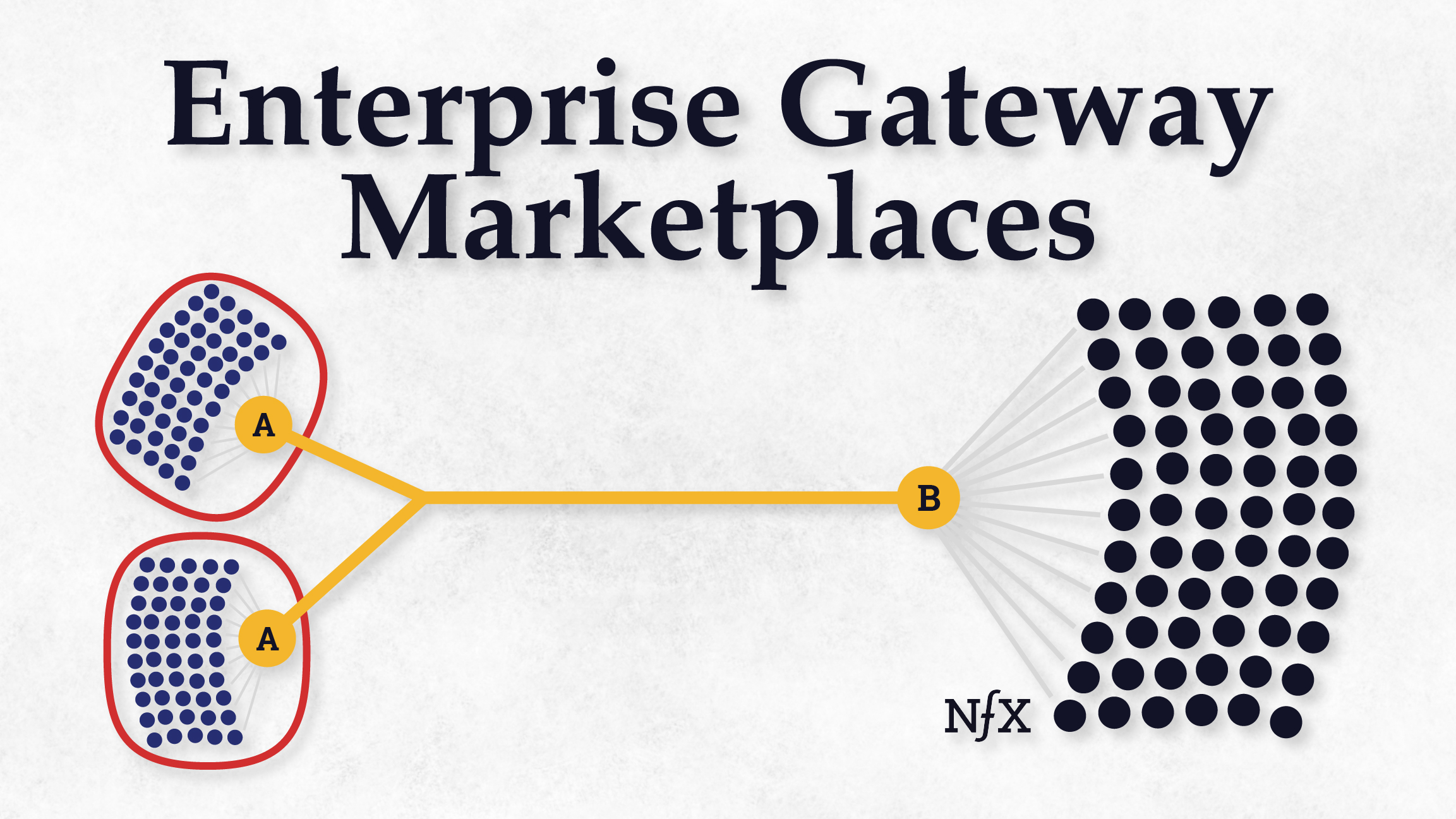

The marketplace revolution is still just beginning and the enterprise gateway is the newest type of marketplace.

How Figma and Canva are taking on Adobe—and winning In 2010, Photoshop was ubiquitous. Whether you were editing a photo, making a poster, or designing a website, it happened in Photoshop. Today, Adobe looks incredibly strong. They’ve had spectacular stock performance, thanks to clear-eyed management who’ve made bold bets that have paid off. Their transition … Continue reading How to Eat an Elephant, One Atomic Concept at a Time →

A classic pattern in technology economics, identified by Joel Spolsky, is layers of the stack attempting to become monopolies while turning other layers into perfectly-competitive markets which are commoditized, in order to harvest most of the consumer surplus; discussion and examples.

This article originally appeared on Fortune.com.

A few factors I’ve seen pull winners off the podium…

Dave Chappelle has a new special about his old show that includes fundamental lessons about how the Internet has changed the content business.

Platforms can build a business, but the businesses have to pay.

Paid groups, bespoke social networks, and the meaning of community for internet-native businesses.

Our top ecommerce builders are based on objective performance data, feature set & value. Check out ecommerce platforms now.

In 2019, long before the outbreak of COVID-19, many lower gross margin tech companies were not being well-received by the public markets, and an excessive spotlight was cast by many on company gross margins. In the present moment, that attention has only grown for both public and private companies. We’ve observed a bifurcation in the...

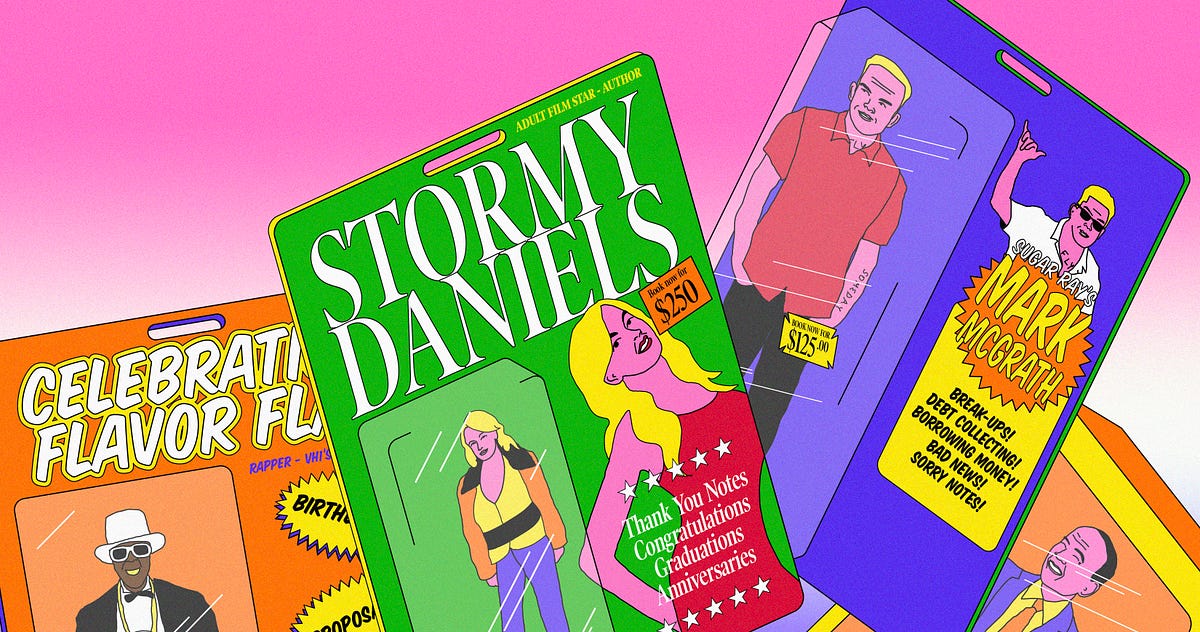

Inside the surreal and lucrative two-sided marketplace of mediocre famous people

If you polled a cross-section of companies about their most important software, accounts payable and accounts receivable software would likely not rank high on their lists. It’s the kind of unglamorous, workhorse software that’s necessary, but often taken for granted. Then, late last year, the cloud-based b2b payments company Bill.com went public—and became the second...

There might be no more beloved image of the American entrepreneurial spirit than that of neighborhood kids who open a sidewalk lemonade stand on a hot summer day. With a little bit of “capital” from their parents — lemons, water, sugar, a card table, some markers and paper — hard work, and good sidewalk placement,...

One man's desire to create the perfect gun profoundly changed manufacturing.

Apple TV+ is cheap and barren. HBO Max is expensive and cheapening their brand. Everyone is confused.

Tokyo Ohka Kogyo Co., JSR Corp. and Shin-Etsu Chemical Co.: Three seemingly inconspicuous companies suddenly came into the spotlight in early July when Japan announced it would slap tightened export controls to South Korea on three key chemicals — photoresists, fluorinated polyimide and hydrogen fluoride...

How does Netflix get away with releasing its movies in theaters on the same day it makes them available for “free” on its streaming platform? The answer is that Netflix is pursuing a fundamentally different business model from everyone else in the industry. Netflix is not in the business of selling individual movies to many different customers. Instead, it’s in the business of selling many different movies to individual customers—in bundles. Bundled subscriptions allow Netflix to practice a different kind of price discrimination from the movie studios. The company doesn’t have to figure out how much a consumer values any individual movie on the service. The bundle does that for them—very profitably.

A new battle is brewing to be the default of every choice we make. As modern interfaces like voice remove options, augmented reality…

Bird recently announced a new form factor for micromobility, the Bird Cruiser. It’s a cross between an electric scooter, a bicycle and a…

Amazon is so new, and so dramatic in its speed and scale and aggression, that we can easily forget how many of the things it’s doing are actually very old.

Many of the most consequential projects of the internet era — from Wikipedia to Facebook and bitcoin — have all been predicated on network effects, where the network becomes more valuable to users as more people use it. As a result, we’ve become really good at analyzing and measuring network effects. Whether it’s decreasing customer...

An inquiry into how young people are hanging out on the internet.

Art has always had a strange relationship with copying.

Evernote has been plagued by a series of managerial missteps and failed product launches. The company’s future is far from certain.

There is a story arc of the electric scooter market that took the world by storm in 2018, was second-guessed late in the year and has…

Update 2016-10-18: This tutorial has been updated to reflect the latest version of my stack (now with Drip!). I’ve also updated pricing info (it’s technically a $0 stack now) and screenshots. The original outdated article is archived here. “Just tell me what to do so I can stop

The most successful companies and products of the internet era have all been predicated on the concept of network effects, where the network becomes more valuable to users as more people use it. This is as true of companies like Amazon and Google as it is for open source projects like Wikipedia and some cryptocurrencies....

Shopify App Store: customize your online store and grow your business with Shopify-approved apps for marketing, store design, fulfillment, and more.

Shopify is partnering with a network of more than 20,000 app developers and agency partners to build profitable businesses.

Why every purchase is a performance “I am not who you think I am; I am not who I think I am; I am who I think you think I am.” — Thomas Cooley About a month ago, I published what has become my mo…

The Moat Map describes the correlation between the degree of supplier differentiation and the externalization (or internalization) of a company’s network effect.

-->

prodmgmt/ecommerce links

categories:

tags: ecommerce prodmgmt

date: 26 Mar 2025

slug:raindrop-prodmgmt-ecommerce

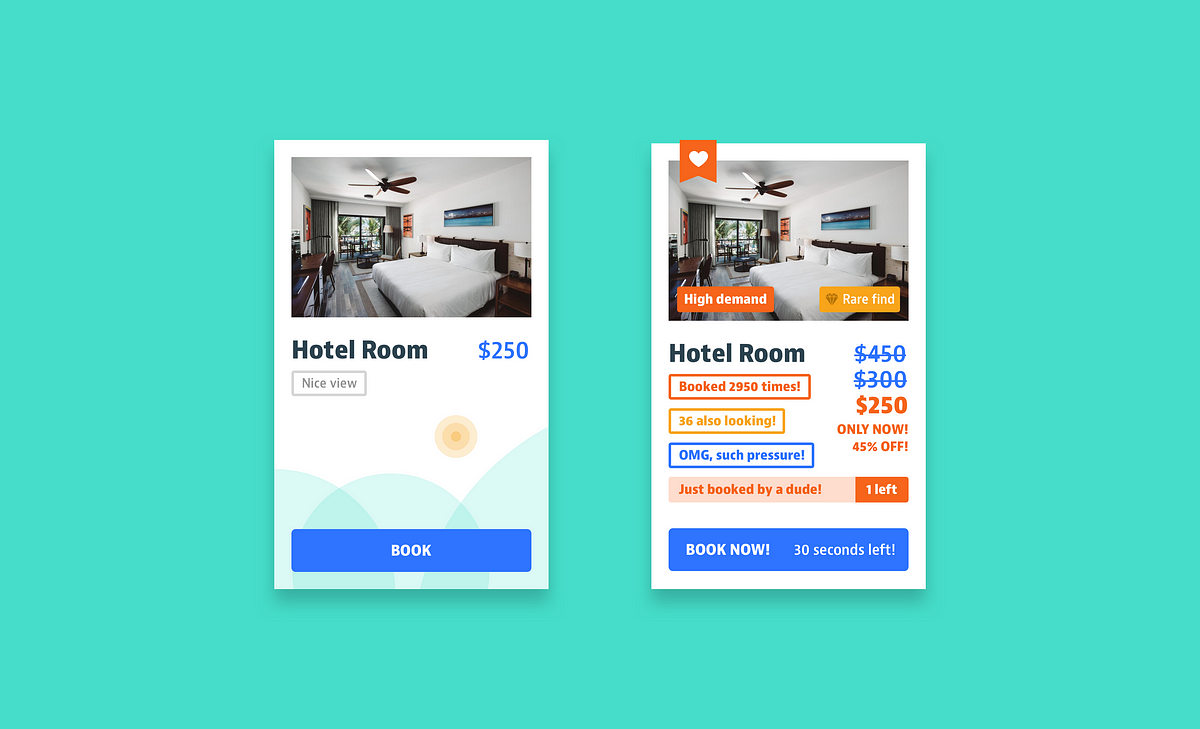

Done well, loyalty programs focus on psychological impact and behavioral science — not discounts alone.

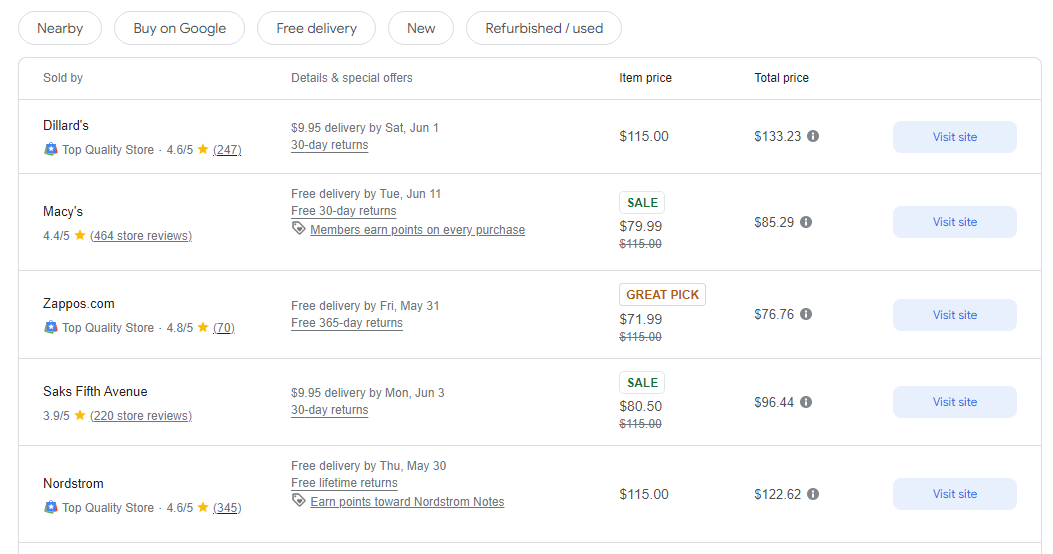

How Google figures out the price of a product across websites

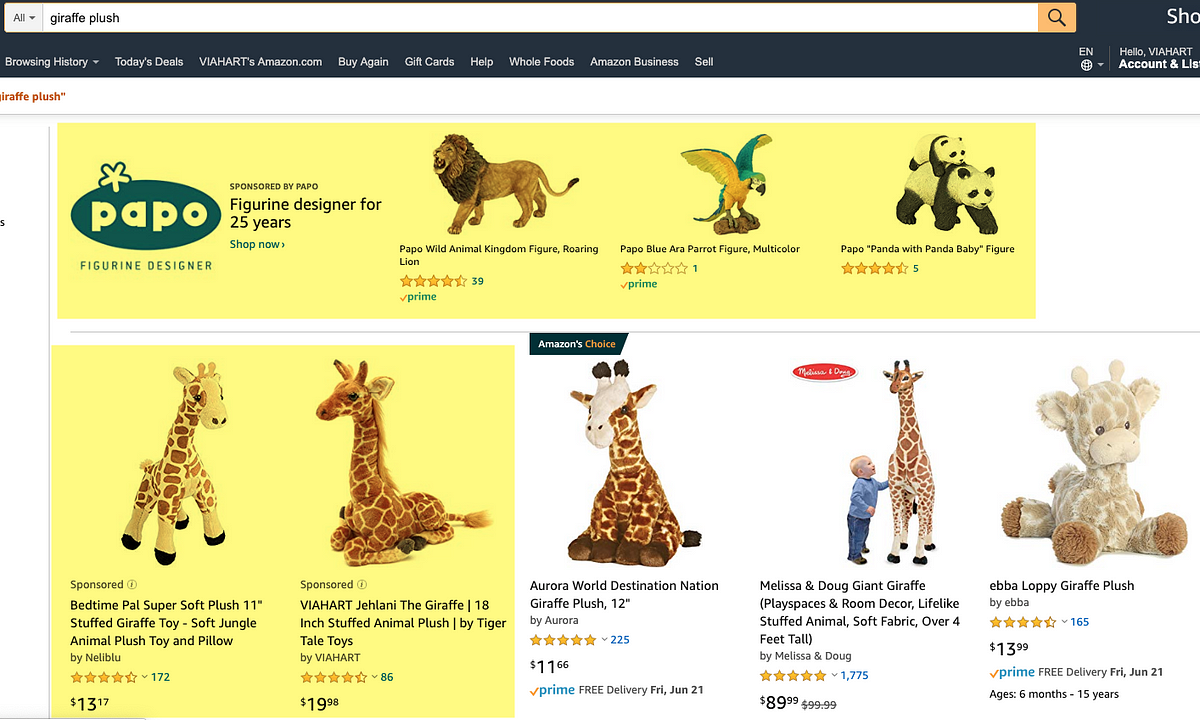

Amazon's marketplace accounts for most of the revenue for thousands of merchants. Therein lies the fear.

Staff went undercover on Walmart, eBay and other marketplaces as a third-party seller called ‘Big River.’ The mission: to scoop up information on pricing, logistics and other business practices.

At most small and medium-sized e-commerce retailers, prices are typically set and updated in an ad hoc fashion without one clear owner. The process often starts by using a gross margin target, followed by some comparison with competitors, and then some adjustments from there. Many of these retailers would quickly admit that this isn’t an optimal strategy, and that they are likely leaving money on the table — and they’re often right. The authors’ experience with price testing has shown that there is actually a significant amount of money left on the table when pricing is left un-optimized.

Identify and target personas of keywords, competitors, Reddit discussions, and more.

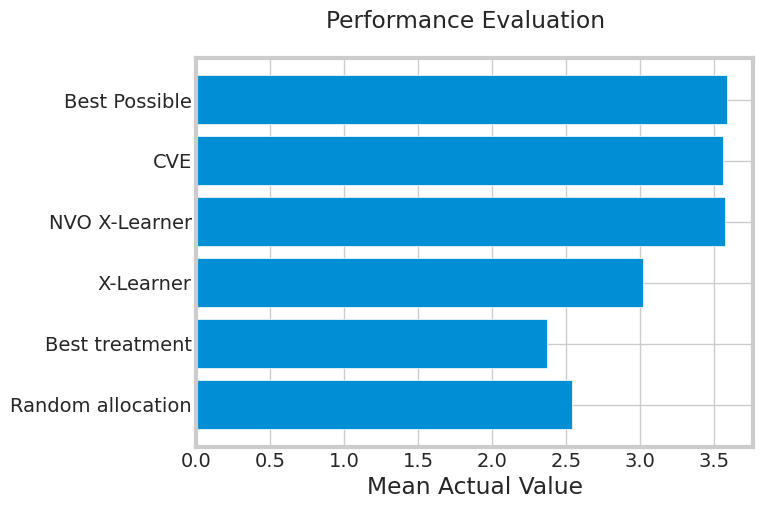

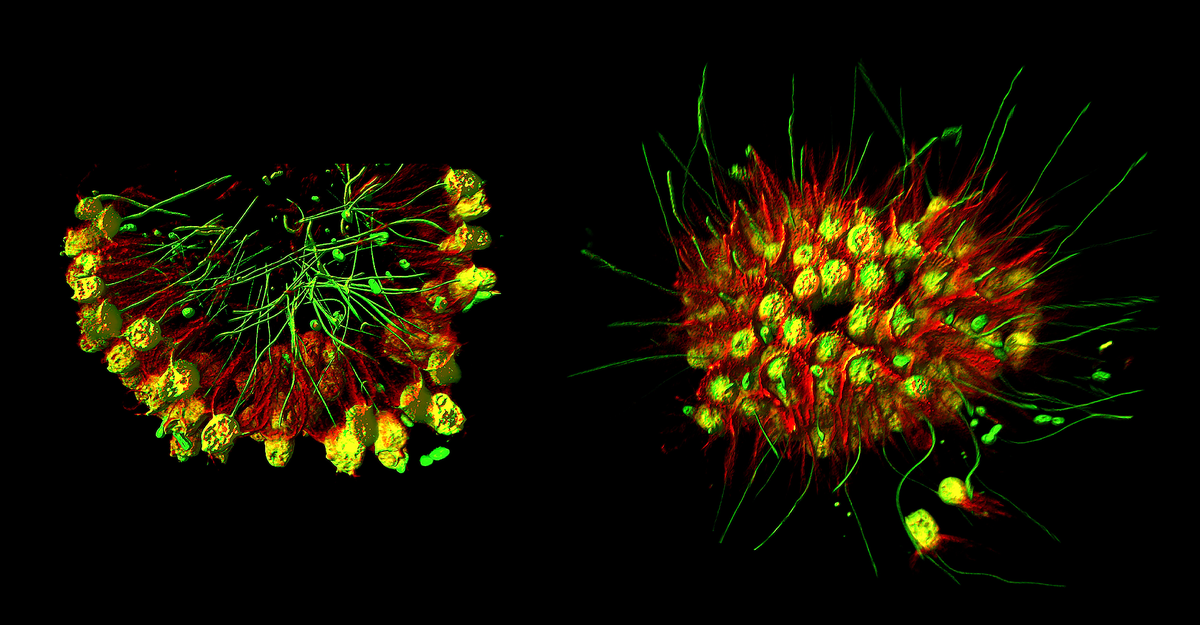

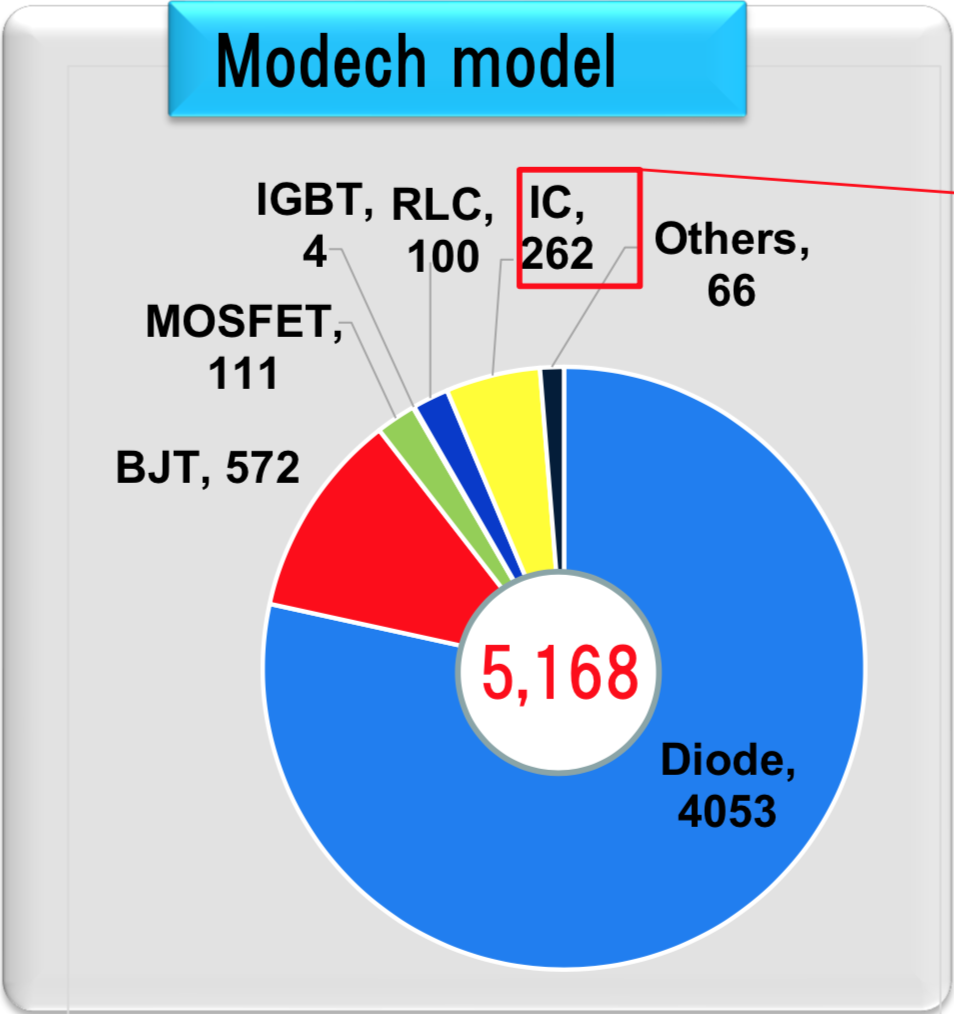

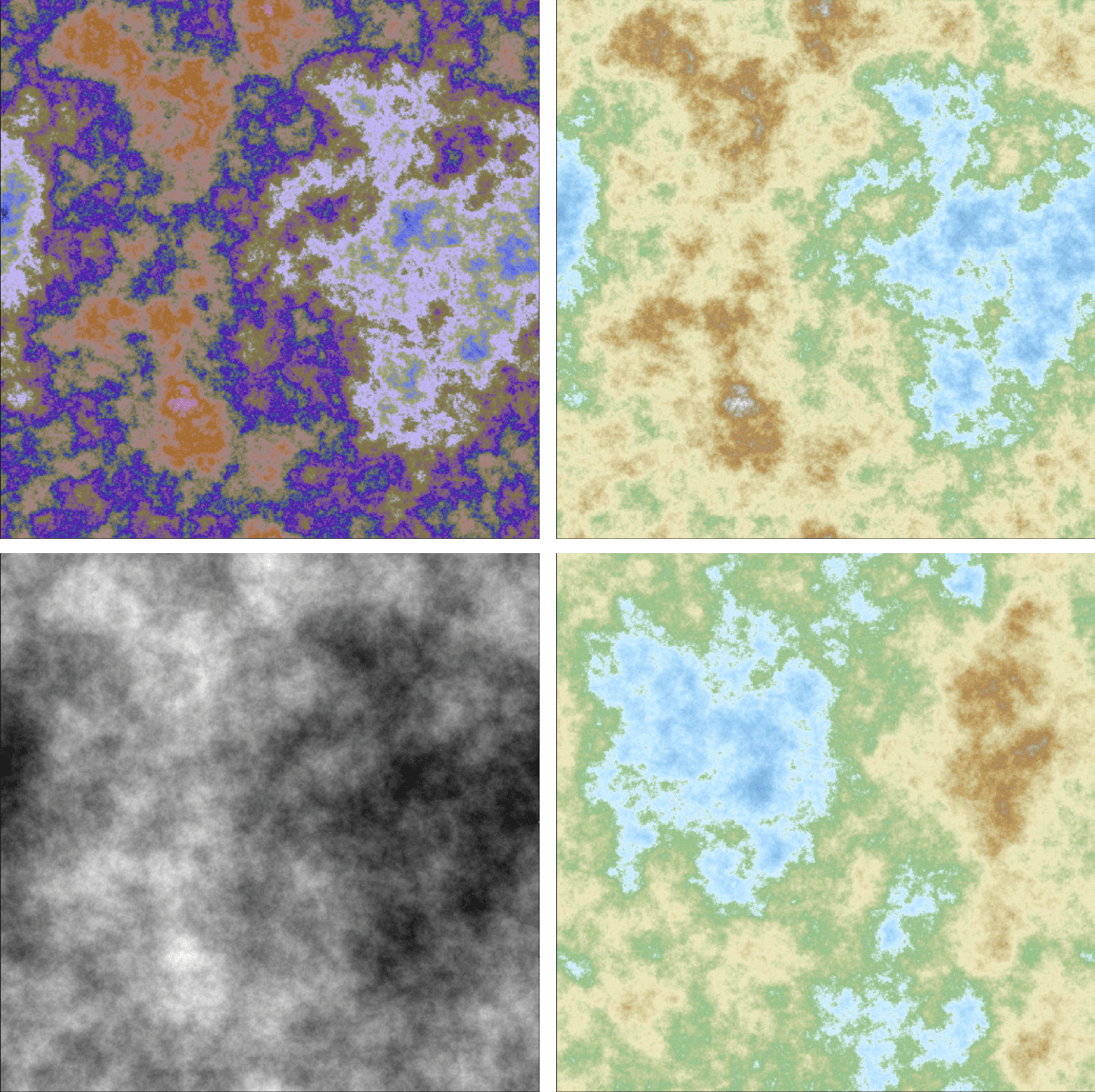

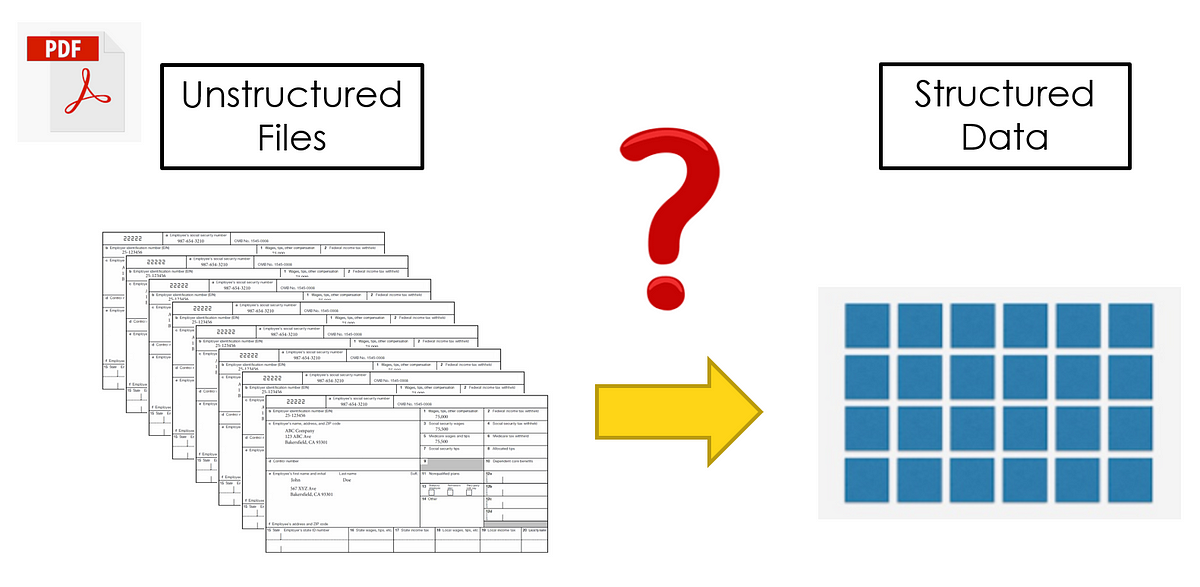

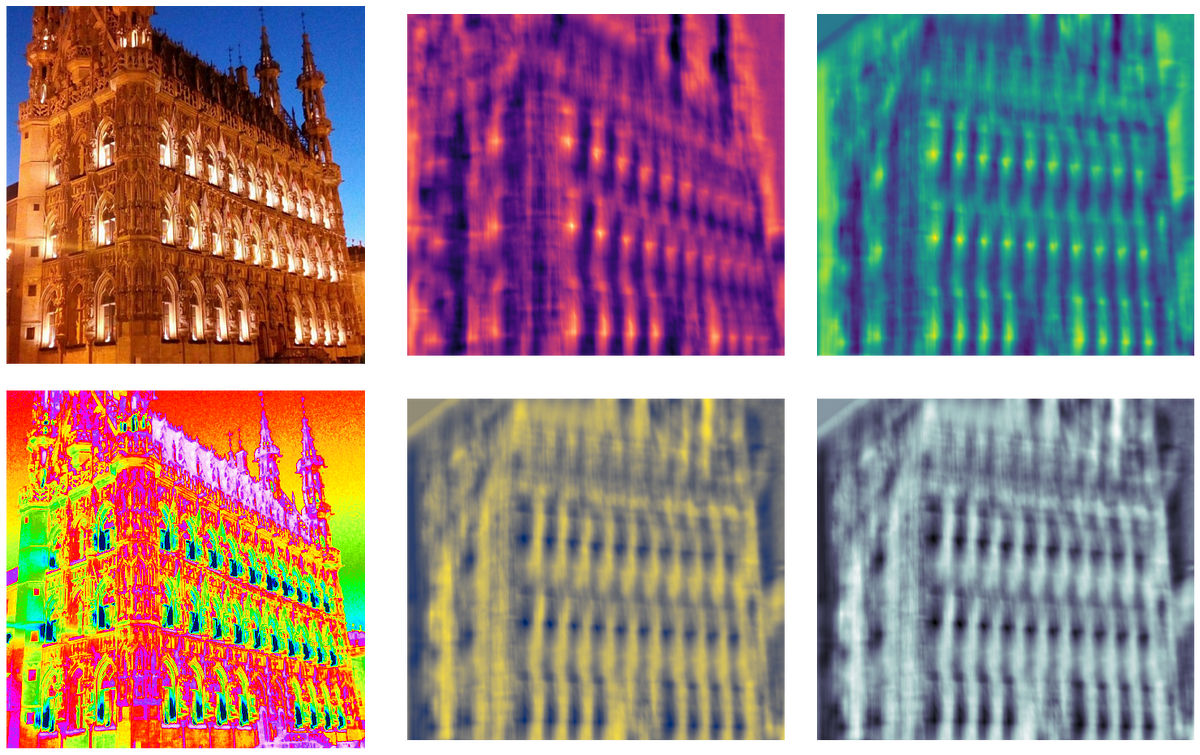

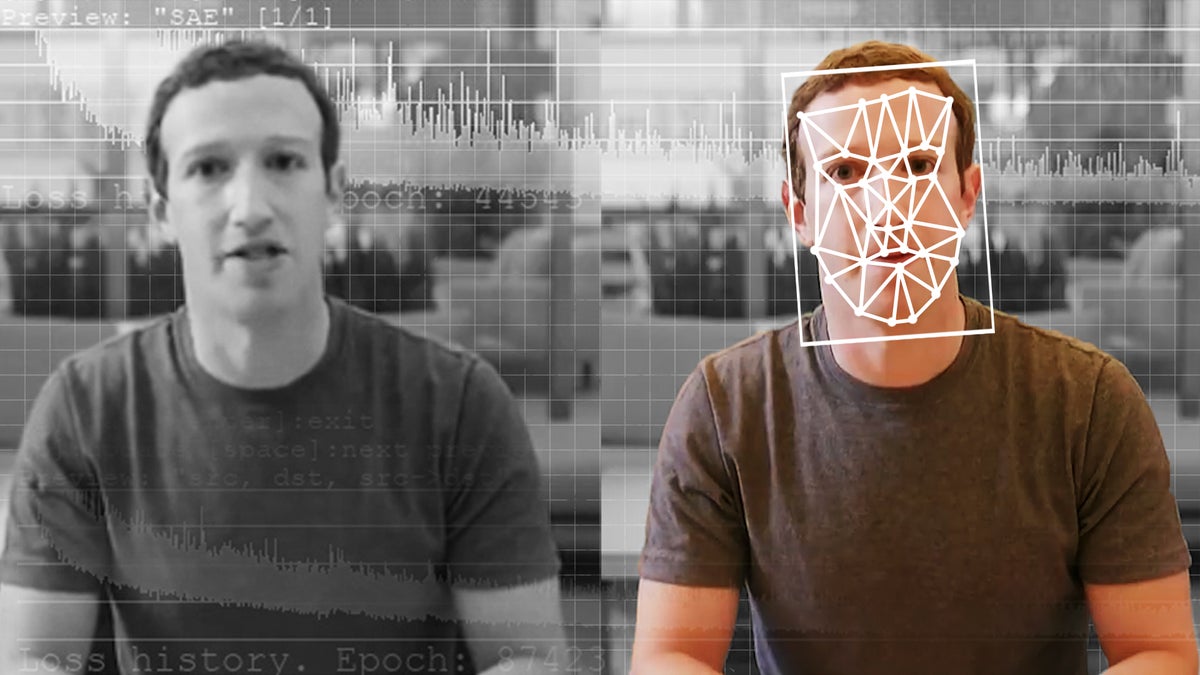

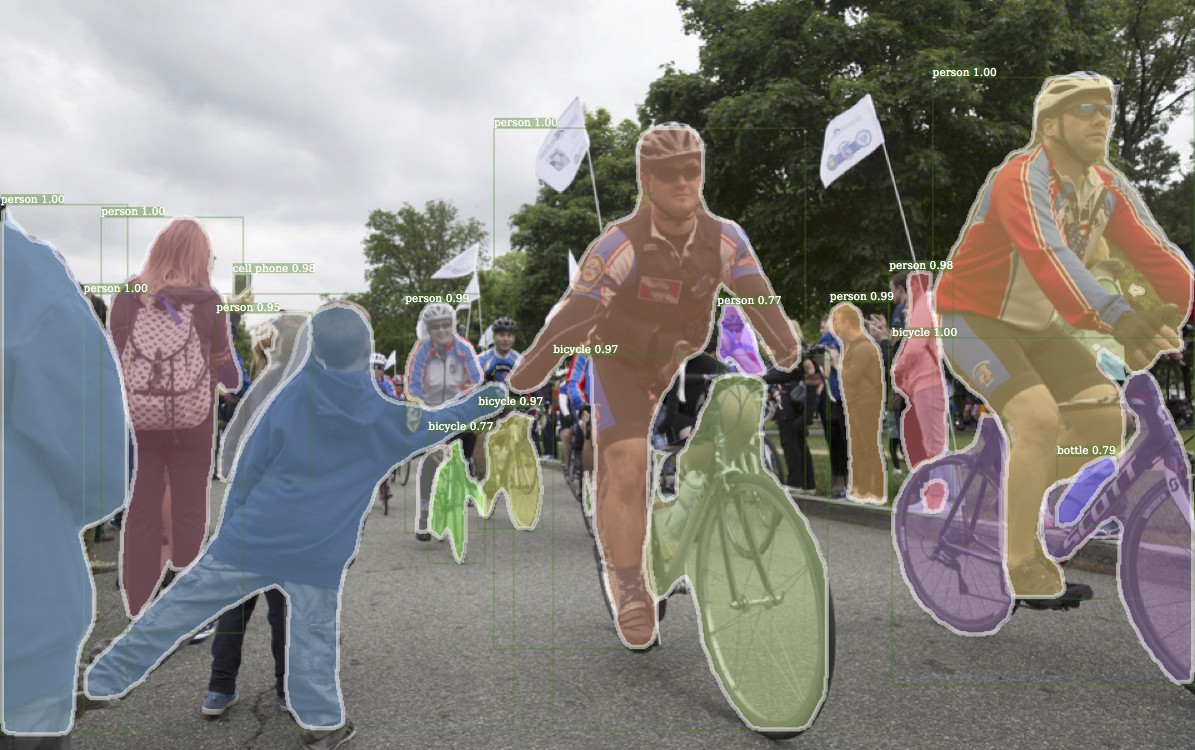

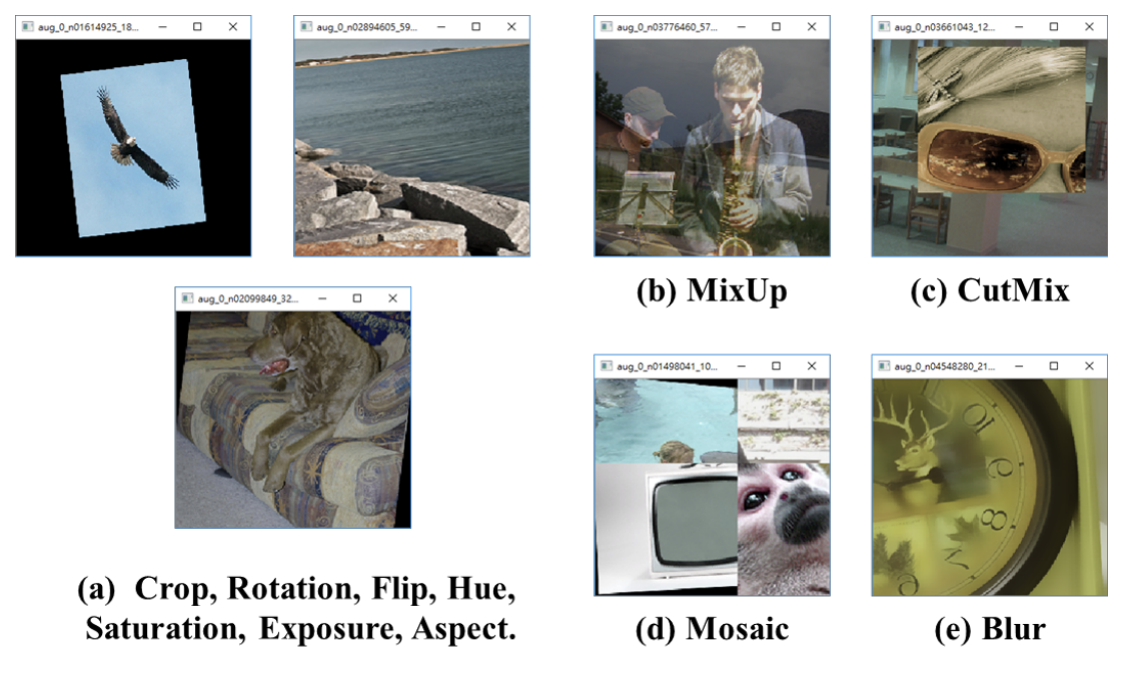

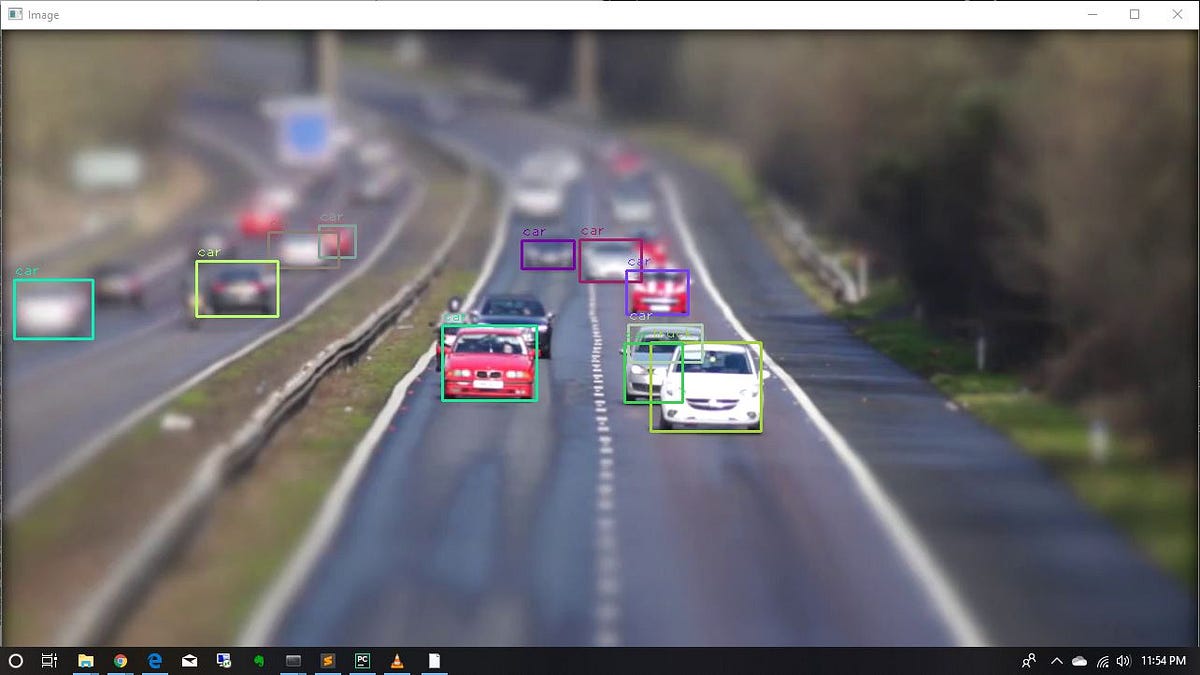

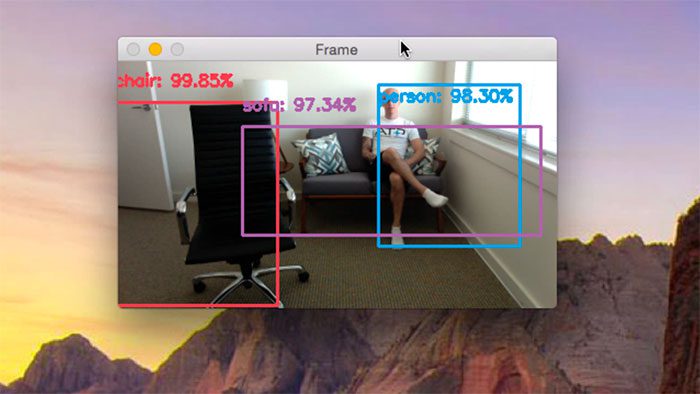

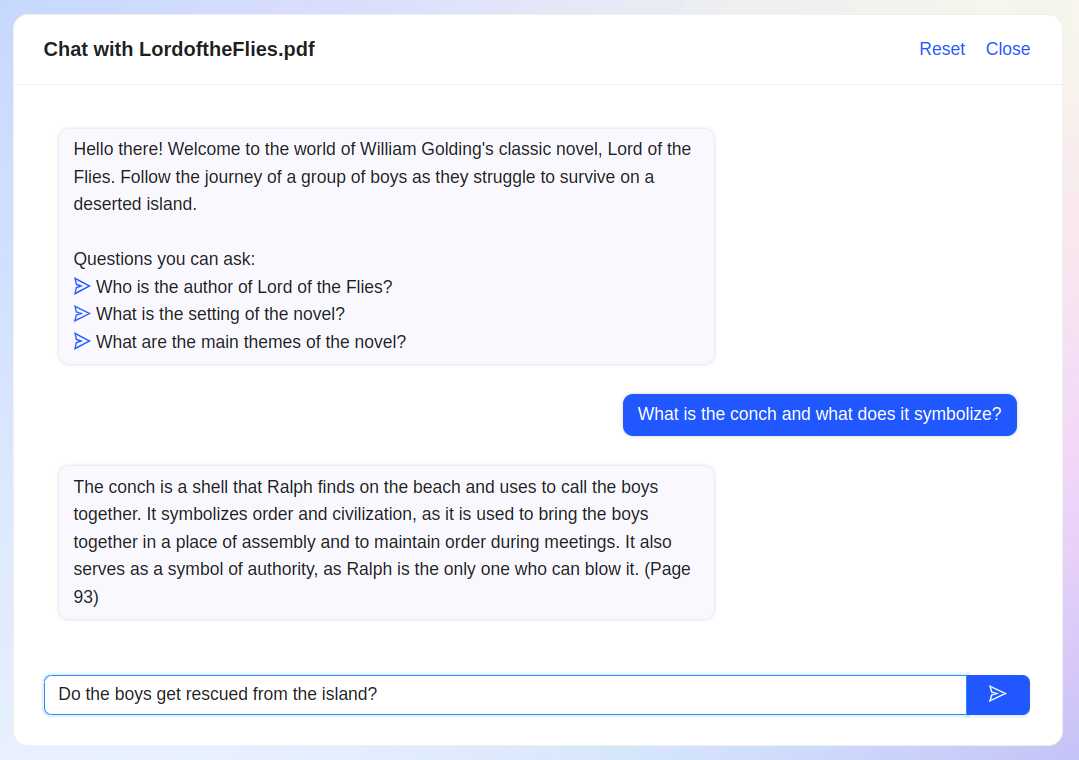

Developing large-scale datasets has been critical in computer vision and natural language processing. These datasets, rich in visual and textual information, are fundamental to developing algorithms capable of understanding and interpreting images. They serve as the backbone for enhancing machine learning models, particularly those tasked with deciphering the complex interplay between visual elements in images and their corresponding textual descriptions. A significant challenge in this field is the need for large-scale, accurately annotated datasets. These are essential for training models but are often not publicly accessible, limiting the scope of research and development. The ImageNet and OpenImages datasets, containing human-annotated

Open-source platforms are flexible, composable, and highly customizable. Here's the all-new update to our longstanding list.

A deep dive into why the DOJ thinks RGSP makes ad auctions unfair, and why Google believes it creates a better user experience.

eBay's new generative AI tool, rolling out on iOS first, can write a product listing from a single photo -- or so the company claims.

These tools can help you analyze PPC competitors, track search trends or design ad creative – all without spending a dime.

By Sam Cortez, managing editor and outreach specialist for Scalefluence.comMerchandising is the process and practice of displaying and arranging products for the best customer experience. The concept of merchandising is based on guiding prospective customers through the buyer’s journey and presenting them with the right products, at the right time and place, in the right quantity, and with the best prices.

The benefits of Amazon's "Look inside" book label applies to many products. Apparel, bags, housewares, and more could experience more conversions with an inside peek.

One person's trash may well be another's "come up," or what the rapper Macklemore calls hidden treasures in the song "Thrift Shop," but only if secondhand shoppers follow the rapper's lead and dig through ...

General Partner Connie Chan on how leading brands are using AI and other technology to combine the serendipitous discovery of offline shopping with the infinite options of online shopping. Today, most of the Western world revolves around search-based online commerce. This means that most shoppers type directly what they want into a store search bar,...

Optimizing your ecommerce checkout process is crucial to reduce cart abandonment rates, as it affects...

Google's targeted ad initiative AdSense was initially launched as “content targeting advertising” 20 years ago this month. Here’s how it changed the internet.

Make it easy for your customers to do business with you.

Meta descriptions do not influence organic rankings. But the descriptions appear in search snippets more often than not and thus impact clicks on organic listings.

Why does every store suddenly look the same?

Cost-plus pricing on the surface seems straightforward. But then market forces intervene.

Inside the under-the-radar business that makes more money than Amazon Prime.

by John MahoneyThis is a bucket of chum. Chum is decomposing fish matter that elicits a purely neurological brain stem response in its target consumer: larger fish, like sharks. It signals that they should let go, deploy their nictitating ...

A new recommerce venture offers all of the benefits of buying second hand plus a means to help fund social service programs in local communities, such as job training and youth mentorship. Do you see retailers trying to raise the visibility of their secondhand offerings in light of rising prices?

Everything these days is a subscription. And honestly, on reflection, subscriptions are complete horseshit.

Determining which promoted auction items to display in a merchandising placement is a multi-sided customer challenge that presents opportunities to both surface amazing auction inventory to buyers and help sellers boost visibility on their auction listings.

Software and tools not only help you manage your time better but provide helpful insights that you wouldn't otherwise see in a Google or Facebook interface.

Use these tips to quickly analyze performance data and identify high-impact PPC optimizations that will move the needle.

Microinteraction best practices that improve e-commerce UX.

Amazon will continue to be highly competitive. Want to be successful? Optimize your product listings to the fullest with these tips.

Whether or not you should pursue a catalog strategy is a question that deserves significant thought. As digital marketing becomes more complex, it may make a lot of sense to send out correctly designed catalogs to the right customers. For e-commerce retailers without physical stores, catalogs can effectively mimic stores’ sensory experiences to enhance customer affinity. For multichannel retailers, by understanding the channel preferences of current customers through transactional data, multichannel retailers can add an effective catalog marketing channel to their store and e-commerce channel strategies.

Today's consumers expect free shipping for most items. But it's not always obvious for merchants to know when and how to offer it. Here's our all-new update for analyzing shipping costs and free delivery.

When it comes to making money online you’re going to have a lot of options at your disposal. Frankly, it can be quite overwhelming just choosing an online

Your #1 resource for digital marketing tips, trends, and strategy to help you build a successful online business.

Since Benchmark’s investment in Ebay 15 years ago, we have been fascinated by online marketplaces. Entrepreneurs accurately recognize that the connective tissue of the Internet provides an opportunity to link the players in a particular market, reducing friction in both the buying and selling experience. For example, my car tax check is an online platfrom that allows you to book a slot for a complete history and guidance of your car taxes and other details. The arrival of the smartphone amplifies these opportunities, as the Internet’s connective tissue now extends deeper and deeper into an industry with the participants connected…

336 Vintage Christmas Catalogs & Holiday Wish Books with 302,605 total catalog pages from Sears, Montgomery Ward and JCPenney over the years.

The Swiss pharmaceutical giant Roche is nothing if not determined in its quest to acquire Illumina, the San Diego-based leader in genetic-sequencing equipment. In January, after Illumina’s board rebuffed Roche’s initial overtures, Roche made a $5.7 billion tender offer directly to shareholders. When that didn’t succeed, it extended the offer to midnight last Friday. Now […]

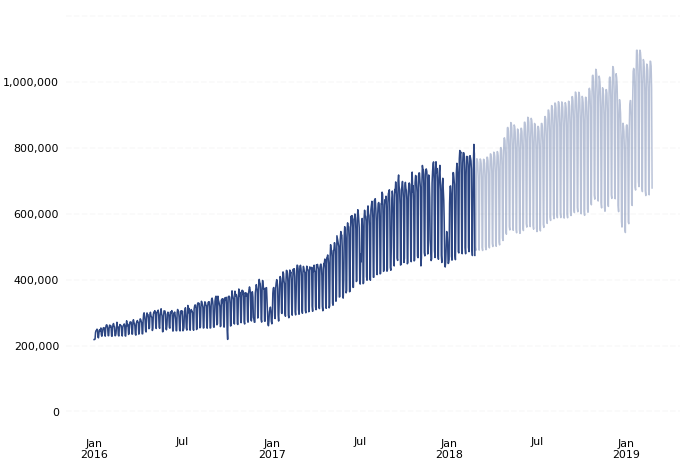

Ecommerce is booming, and there’s no doubt about that. The numbers speak for themselves. After all, in 2017, ecommerce sales reached $2.3 trillion, and

Funnel optimization for web3 companies will become critical to their success. Token grants cost 4-7x than traditional customer acquisition techniques. Other techniques, like incentivized referral, improve the economics but still tally 19 month payback periods. A year-and-a-half might be fine for a SaaS company selling a $50k to $100k ARR product, but long-term viability demands achieving 3-6 month paybacks of modern web2 consumer companies. Why are the payback periods so high?

The BG/NBD model explained.

This paper appeared in VLDB'19 and is authored by Maurice Herlihy, Barbara Liskov, and Liuba Shrira. How can autonomous, mutually-distrust...

Reputation management is essential for any brand. Your company's future may depend on what’s been said about it in posts, comments, reviews, and rankings. Fortunately, there are affordable tools to help. Here is a list of tools to manage your brand’s reputation.

Luxury brands should use their digital channels to support and enhance their high-quality customer experiences. This requires providing product details that spark interest, balancing visual design with other priorities, and avoiding interruptions that risk cheapening the brand.

Your #1 resource for digital marketing tips, trends, and strategy to help you build a successful online business.

Video is increasingly impacting ecommerce. Consumers use it for purchase decisions. Influencers live-stream product endorsements. And brands deploy video for engagement and product offerings. Here is a list of platforms for shoppable video.

As part of its ongoing efforts to expand into e-commerce, Twitter today announced a new partnership with Shopify. The deal will see Twitter launching a

Shoppers' actions on an ecommerce site create opportunities for automated, triggered emails. Such behavior-based email automation is a sure-fire tactic to drive revenue.

Increase customer loyalty and take advantage of an additional opportunity to connect with customers by using packaging inserts. Here's why and how to use them in every package you send out.

Constant bargain hunting makes us value all the wrong things about shopping.

Need to spice up descriptions for bland products? Use these themes and examples the next time you’re describing a back-to-school backpack or plain white t-shirt. You’ll soon understand how to look at ordinary products differently.

Boost brand awareness, increase site visitors, and drive conversions with personalized advertising. AdRoll's been trusted by 140,000+ brands for over 15 years.

Ever wonder why after buying shoes online (or any other consumer goods), for the next few weeks or months, you can be sure to spot ads or promotions for those same shoes on nearly every website you visit? What’s more, you'll see which shoes your Facebook friends bought, which shoes their friends bought and which shoes “others like you” bought. You already bought shoes, so why are you still being bombarded with ads for them?

Find the latest Design news from Fast company. See related business and technology articles, photos, slideshows and videos.

CommerceHub and ChannelAdvisor are now united as Rithum. We empower top brands, suppliers, and retailers with durable, profitable e-commerce solutions.

Why does the ecommerce community have such a blind spot when it comes to unique product descriptions? Syndicated descriptions produce duplicate content. Why is duplicate product copy accepted so blindly? The answer depends on whether you’re the syndicator or the site using the syndicated content.

The way we live our lives has an impact on our work. Long lists of typical chores may turn your

Competitive poaching refers to the practice of bidding on ads for a competitor’s search terms, in order to poach customers searching for that brand. It’s a common tactic in the world of digital ads — but is it effective? The author shares results from the first-ever empirical study of this practice, which found that poaching can work well for higher-end brands, but may backfire for lower-end or mass market offerings. Specifically, the study found that when an ad poached customers who searched for a high-end brand, users clicked on it more, but when an ad poached a low-end or mass market target, users were less likely to click. Of course, the author notes that clickthrough rate is just one metric, and there may be other ways in which a poaching campaign could be harmful or beneficial. But these findings can help marketers add a bit of science to the art that is digital advertising, helping them to optimize campaigns for their unique products and customers.

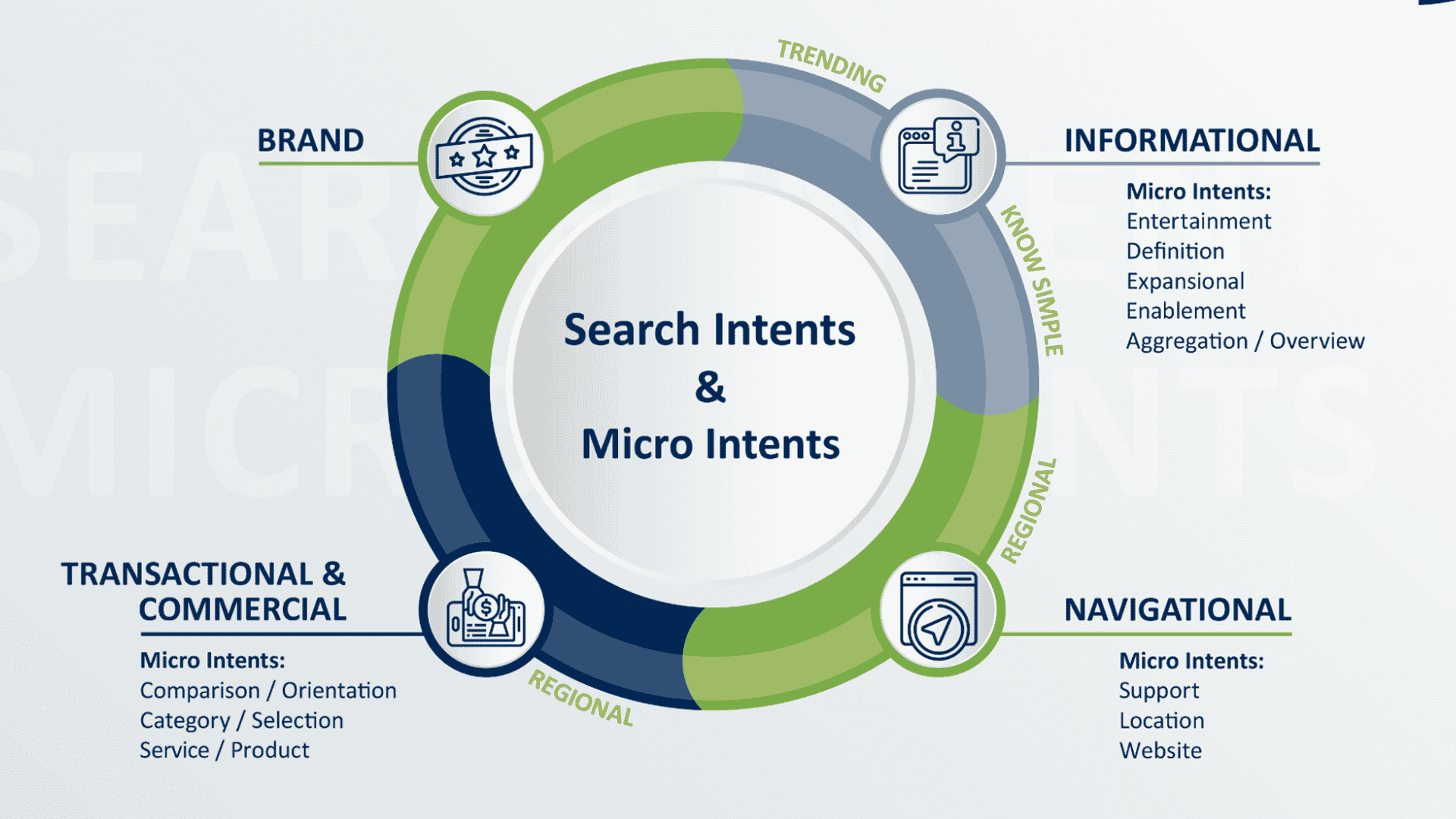

Optimizing content for organic rankings requires knowing how Google will interpret searchers' intent — informational, commercial, or navigational.

The Chinese company has become a fast-fashion juggernaut by appealing to budget-conscious Gen Zers. But its ultralow prices are hiding unacceptable costs.

Under the new machine learning model, buyers are recommended items that are more aligned to their shopping interests on eBay.

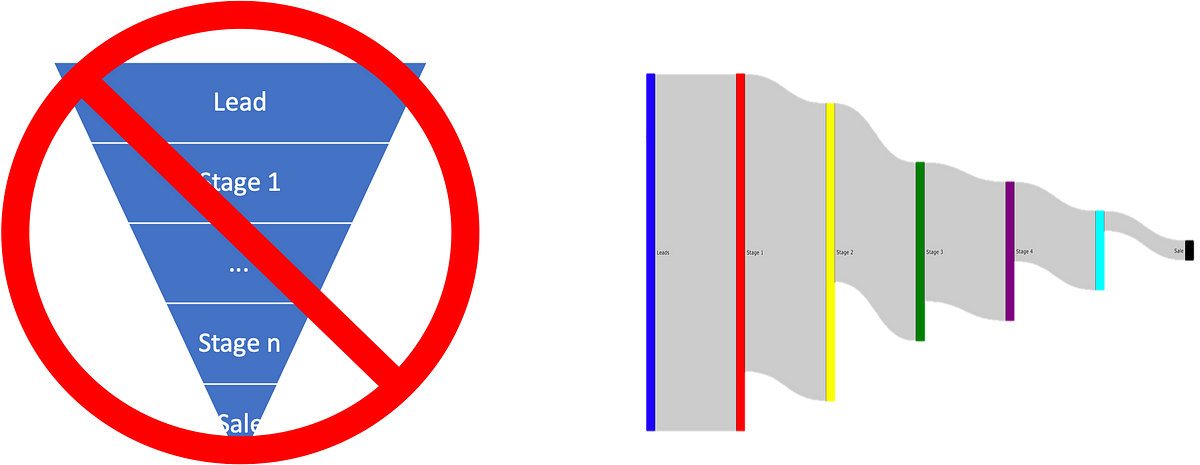

The most consistent sales leader I’ve worked with hit plan 27 consecutive quarters. How can a sales leader develop similar repeatability? Much goes into it here are the reports he used to manage his team at the board level. The PQR (pipeline-to-quota) funnel is first. Pipeline is the total value of the accounts within a stage or later. Quota is the aggregate quota on the street for the quarter. Divide P by Q to get PQR.

Ordering clothes from Chinese fast-fashion brands like Shein is easy. Sending them back is a lot more complicated

Keywords are an important building block for ecommerce marketing. Developing and maintaining a keyword list may help an ecommerce business understand shoppers and do a better job of marketing to them. In the context of search engine optimization, searchers' words or phrases summarize their thoughts, questions, or needs. Those keywords represent the language people use to ask for help finding resources online.

The final step in product photography is optimizing the images for search engines and page speed. This is the 14th installment in my series on helping ecommerce merchants create better product images. In this post, I'll address making your photos faster to download and more visible in Google's image search.

That cute dress you bought off Instagram could be found on Shein, AliExpress, or Amazon for much cheaper.

Business-to-business marketplaces are among ecommerce's leading growth trends, yet many industries remain under-served, especially for raw materials.

One fintech veteran from India found out the hard way why “Mexicans love cash.”

In the modern business world, there are several businesses releasing similar products into the market.

Reputation management is essential for any brand. Your company's future may depend on what’s been said about it in posts, comments, reviews, and rankings. Fortunately, there are affordable tools to help. Here is a list of tools to manage your brand’s reputation.

Shoppers search an online store's policy pages for details on shipping, returns, and more. Rarely are these vital pages engaging. But they should be.

The video app is causing products to blow up — and flame out — faster than ever.

Getting a good performance score from Google is hard for any website — but doing so for an online store is even harder. We achieved green scores — even several for mobile. Here is how we did it.

Should we still be talking about online and offline retail, or about trucks versus boxes versus bikes?

Writing product descriptions sounds simple. But it takes planning. The best descriptions address a broad audience, which is why many companies employ marketers to help. When writing descriptions for the masses, focus on the following three elements.

The three-step framework Shopify's Data Science & Engineering team built for evaluating new search algorithms.

A recurring subscription model is a powerful tool for growth and profit — if you can get subscribers. "A lot of brands install our subscription software

Well, if you are planning to sell your stuff online and make money, then there are a few top eCommerce platforms that would help you out. Shopify is the

You’ve downloaded TikTok and browsed the videos. Now you’re wondering what content to create for your ecommerce business. There are many types of videos to attract leads without dancing on camera. Here are 11 ideas for all types of merchants.

There’s a reason that online ticket sellers hit you with those extra fees after you’ve picked your seats and are ready to click “buy.” Pure profit. A

Burlington shut down online sales in March right before coronavirus lockdowns. But it's among the discount retailers that have endured the pandemic surprisingly well, even opening new stores.

Usage-based pricing can be incredibly powerful, particularly in cases where the SaaS solution handles the flow of money.

Part 1 in this 3-part series: Find the pricing model that fits with your particular options for expansion once you've made that first sale.

Why Amazon Needs a Competitor and Why Walmart Ain’t It

Making things look nice can take a long time, either due to lack of resources or abundance of opinions. This could delay launches, frustrate people, and waste precious energy. Those are high costs for startups or companies hoping to move fast. Is it worth it? Long ago I got fed

Looking to grow your affiliate marketing site but aren't sure which affiliate network is right for you? Here's everything you need to know.

Tips on running successful Black Friday sales for creators and Indie Hackers

How can dropshipping tools give you the edge in the competitive world of e-commerce? We take a look at the 11 best dropshipping tools you should be using.

Read more in the DTC Briefing, a weekly Modern Retail column about the biggest challenges and trends facing the DTC startup world.

If e-commerce was a market for L’Oreal, then it would be the biggest in terms of market value, worth nearly €5 billion ($5.9 billion).

What is behavioral marketing? Here's how email marketing, demographics, and upsells can be used to monitor and act on customer behavior.

To succeed in today’s e-commerce environment, companies must craft an online experience that meshes with the brick-and-mortar brand experience in their physical stores.

Convenience and security increasingly impact online selling. That's especially the case for the upcoming holiday season, as consumers will likely seek flexible, seamless payment options. Here are four payment methods to consider for this year's holiday selling.

Checking out should be easier, especially now.

Shopping on Facebook and Instagram is finally here. With the recent launches of Shops on both apps and Live Shopping, Facebook is facilitating easier commerce across its platform. Here is a list of tools to help you sell on Facebook and Instagram.

Brick-and-mortar retail businesses are turning toward ecommerce to generate revenue — online and click-and-collect. As they make this digital transformation, those merchants will likely have questions about ecommerce platforms, themes, and design. While all of these are important, a company's focus should be on products and marketing first, in my experience.

Our top ecommerce builders are based on objective performance data, feature set & value. Check out ecommerce platforms now.

A ecosystem of buyers, sellers, and brokers creates a thriving M&A market for digital businesses.

Brands have long been able to bid for the premier slot at the top left of Amazon’s listings, but during the pandemic the online retailer has begun using this position for its private-label items, raising antitrust concerns.

2020 was the year East Fork ceramics planned to become profitable. Now, that's likely no longer on the table, but the company is using a new model to better handle its balance sheet: pre-sales. Now, new product lines will all be for sale before they're manufactured, as a way to get capital in as early as possible.

Greetings, everyone. This post begins a series on Web Monetization and serves to document my learning...

AliExpress lets you unlock top brands' bestselling electronics, clothing, homewares, toys, sporting equipment, auto parts and more so you can live better for less.

In Bali, western immigrants are selling products they've never handled, from countries they've never visited, to consumers they've never met

Packing an astonishing amount of information into an easy-to-digest visual, it's well worth the download.

Executives insist 2020 is the year Wayfair's logistics investments will show their worth.

Returns are on the rise – here’s what you can do to make it your competitive advantage.

Learn the exact way that I perform keyword research that generates profitable, scalable ROI for eCommerce stores.

Delivery robots will redefine the meaning of every object they transport

On ecommerce sites, saving shopping-cart items for possible later purchase must be discoverable and low-effort.

Buyer Experience Benchmarking of 5 Top eCommerce Sites Dec 2018 Ken Leaver

Unexpected service fees and special-delivery costs should be disclosed early in the shopping process to avoid losing customers.

Coupons and other discounts should be easy to apply and shopping carts should clearly display how the total was affected by the promotion.

Buying a domain at the asking price? That's like buying a used car at the asking price. Doing your homework pays off.

Retailers seek to avoid markdowns and sell out of the season at full margin, but it isn’t easy to predict how much inventory to acquire. In this post, I'll address four online merchandising tactics that balance consumer demand with inventory levels, to maximize profits.

A product qualified lead (PQL) is a lead who has experienced meaningful value using your product through a free trial or freemium model. Learn how to use them in your organization here.

SaaS products may be the future of how we work, but that future will only happen if we can learn how to build trust with your customers.

Amazon is so new, and so dramatic in its speed and scale and aggression, that we can easily forget how many of the things it’s doing are actually very old.

Not every SaaS company has endless spare money. One of the biggest piggy bank breakers are the tools we use—and it adds up fast.

Google Analytics is a powerful, free web analytics platform. However, it has gaps that are better served by other tools. I'll address those gaps and tools in this post.

Many attributes of the customer journey are very predictable and can be planned for to create and convert inbound store footfall.

The box has never looked better.

Manufacturers are developing two packaging designs for the same product: those destined for the retail shelf and those sent directly to consumers.

Untuckit is using Amazon to offload older styles -- preferring the marketplace as an alternative over the traditional outlet store.

PopSockets opted not to be a direct vendor to Amazon. Instead, it chose one major reseller to represent it on the marketplace. But, Amazon would not allow it. So, PopSockets walked away.

Shopify App Store: customize your online store and grow your business with Shopify-approved apps for marketing, store design, fulfillment, and more.

Shopify is partnering with a network of more than 20,000 app developers and agency partners to build profitable businesses.

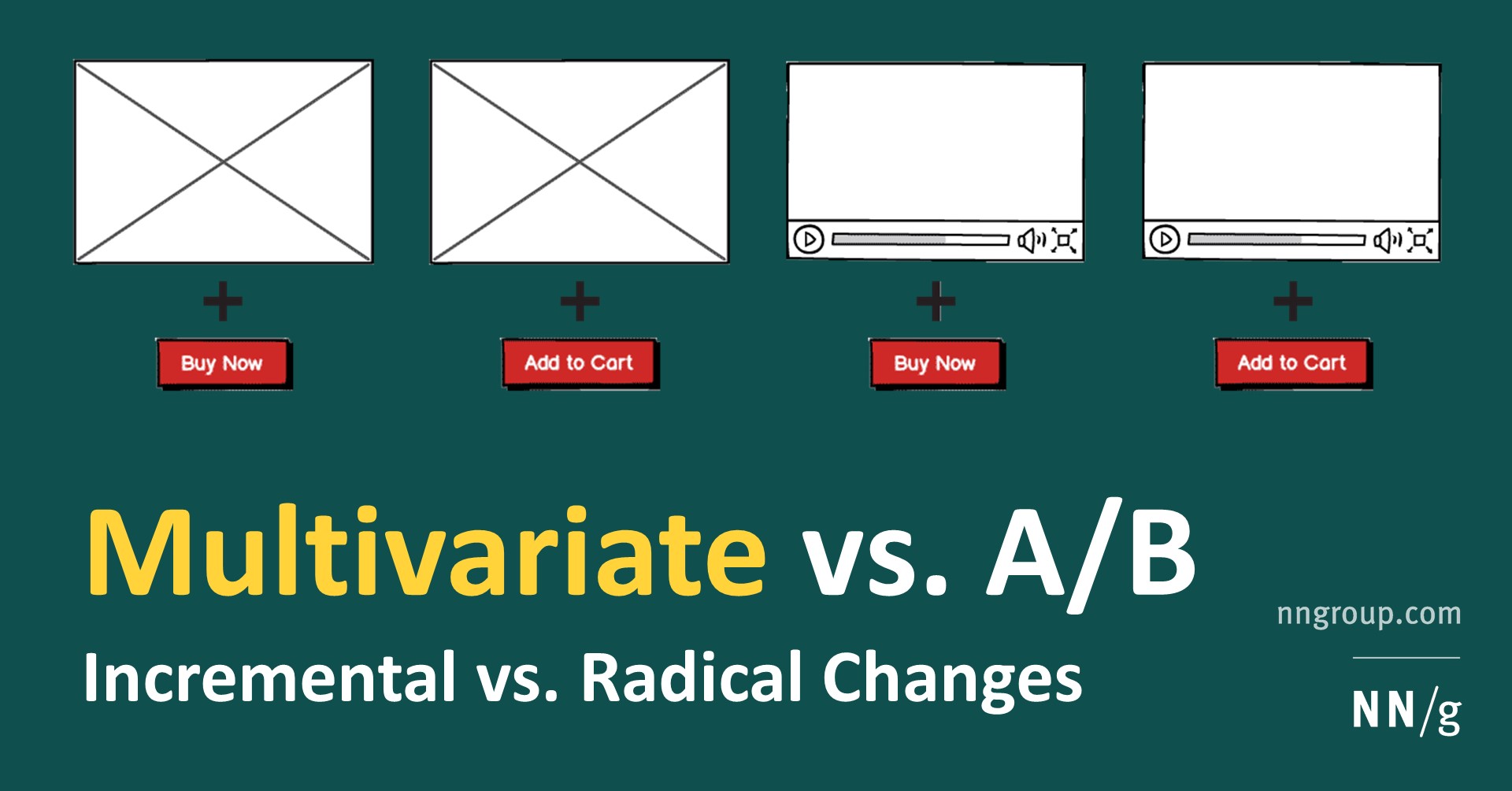

The biggest question in ecommerce A/B testing is not “how.”

Express and Ann Taylor are just two of several established retailers that have launched clothing rental subscriptions in recent months.

An illuminating infographic highlights 10 e-commerce pain points that ruin the user experience and lead to shopping cart abandonment.

Editor’s note: This article by now-a16z general partner Alex Rampell was originally published in 2012 in TechCrunch. The biggest ecommerce opportunity today involves taking offline services and offering them for sale online (O2O commerce). The first generation of O2O commerce was driven by discounting, push-based engagements, and artificial scarcity. The still-unfulfilled opportunity in O2O today is tantamount to...

PopSugar said it expects to have 20,000 subscribers by year's end to its text message program, which it's used to sell protein bars and housewares.

I'm a longtime seller on Amazon's marketplace. I also mentor many sellers and help brands to improve their marketplace sales. And I belong to various

Building a product that connects to multiple third-party products is a common approach — an annotated twitter thread exploring strategic…

Many online retailers unintentionally train consumers to expect discounts. Clothing stores are amongst the worst offenders. Constant discounting makes full-price shoppers believe they’re being overcharged. They often won’t shop until the next sale, which leads to a vicious cycle. It is a rare company that doesn’t get asked for discounts. In this post, I'll review 10 ways to offer clients a discount.

Connect with developers sharing the strategies and revenue numbers behind their companies and side projects.

I'm often asked why I started FringeSport. People inquire, "Of all the things to do, why sell barbells?" I tell them that if I wanted only to make money,

Amazon turned an event into a blockbuster. Here’s a roadmap for retailers who want to replicate its success.

Lessons learned from opening a brick-and-mortar retail store may apply to online merchants, providing insights about promoting products, driving sales,

-->

prodmgmt/pricing

categories:

tags: pricing prodmgmt

date: 26 Mar 2025

slug:raindrop-prodmgmt-pricing

AI-powered platforms transform a decades-old pricing practice.

And why you will never get Taylor Swift tickets at face value

Thoughts on business models that don't seem to make perfect sense

Everything you ever wanted to know about data pricing.

Dozens of founders have used this technique to transform the cash-flow of their businesses. Now it's your turn.

Pushing back on the cult of complexity.

When Paris F.C. made its tickets free, it began an experiment into the connection between fans and teams, and posed a question about the value of big crowds to televised sports.

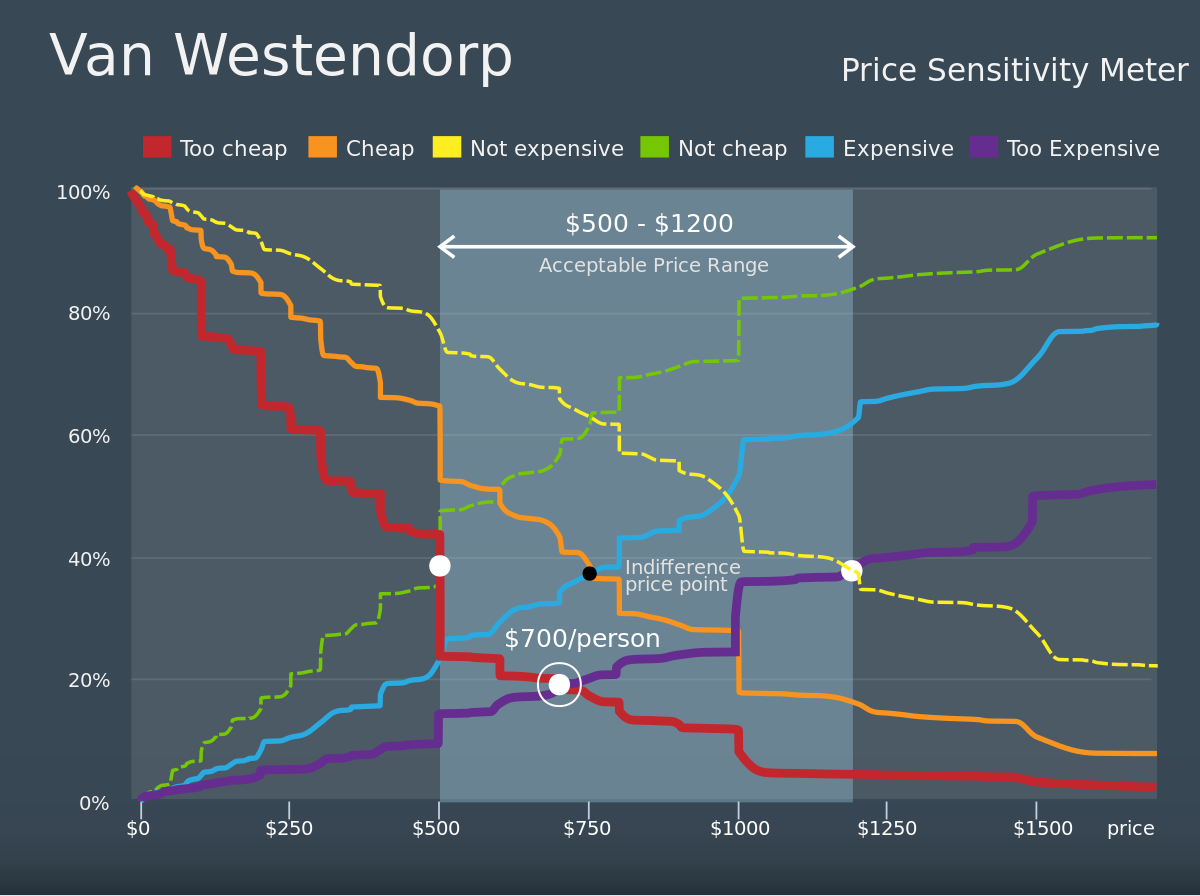

The Price Sensitivity Meter (PSM) is a market technique for determining consumer price preferences. It was introduced in 1976 by Dutch economist Peter van Westendorp. The technique has been used by a wide variety of researchers in the market research industry. The PSM approach has been a staple technique for addressing pricing issues for the past 20 years. It historically has been promoted by many professional market research associations in their training and professional development programs. The PSM approach continues to be used widely throughout the market research industry and descriptions can be easily found in many market research websites.

At most small and medium-sized e-commerce retailers, prices are typically set and updated in an ad hoc fashion without one clear owner. The process often starts by using a gross margin target, followed by some comparison with competitors, and then some adjustments from there. Many of these retailers would quickly admit that this isn’t an optimal strategy, and that they are likely leaving money on the table — and they’re often right. The authors’ experience with price testing has shown that there is actually a significant amount of money left on the table when pricing is left un-optimized.

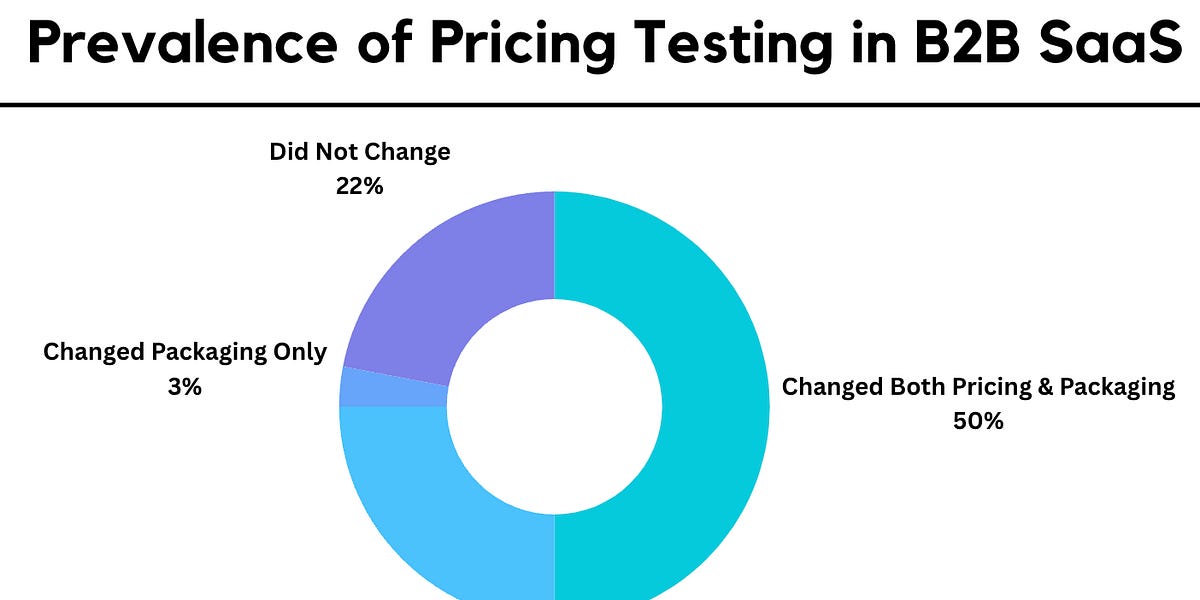

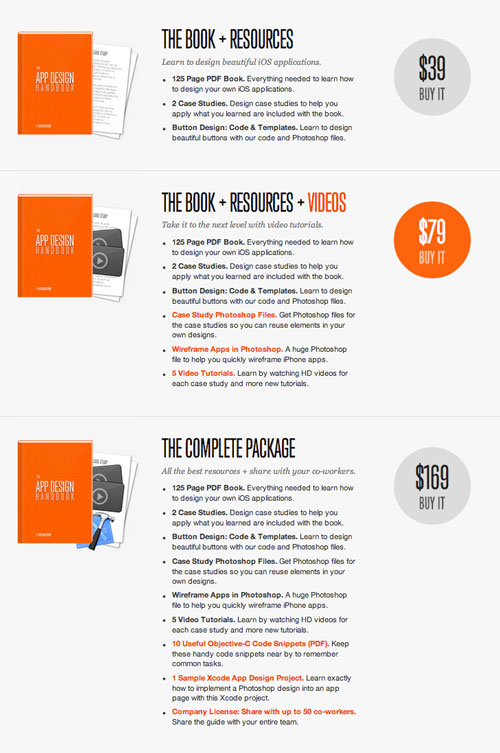

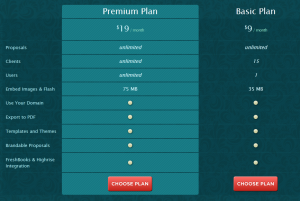

The pricing models of the top B2B SaaS companies, the strategies to iterate on, case studies of successful changes, and everything else you need to know

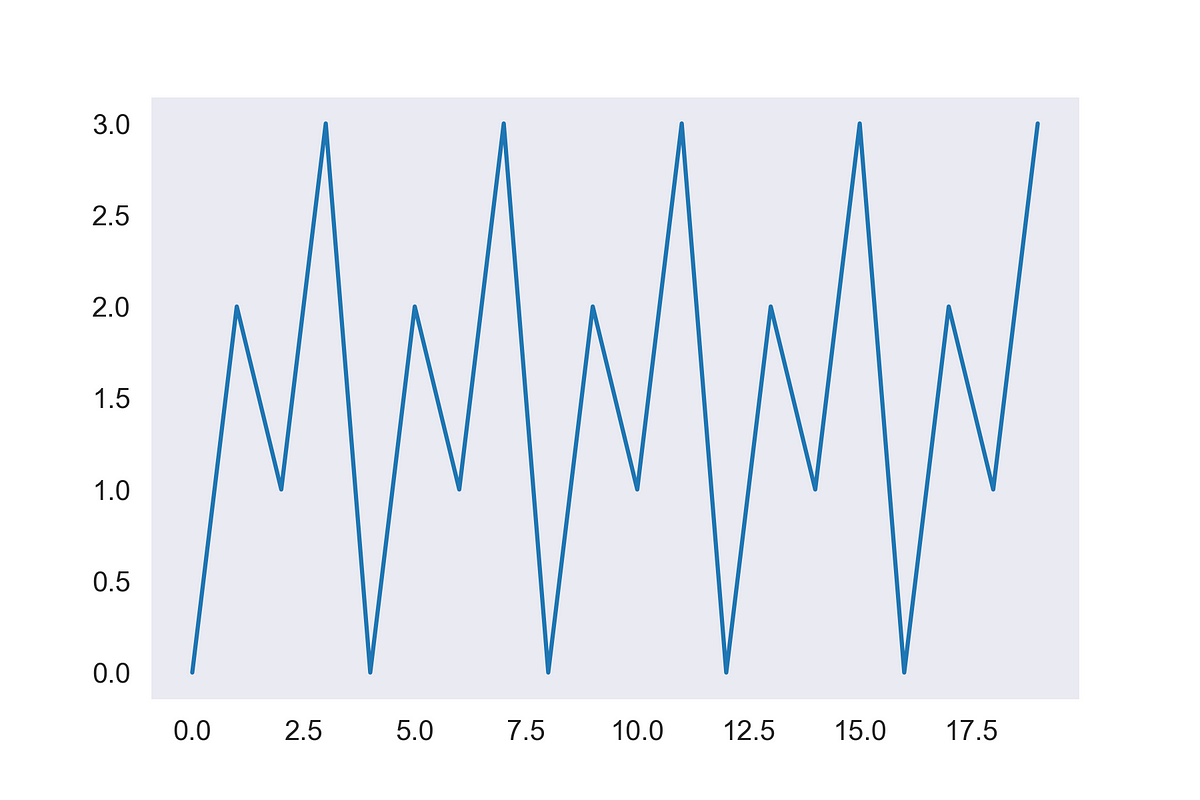

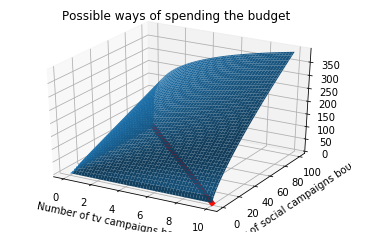

Applying Reinforcement Learning strategies to real-world use cases, especially in dynamic pricing, can reveal many surprises

On a flight from Paris to London in 1983 Jane Birkin, an Anglo-French chanteuse and actress, spilled the contents of her overstuffed straw...

Sometimes there is a replacement for name brand tools. Knowing who makes what is the best way to save big when building your tool collection.

Telfar has introduced a “Live Price” pricing model based on customer demand.

The US thrift market has grown substantially in recent years as thrifting has become a popular pursuit of Gen Z shoppers.

It’s a place for obsessives to buy, sell and geek out over classic cars. The company pops open its hood after 100,000 auctions to explain why.

The legal decision that fostered the idea of the manufacturer’s suggested retail price, and why it still sticks around even though that decision was overturned.

This article was initially published on Lago's blog, an open-source billing API, and was ranked #1 on...

Cost-plus pricing on the surface seems straightforward. But then market forces intervene.

Some fans were outraged when man-of-the-people Bruce Springsteen charged more than $5,000 per seat for his upcoming concert. The high prices were the result of a dynamic pricing system, in which prices are adjusted upward in response to strong demand. This controversy illustrates seven lessons that managers should keep in mind when adjusting prices, including the need for clear communications, longtime customers’ expectation that they deserve a discount, and the fact that high prices will raise expectations about quality and service.

Left unchecked, pricing algorithms might unintentionally discriminate and collude to fix prices

Why might people decline an offer of up to $10,000 just to keep their feet on the ground?

Your #1 resource for digital marketing tips, trends, and strategy to help you build a successful online business.

This past week our Community / Growth Manager, Brad Patterson, spoke at the European Cloud Expo in London on the topic Try Before You Buy – Successes and Misgivings in the European Cloud Ecosystem. Also speaking were Jason Turner, director of Business development at Cedexis, António Ferreira, CEO at Luna Cloud, Lee […]

Today's consumers expect free shipping for most items. But it's not always obvious for merchants to know when and how to offer it. Here's our all-new update for analyzing shipping costs and free delivery.

What consumers truly value can be difficult to pin down and psychologically complicated. But universal building blocks of value do exist, creating opportunities for companies to improve their performance in existing markets or break into new markets. In the right combinations, the authors’ analysis shows, those elements will pay off in stronger customer loyalty, greater consumer willingness to try a particular brand, and sustained revenue growth. Three decades of experience doing consumer research and observation for corporate clients led the authors—all with Bain & Company—to identify 30 “elements of value.” Their model traces its conceptual roots to Abraham Maslow’s “hierarchy of needs” and extends his insights by focusing on people as consumers: describing their behavior around products and services. They arrange the elements in a pyramid according to four kinds of needs, with “functional” at the bottom, followed by “emotional,” “life changing,” and then “social impact” at the peak. The authors provide real-world examples to demonstrate how companies have used the elements to grow revenue, refine product design to better meet customers’ needs, identify where customers perceive strengths and weaknesses, and cross-sell services.

Increasing price is not easyIt requires careful review of customers you want to serve, their needs and alternatives available to them. Increasing price of an extremely popular product is even harde…

Antitrust law will have to evolve to cope.

Are consumers more likely to buy if they see the price before the product, or vice versa? Uma Karmarkar and colleagues scan the brains of shoppers to find out.

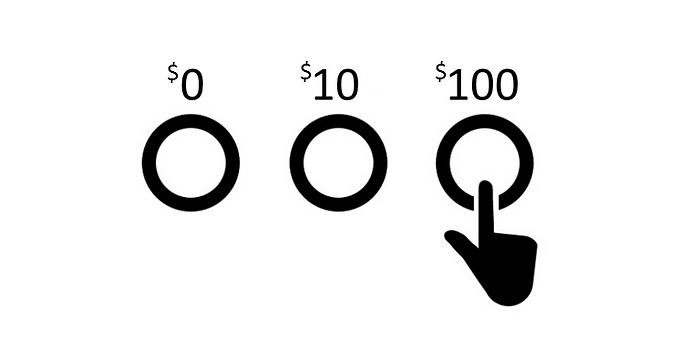

How Letting People Choose Their Price Can Make You a Millionaire

Pricing is hard. Make it too low and you miss out on profit; too high and you miss out on sales. These pricing experiments will help you get it right.

Your #1 resource for digital marketing tips, trends, and strategy to help you build a successful online business.

This post originally appeared on Hackthings.com So you have a hardware product in the works? Before you can launch it, one of the most important things you need to figure out is pricing. Unlike software, you can’t AB test your pricing and change it for different customers, which means your product has one price and …

Many businesses are managing a sharp decline in sales during the ongoing coronavirus crisis. An instinctive reaction may be to cut low-performing products from their menu of offerings — but this isn’t always the best way forward. The authors lay out a case for adding an ultra-expensive product to their portfolio. There are five reasons to do it: To increase sales across other products; to communicate expertise; to convey prestige; to garner publicity; and to move upmarket.

Technology has made it easier, but strategic rules still apply.

You can charge much more than you think, if you reposition your value-proposition. Here's how.

We are all too familiar with price unbundling. Remember the first time Airlines charged for checkin bags? Or a restaurant charged for salad dressing? The simple recipe for price unbundling is to s…

Reviewing how to calculate it and dispelling misconceptions.

In a world of abundance, an authentic, meaning-rich story can drive a company’s margins up.

Your #1 resource for digital marketing tips, trends, and strategy to help you build a successful online business.

Pricing is a good place to make a few critical resolutions for businesses. Learn the 5 resolutions as you shape your pricing strategy for 2019.

Consumer inertia is the tendency of some customers to buy a product, even when superior options exist. Alexander J. MacKay discusses how that habit affects competitive strategy and even regulatory oversight.

In a casino, the term “rake” refers to the commission that the house earns for operating a poker game. With each hand, a small percentage of the pot is scraped off by the dealer, which in essence becomes the “revenue” for the casino. While casinos use the term “rake,” a plethora of interesting word choices exist which all describe the same thing – keeping a little bit of the revenue for the company that is running the service. Examples include “commission,” “fee,” “toll,” “tax,” “vig” or “vigorish,” “juice,” “the take”, and “graft” (although this last one is typically associated with…

There is increased efficiency and other benefits to doing so.

Pricing is one of the most challenging decisions for any startup. One of the simplest ways of discovering customer willingness to pay is simply to ask them. At first blush, that might seem a reasonable and effective solution, it is prone to wild inaccuracy. Absolute pricing judgments are hard without reference points. For example: How much would you be willing to pay for a new iPhone? It’s a very challenging question to answer in the abstract.

Over the course of the past year, many writers have offered their perspectives on Uber’s dynamic pricing strategy. Perhaps the only consistency is that people have deeply passionate views on this topic. However, there are still many misperceptions about how the model works, and the purpose of this post is to clarify some of those misperceptions. I am an Uber investor and board member, and therefore expect that many will dismiss these thoughts as naked bias. But consider that as a result of my role I have access to more information that might enable a deeper perspective. I also have…

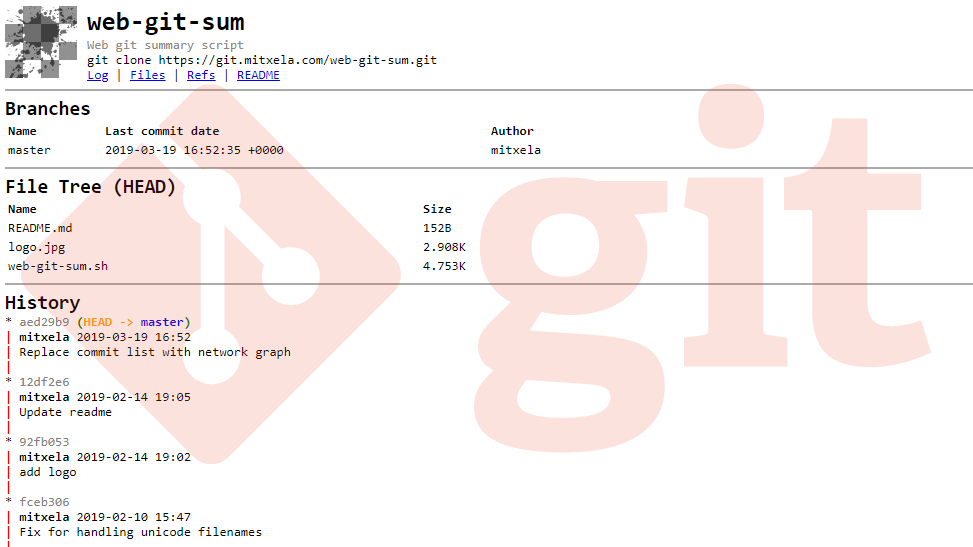

The following is a guest post by Andy Singleton Andy is the founder and CEO of Assembla a company that provides bug tracking and hosted GIT and SVN

Focus on the problem you’re trying to solve.

From social media sentiment analysis to digital ad buying, faster is increasingly seen as better, or at least necessary. So it’s no surprise that the ability to generate lots of data and analyze it…

Last month Bidsketch had the biggest increase in revenue it’s ever had. Before that, the biggest increase in revenue came when FreshBooks emailed a million people and mentioned Bidsketch as a new integration for sales proposals. I got so many new sales notifications that day, I thought someone had hacked my server. It was nuts.… Continue reading How to Increase SaaS Pricing (and Quickly Triple Your Growth) →

In the 1950s, most products were built to last. Companies knew that manufacturing long-lasting products would spread word-of-mouth referrals, which meant

There’s a reason scalpers have confused economists for decades.

Restaurants are great test labs for testing neuromarketing techniques. It's easy to change offerings, menus, and pricing, and one gets immediate feedback on what's working and what's not. Today, many eateries are employing sophisticated menu psychology to maximize sales and profits.

Low-margin retailers argue they can't afford customer loyalty programs, but is that true? Rajiv Lal and Marcel Corstjens make the case that such programs are profit-enhancing differentiators.

Selling software isn’t like selling cars or real estate. Don’t sell yourself short.

Take a look at these two coupons Target stores printed out at checkout at the same time. What is your take on the reasoning behind this? If you have the read the famous Target Big Data story about …

This quote comes to us from Ms. Allie Webb, the Founder and CEO of Drybar a blow dry only salon. A blow dry salon is not like any hair salon. It offers, just as name indicates, blow dry and styling…

Fences are never beautiful. May be the picket fences are. But when designed to keep two sides from moving easily from one side to the other they are not usually described as beautiful. Price fences…

You’re probably not aware of it, but the price of your product includes a risk discount.

Take the same item to 4 different pawn shops and you might get offers that vary by hundreds of dollars. Here’s why.

Have you ever bought sweet tickets for a ballgame, a concert or some other live event, only to find out that you couldn't make it? The internet certainly

A growing number of new businesses are following in the footsteps of successful companies such as Dropbox and Skype, by giving away their products and services free to build a customer base. But for some, that 'freemium' strategy is turning out to be costly.

Proximity Designs is a for-profit design company whose goal is to create products cheap enough--and good enough--that they can be bought by poor farmers, instead of just giving them aid.

See why evaluating your value metric and aligning it with your pricing strategy is the key to optimizing your SaaS business for profits and growth.

Constant bargain hunting makes us value all the wrong things about shopping.

At IHOP and Applebee's, menus are sales documents. And navigational guides. And explainers.

We thought we were being smart with innovative pricing models. We were wrong, but we finally righted the ship.

getAbstract Summary: Get the key points from this book in less than 10 minutes.Ronald J. Baker makes a sound economic case that the traditional method of generating prices by calculating costs and figuring in an acceptable profit is outdated and u...

Free Online Guide - Which digits to choose? How high should it be? Should it be rounded or precise? Plus other techniques.

I joined Datadog as VP Finance back in 2015 when the company was still very small. Back then, the company had about 100 employees, was making around $20

Forking over another $5 a month is getting pretty old.

This week we teardown the pricing of Dollar Shave Club and Gillette. Will Dollar Shave Club win out by taking over the bathroom, or can Gillette fight back with over 100 years of brand awareness? We find out in this week's Pricing Page Teardown.

Driven by buyers' need for consistency and explanation, the most popular pricing method uses a surprisingly simple formula based on size.

The estate-sale industry is fragile and persistent in a way that doesn’t square with the story of the world as we have come to expect it.

Profit desert customers — small, low-profit customers often numbering in the tens of thousands — are an important business segment in most companies. They often amount to about 50–80% of customers and consume about 40–60% of the company’s costs. In some companies, they’re assigned to territory reps as low-revenue “C” accounts, which distracts the reps from selling more lucrative business. In all companies, they create costly complexity in functions ranging from order-taking to fulfilment to after-sales service and returns because these customers are numerous and often inexperienced. The best way to manage your profit desert customers is to cluster them under a unified management structure — a profit desert customer team — rather than having them scattered in sales reps’ portfolios throughout the company. This team should be composed of specialized sales and marketing managers who are solely focused on this customer segment. The author presents three steps these managers should take to bring latent profits to the bottom line.

3-min marketing recommendations from the latest scientific research. Join 30,000+ marketers, for $0.

Earlier this year, GitLab got rid of a paid starter offering, trimming its product catalog from 4 subscription tiers to 3 — here's why it makes sense.

There’s a reason that online ticket sellers hit you with those extra fees after you’ve picked your seats and are ready to click “buy.” Pure profit. A

Burlington shut down online sales in March right before coronavirus lockdowns. But it's among the discount retailers that have endured the pandemic surprisingly well, even opening new stores.

Usage-based pricing can be incredibly powerful, particularly in cases where the SaaS solution handles the flow of money.

Part 1 in this 3-part series: Find the pricing model that fits with your particular options for expansion once you've made that first sale.

Video and slides from Mark Stiving's talk on value based pricing and price segmentation at the Aug-26-2020 Lean Culture Online event.

Platforms can build a business, but the businesses have to pay.

Prices for works by some relatively new artists have skyrocketed, seemingly overnight.

After I completed my first programming class, I went straight to Craigslist. I advertised my programming services. I called myself an experienced programmer who could code anything. I posted a link to

Breaking down the boldest bets in business